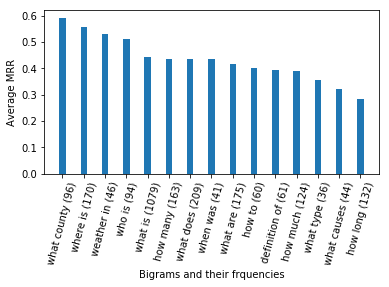

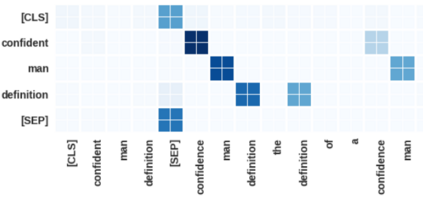

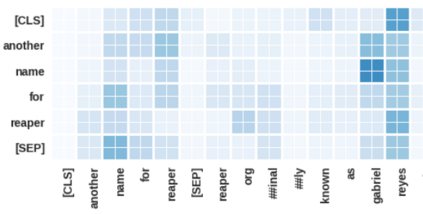

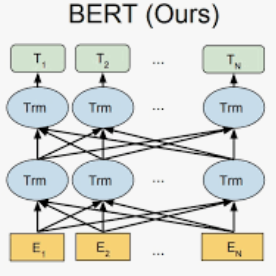

The Bidirectional Encoder Representations from Transformers (BERT) model has recently advanced the state of the art in passage re-ranking. In this paper, we analyze the results produced by a fine-tuned BERT model to better understand the reasons behind these substantial improvements. To this end, we focus on the MS MARCO passage re-ranking dataset and provide potential reasons for the successes and failures of BERT for retrieval. Specifically, we empirically study a set of hypotheses and provide additional analysis to explain the successful performance of BERT.
