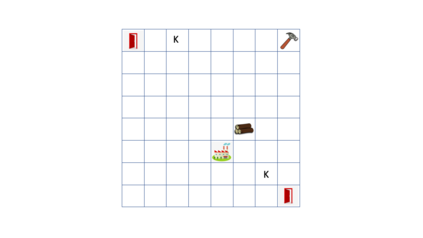

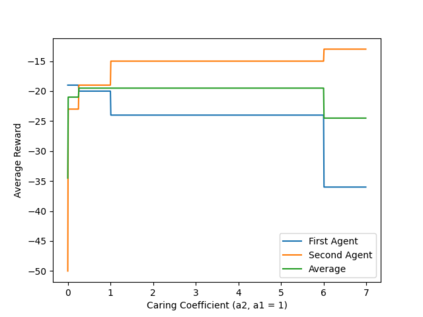

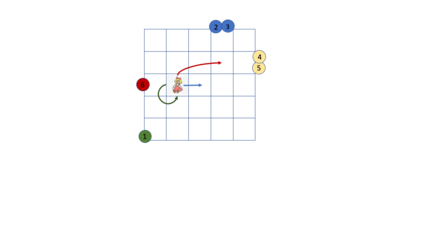

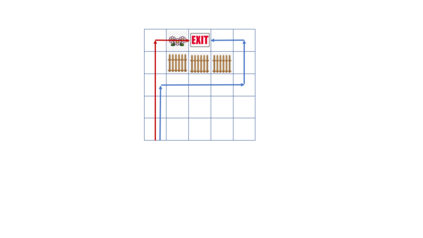

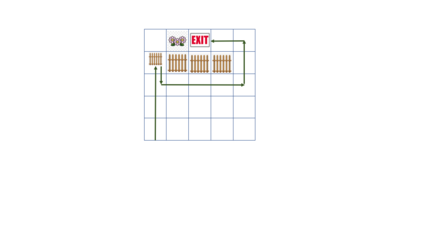

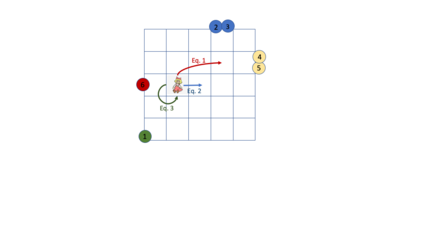

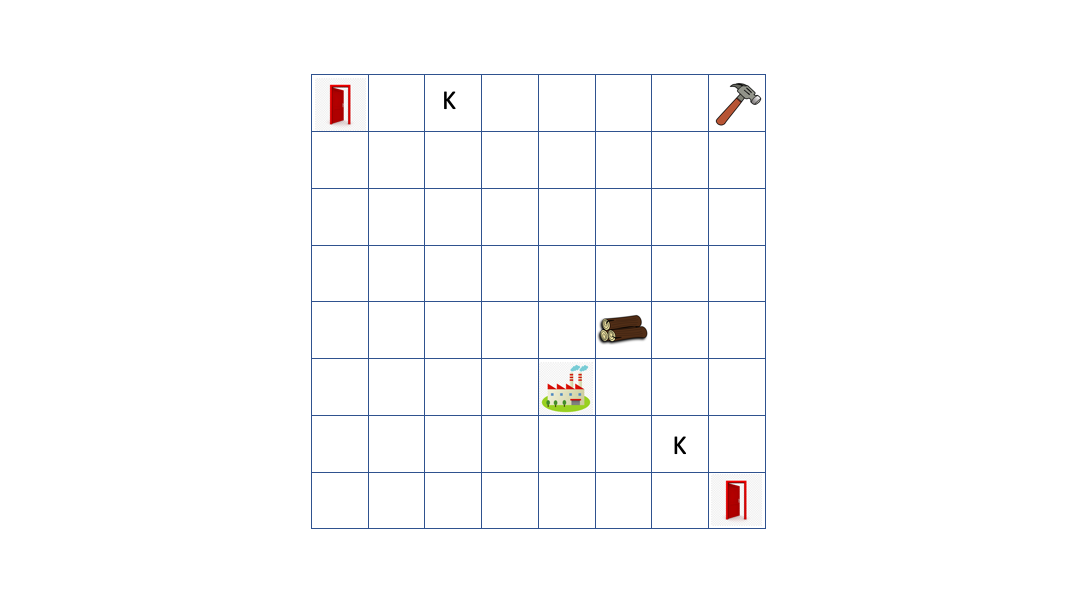

Recent work in AI safety has highlighted that in sequential decision making, objectives are often underspecified or incomplete. This gives the acting agent discretion to realize the stated objective in ways that may result in undesirable outcomes. We contend that to learn to act safely, a reinforcement learning (RL) agent should contemplate the impact of its actions on the wellbeing and agency of others in the environment, including other acting agents and reactive processes. We endow RL agents with the ability to contemplate such impact by augmenting their reward based on the expected future return of others in the environment, and we provide different criteria for characterizing that impact. We further endow these agents with the ability to weigh this impact differentially in their decision making, yielding behavior that ranges from self-centred to selfless, as demonstrated by experiments in gridworld environments.
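The abstract does not spell out the exact form of the augmented reward. The sketch below shows one plausible version. It assumes the augmented reward is a weighted sum of the agent's own reward and an aggregate of the other agents' expected future returns (values) in the next state. It also assumes `mean` and `min` as two example criteria for characterizing impact. All names here are hypothetical and come from neither the paper nor any library: `considerate_reward`, `greedy_action`, the weights `alpha_self` and `alpha_others`, and the toy `OUTCOMES` table. In practice the others' values would be estimated or learned. Here they are hard-coded so that only the weighting step is shown.

```python
from statistics import mean
from typing import Callable, Dict, Sequence, Tuple

# Hypothetical criteria for aggregating others' expected future returns.
AGGREGATORS: Dict[str, Callable[[Sequence[float]], float]] = {
    "mean": mean,  # average wellbeing of the other agents
    "min": min,    # wellbeing of the worst-off other agent
}


def considerate_reward(
    own_reward: float,
    others_values: Sequence[float],
    alpha_self: float,
    alpha_others: float,
    criterion: str = "mean",
) -> float:
    """Augment the agent's own reward with the aggregated value of others.

    Assumed form: alpha_self * r + alpha_others * F(values of others).
    alpha_others = 0 gives a purely self-centred agent; alpha_self = 0
    gives a selfless one.
    """
    impact = AGGREGATORS[criterion](others_values)
    return alpha_self * own_reward + alpha_others * impact


def greedy_action(
    outcomes: Dict[str, Tuple[float, Sequence[float]]],
    alpha_self: float,
    alpha_others: float,
    criterion: str = "mean",
) -> str:
    """Pick the action with the highest augmented reward (one-step lookahead)."""
    return max(
        outcomes,
        key=lambda a: considerate_reward(
            outcomes[a][0], outcomes[a][1], alpha_self, alpha_others, criterion
        ),
    )


# Toy outcomes: action -> (own reward, values of two other agents afterwards).
OUTCOMES = {
    "shortcut": (1.0, [0.0, 0.2]),  # faster for the agent, but harms others
    "detour": (0.8, [1.0, 0.9]),    # slightly worse for the agent, better for others
}

if __name__ == "__main__":
    # Sweep from self-centred to selfless weightings.
    for a_self, a_others in [(1.0, 0.0), (1.0, 0.1), (1.0, 0.5), (0.0, 1.0)]:
        print(a_self, a_others, greedy_action(OUTCOMES, a_self, a_others))
```

With `alpha_others` at or near zero the agent takes the self-centred shortcut. As the weight on others grows, it switches to the detour that leaves the other agents better off. Swapping `criterion="min"` makes the agent protect the worst-off agent rather than the average one.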
