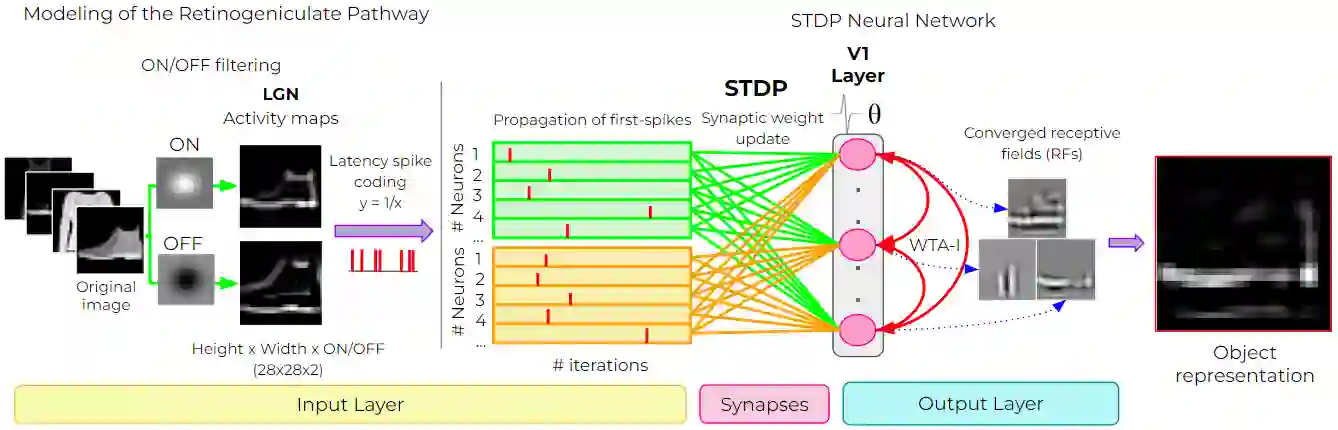

Deep neural networks have surpassed human performance in key visual challenges such as object recognition, but require large amounts of energy, computation, and memory. In contrast, spiking neural networks (SNNs) have the potential to improve both the efficiency and biological plausibility of object recognition systems. Here we present an SNN model that uses spike-latency coding and winner-take-all inhibition (WTA-I) to efficiently represent visual stimuli from the Fashion MNIST dataset. Stimuli were preprocessed with center-surround receptive fields and then fed to a layer of spiking neurons whose synaptic weights were updated using spike-timing-dependent plasticity (STDP). We investigate how the quality of the represented objects changes under different WTA-I schemes and demonstrate that a network of 150 spiking neurons can efficiently represent objects with as few as 40 spikes. Studying how core object recognition may be implemented using biologically plausible learning rules in SNNs may not only further our understanding of the brain, but also lead to novel and efficient artificial vision systems.
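
To make the pipeline described above more concrete, the following is a minimal sketch of spike-latency coding, a hard winner-take-all step, and a simplified STDP update. It is not the authors' implementation: the function names (`latency_encode`, `wta_first_spike`, `stdp_update`), the threshold, the learning rates, and the choice of a hard WTA rule with soft-bounded weights are all assumptions made for illustration, and the center-surround preprocessing stage is omitted.

```python
import numpy as np

def latency_encode(image, t_max=1.0):
    """Spike-latency code: brighter pixels spike earlier.
    Pixels with zero intensity never spike (time = inf)."""
    x = np.asarray(image, dtype=float).ravel()
    times = np.full(x.shape, np.inf)
    peak = x.max()
    if peak > 0:
        active = x > 0
        times[active] = t_max * (1.0 - x[active] / peak)
    return times

def wta_first_spike(times, weights, threshold):
    """Integrate input spikes in order of arrival. The first neuron to
    reach threshold wins; under hard WTA (an assumption here) all other
    neurons are silenced. Returns (winner, spike_time) or (None, None)."""
    potential = np.zeros(weights.shape[0])
    for idx in np.argsort(times):
        if not np.isfinite(times[idx]):
            break  # remaining inputs never spike
        potential += weights[:, idx]
        if potential.max() >= threshold:
            return int(potential.argmax()), float(times[idx])
    return None, None

def stdp_update(weights, winner, times, t_post, a_plus=0.05, a_minus=0.02):
    """Simplified STDP for the winning neuron only: inputs that spiked at
    or before the postsynaptic spike are potentiated, all others are
    depressed. Soft bounds keep weights inside [0, 1]."""
    w = weights[winner]
    pre_before_post = times <= t_post
    weights[winner] = np.where(pre_before_post,
                               w + a_plus * (1.0 - w),
                               w - a_minus * w)
    return weights

# Toy example: a 4-pixel "image" and 2 neurons (hypothetical values).
image = np.array([0.0, 0.5, 1.0, 0.25])
weights = np.array([[0.1, 0.1, 0.9, 0.1],
                    [0.1, 0.6, 0.2, 0.6]])
times = latency_encode(image)
winner, t_post = wta_first_spike(times, weights, threshold=0.8)
if winner is not None:
    weights = stdp_update(weights, winner, times, t_post)
```

Because only the earliest input spikes are needed to drive a neuron over threshold, a scheme of this kind can represent a stimulus with very few spikes, which is the efficiency argument the abstract makes. Softer WTA variants, in which more than one neuron is allowed to fire per stimulus, would replace the early return in `wta_first_spike`.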
