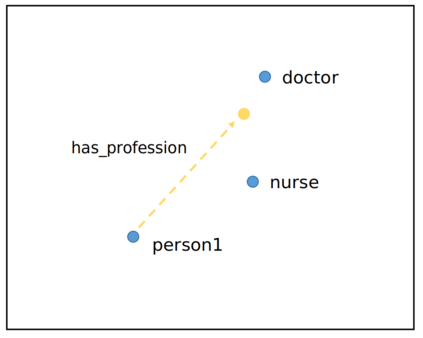

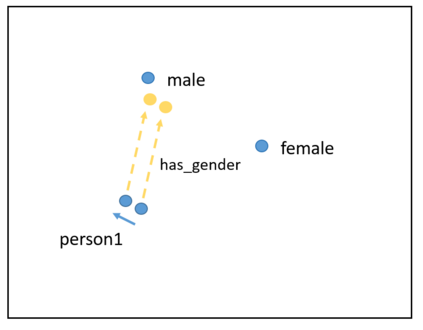

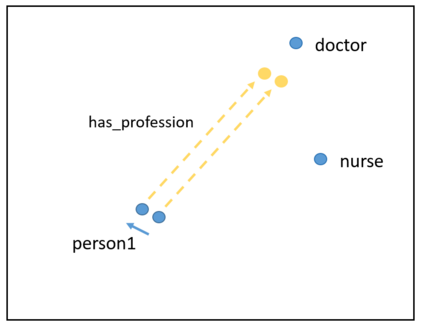

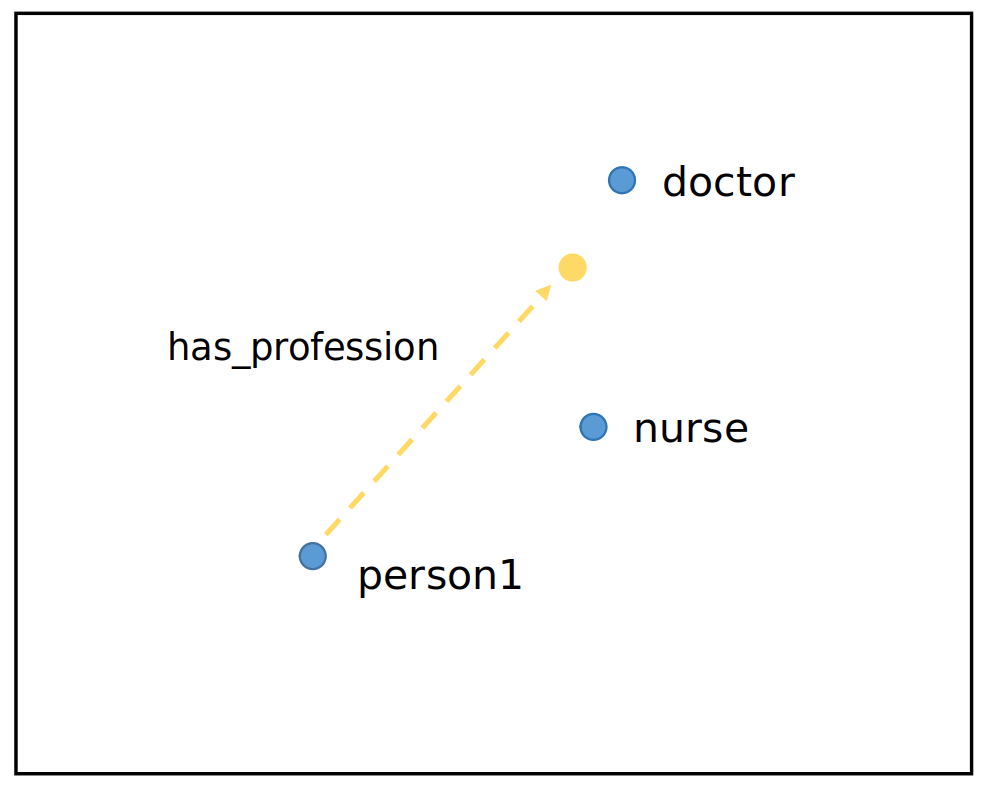

It has recently been shown that word embeddings encode social biases, with harmful effects on downstream tasks. However, until now there has been no comparable work on graph embeddings. We present the first study of social bias in knowledge graph embeddings and propose a new metric suitable for measuring such bias. We conduct experiments on Wikidata and Freebase and show that, as with word embeddings, harmful social biases related to professions are encoded in the embeddings with respect to gender, religion, ethnicity and nationality. For example, graph embeddings encode the information that men are more likely to be bankers, and women more likely to be homekeepers. As graph embeddings become increasingly widely used, we suggest that it is important that the existence of such biases is understood and that steps are taken to mitigate their impact.
