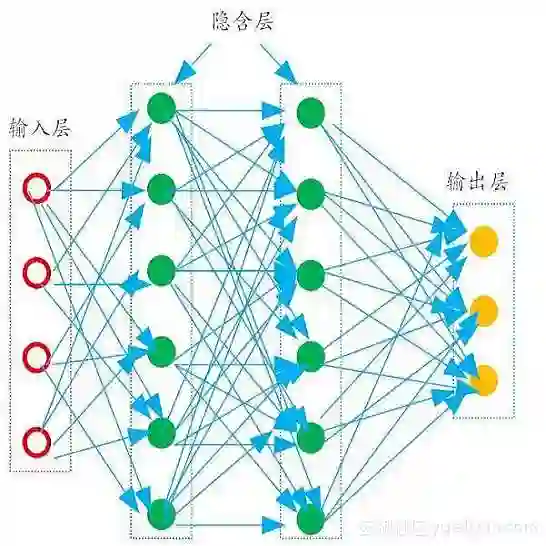

Surrogate-model-based uncertainty quantification has attracted considerable attention in recent years. Polynomial chaos expansion (PCE) and deep learning (DL) are both powerful methods for building surrogate models. However, PCE must increase its expansion order to improve surrogate accuracy, which requires more labeled data to solve for the expansion coefficients, and DL likewise needs a large amount of labeled data to train a neural network. This paper proposes a deep arbitrary polynomial chaos expansion (Deep aPCE) method to improve the trade-off between surrogate model accuracy and training data cost. On the one hand, a multilayer perceptron (MLP) is used to solve for the adaptive expansion coefficients of the arbitrary polynomial chaos expansion, which improves the accuracy of the Deep aPCE model at a lower expansion order. On the other hand, properties of the adaptive arbitrary polynomial chaos expansion are used to construct the MLP training cost function from only a small amount of labeled data and a large amount of unlabeled data, which can significantly reduce the training data cost. Four numerical examples and a practical engineering problem are used to verify the effectiveness of the Deep aPCE method.
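The two ideas in the abstract can be illustrated with a short NumPy sketch. The first is an MLP that outputs input-dependent (adaptive) coefficients for a data-driven aPCE basis. The second is a cost function that also uses unlabeled inputs. This is a minimal sketch under stated assumptions, not the authors' implementation. All function names are hypothetical. The inputs are assumed independent, so the multivariate basis is a tensor product of 1-D polynomials made orthonormal from the sample moments. The MLP is a single tanh layer with untrained random weights. The unlabeled term is an assumed penalty built from the standard orthonormal-PCE identities E[y] = c_0 and Var[y] = sum over k ≥ 1 of c_k², and it may differ from the cost function actually used in the paper.

```python
import itertools

import numpy as np


def apc_basis_1d(samples, order):
    """Monomial coefficients of polynomials of degree 0..order that are orthonormal
    with respect to the empirical distribution of `samples` (data-driven aPCE basis)."""
    V = np.vander(samples, order + 1, increasing=True)  # columns 1, x, x^2, ...
    G = V.T @ V / len(samples)                          # Hankel matrix of raw moments
    L = np.linalg.cholesky(G)                           # G = L L^T
    return np.linalg.inv(L).T                           # phi_j(x) = vander(x) @ C[:, j]


def eval_1d(C, x):
    """Evaluate all 1-D basis polynomials at points x -> shape (n, order + 1)."""
    return np.vander(x, C.shape[0], increasing=True) @ C


def multi_indices(dim, order):
    """Total-degree truncation |alpha| <= order, constant term first."""
    idx = [a for a in itertools.product(range(order + 1), repeat=dim) if sum(a) <= order]
    return sorted(idx, key=sum)


def eval_basis(Cs, alphas, X):
    """Tensor-product basis Psi_k(x) = prod_j phi_{alpha_kj}(x_j); assumes independent inputs."""
    per_dim = [eval_1d(C, X[:, j]) for j, C in enumerate(Cs)]
    Psi = np.ones((X.shape[0], len(alphas)))
    for k, alpha in enumerate(alphas):
        for j, deg in enumerate(alpha):
            Psi[:, k] *= per_dim[j][:, deg]
    return Psi


def mlp_coefficients(params, X):
    """Hypothetical one-hidden-layer MLP mapping each input x to K coefficients c(x)."""
    W1, b1, W2, b2 = params
    return np.tanh(X @ W1 + b1) @ W2 + b2


def deep_apce_predict(params, Psi, X):
    """y(x) = sum_k c_k(x) * Psi_k(x) with input-dependent (adaptive) coefficients."""
    c = mlp_coefficients(params, X)
    return np.sum(c * Psi, axis=1), c


def semi_supervised_loss(params, X_lab, y_lab, Psi_lab, X_unl, Psi_unl, lam=1.0):
    # Supervised term: mean squared error on the few labeled samples.
    y_hat, _ = deep_apce_predict(params, Psi_lab, X_lab)
    mse = np.mean((y_hat - y_lab) ** 2)
    # ASSUMED unlabeled term: for an orthonormal PCE, E[y] = c_0 and
    # Var[y] = sum_{k>=1} c_k^2; penalize departures from these identities.
    y_u, c_u = deep_apce_predict(params, Psi_unl, X_unl)
    mean_gap = (np.mean(y_u) - np.mean(c_u[:, 0])) ** 2
    var_gap = (np.var(y_u) - np.mean(np.sum(c_u[:, 1:] ** 2, axis=1))) ** 2
    return mse + lam * (mean_gap + var_gap)


# Usage: many cheap unlabeled inputs, only a handful of labeled ones.
rng = np.random.default_rng(0)
dim, order, hidden = 2, 2, 16
X_unl = rng.lognormal(mean=0.0, sigma=0.5, size=(2000, dim))  # non-Gaussian inputs
X_lab = X_unl[:20]
y_lab = np.sin(X_lab[:, 0]) + X_lab[:, 1] ** 2  # stand-in for an expensive model

Cs = [apc_basis_1d(X_unl[:, j], order) for j in range(dim)]  # basis from unlabeled data
alphas = multi_indices(dim, order)
K = len(alphas)
params = (rng.normal(scale=0.1, size=(dim, hidden)), np.zeros(hidden),
          rng.normal(scale=0.1, size=(hidden, K)), np.zeros(K))
Psi_lab, Psi_unl = eval_basis(Cs, alphas, X_lab), eval_basis(Cs, alphas, X_unl)
loss = semi_supervised_loss(params, X_lab, y_lab, Psi_lab, X_unl, Psi_unl)
print(f"K = {K} basis terms, loss = {loss:.4f}")
```

Training would then minimize `semi_supervised_loss` over `params`, for example after porting these functions to an automatic-differentiation library. In this sketch the unlabeled samples are used twice: first to estimate the raw moments that define the aPCE basis, and then in the assumed consistency term of the cost function.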
