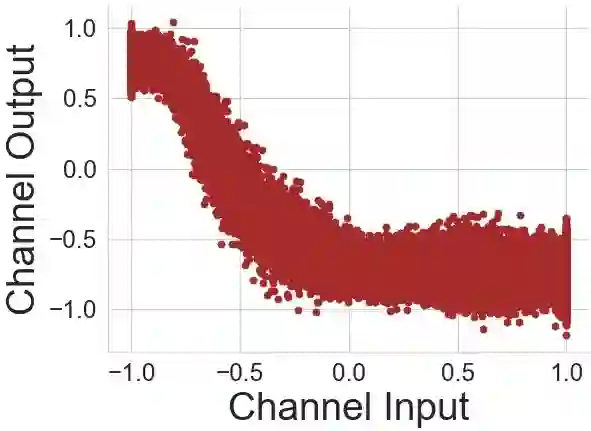

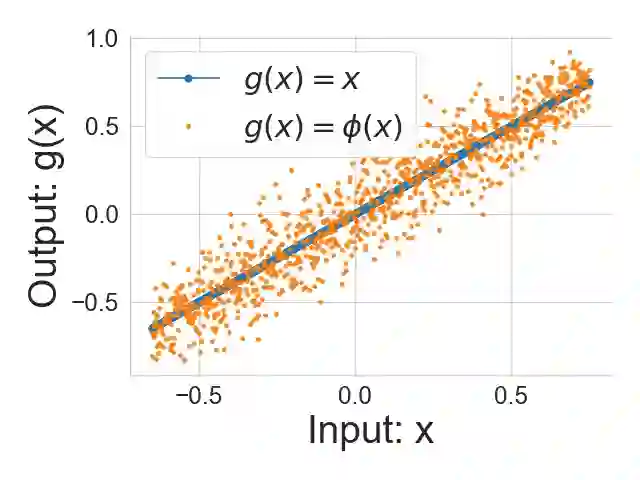

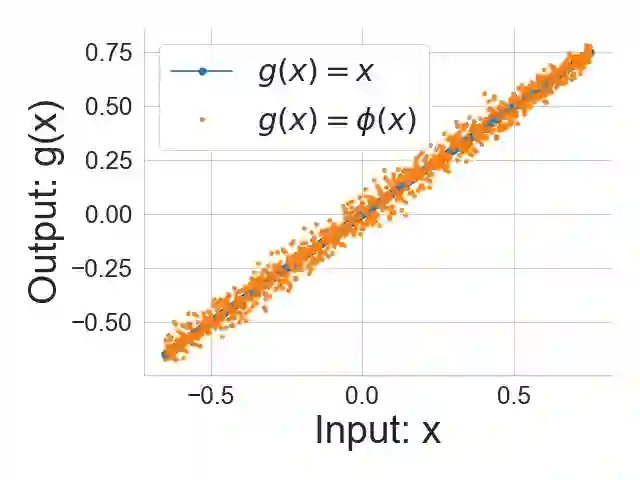

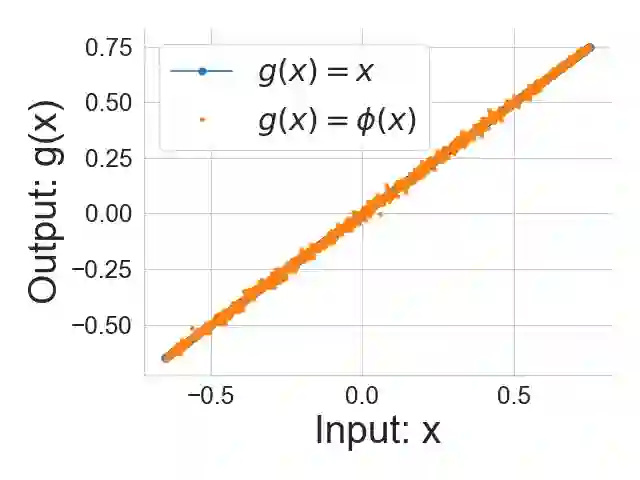

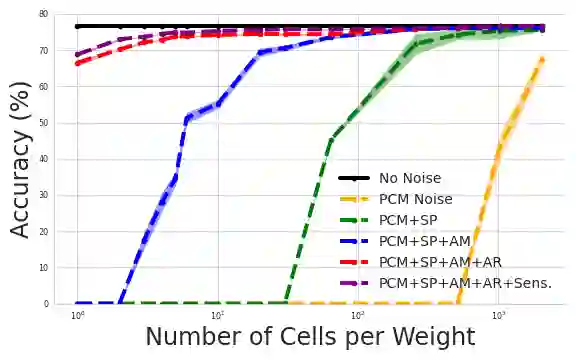

Compression and efficient storage of neural network (NN) parameters are critical for applications that run on resource-constrained devices. Although NN model compression has made significant progress, there has been considerably less investigation into the actual physical storage of NN parameters. Conventionally, model compression and physical storage are decoupled, as digital storage media with error-correcting codes (ECCs) provide robust, error-free storage. This decoupled approach is inefficient because it forces the storage system to treat every bit of the compressed model equally and to dedicate the same amount of resources to each bit. We propose a radically different approach that (i) employs analog memories to maximize the capacity of each memory cell and (ii) jointly optimizes model compression and physical storage to maximize memory utility. We investigate the challenges of analog storage by studying model storage on phase-change memory (PCM) arrays, and we develop a variety of robust coding strategies for NN model storage. We demonstrate the efficacy of our approach on the MNIST, CIFAR-10, and ImageNet datasets for both existing and novel compression methods. Compared to conventional error-free digital storage, our method has the potential to reduce memory size by an order of magnitude without significantly compromising the stored model's accuracy.
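To make the point about treating every bit equally more concrete, here is a minimal Python sketch. It assumes a simple 8-bit uniform quantizer over weights in [-1, 1], which is an illustrative choice and not the compression or coding scheme used in this work. The sketch flips one bit position at a time in every stored code and measures the resulting weight error. The names `NUM_BITS`, `quantize`, `dequantize`, and `bit_flip_error` are hypothetical helpers introduced only for this example.

```python
import numpy as np

# Hypothetical toy setup: 8-bit uniform quantization of weights in [-1, 1].
# This is NOT the paper's scheme; it only illustrates unequal bit importance.
NUM_BITS = 8
LEVELS = 2 ** NUM_BITS - 1


def quantize(w: np.ndarray) -> np.ndarray:
    """Map weights in [-1, 1] to unsigned integer codes in [0, LEVELS]."""
    w = np.clip(w, -1.0, 1.0)
    return np.round((w + 1.0) / 2.0 * LEVELS).astype(np.int64)


def dequantize(codes: np.ndarray) -> np.ndarray:
    """Inverse of quantize: integer codes back to weights in [-1, 1]."""
    return codes / LEVELS * 2.0 - 1.0


def bit_flip_error(codes: np.ndarray, bit: int) -> float:
    """Mean absolute weight error caused by flipping `bit` in every stored code."""
    flipped = codes ^ (1 << bit)  # XOR toggles exactly one bit position
    return float(np.mean(np.abs(dequantize(flipped) - dequantize(codes))))


rng = np.random.default_rng(0)
weights = rng.normal(scale=0.3, size=10_000)  # synthetic weights, for illustration only
codes = quantize(weights)

# The error doubles with each more significant bit position.
for bit in range(NUM_BITS):
    print(f"bit {bit}: mean |error| = {bit_flip_error(codes, bit):.4f}")
```

Under this toy quantizer, an error in the most significant bit perturbs a weight 128 times more than an error in the least significant bit. A conventional ECC nevertheless spends the same redundancy protecting both, which is the inefficiency that motivates jointly optimizing compression and storage.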

翻译:神经网络(NN)参数的压缩和高效储存对于在资源限制装置上运行的应用至关重要。虽然NN模型压缩已经取得重大进展,但在实际实际储存NN参数方面,调查次数却少得多。在公约方面,模型压缩和物理储存被拆解,因为带有错误校正代码的数字存储介质提供了强力的无误存储代码。这种脱钩方法效率低下,因为它迫使存储对压缩模型的每一部分进行同等处理,并将同样数量的资源投入到每一部分。我们提议了一种完全不同的方法:(一) 使用模拟记忆来最大限度地发挥每个记忆细胞的能力,和(二) 联合优化模型压缩和物理储存以最大限度地发挥记忆的效用。我们通过研究阶段改变存储(PCM)阵列的模型存储和为NNM模型存储制定各种稳健的编码战略,来调查模拟存储的挑战。我们展示了我们在MNIST、CIFAR-10和图像网络数据集方面的做法对现有和新压缩方法的有效性。与常规的无误存储模式相比,我们的方法具有大幅降低一个记忆质量的潜力。