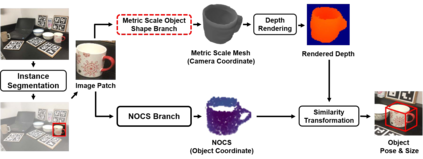

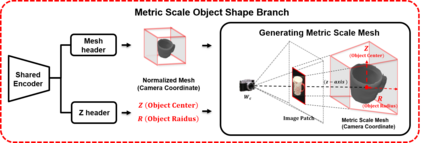

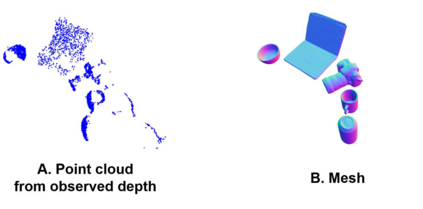

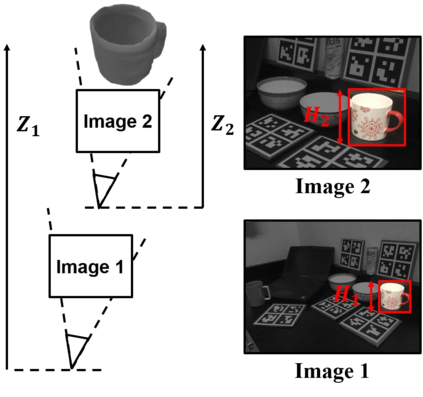

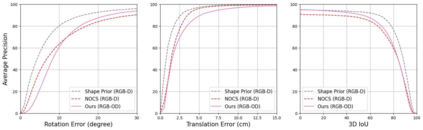

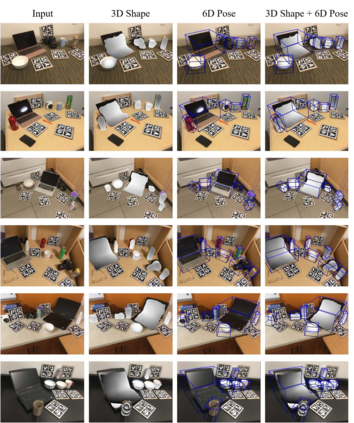

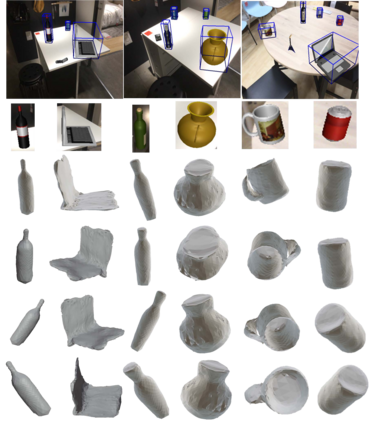

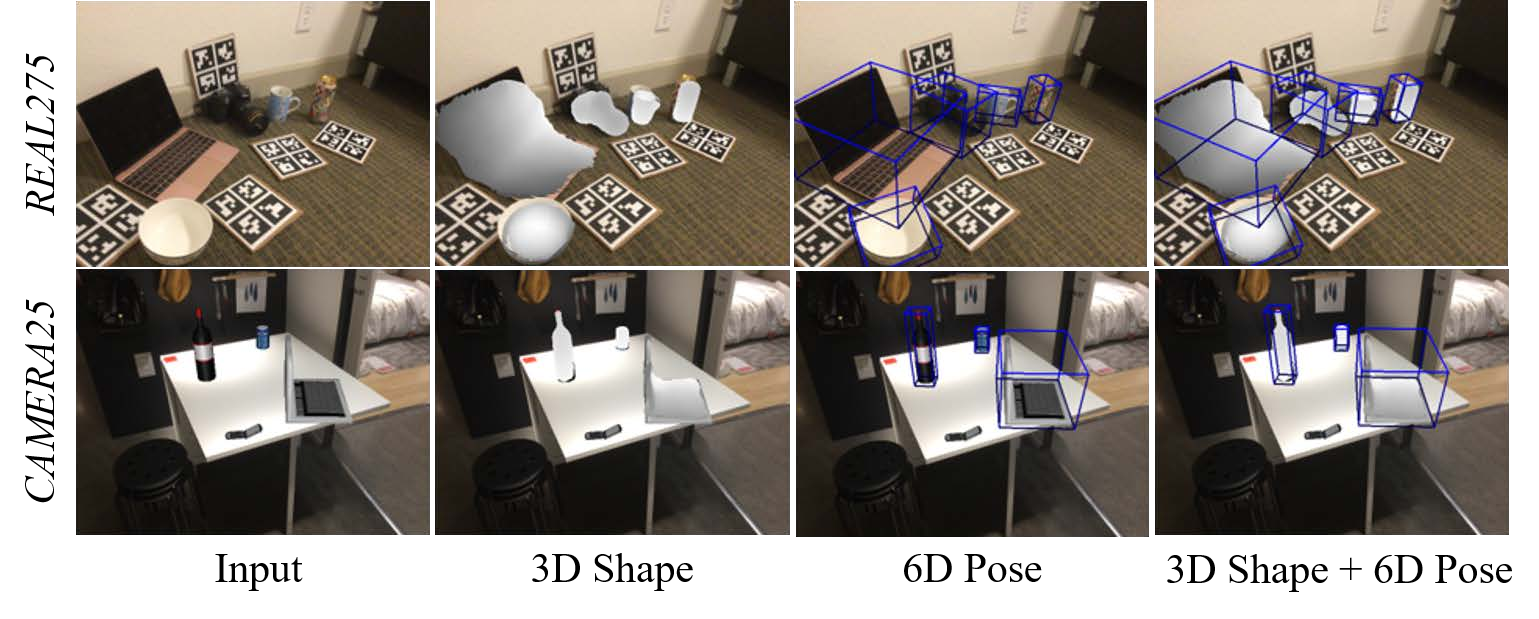

Advances in deep learning have led to accurate object detection in 2D images. However, these 2D perception methods cannot provide complete 3D world information. Meanwhile, advanced 3D shape estimation approaches focus on the shape itself without considering metric scale, so they cannot determine the accurate location and orientation of objects. To tackle this problem, we propose a framework that jointly estimates a metric-scale shape and pose from a single RGB image. Our framework has two branches: the Metric Scale Object Shape (MSOS) branch and the Normalized Object Coordinate Space (NOCS) branch. The MSOS branch estimates the metric-scale shape observed in camera coordinates. The NOCS branch predicts the NOCS map and performs a similarity transformation with the depth map rendered from the predicted metric-scale mesh to obtain the 6D pose and size. Additionally, we introduce Normalized Object Center Estimation (NOCE) to estimate the geometrically aligned distance from the camera to the object center. We validated our method on both synthetic and real-world datasets to evaluate category-level object pose and shape.
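The similarity transformation step in the NOCS branch can be illustrated with a small sketch. The abstract does not say which solver is used; the code below assumes the closed-form least-squares alignment of Umeyama (1991), a common choice for this kind of problem. The function name `umeyama_similarity` and the input layout (two `N x 3` arrays of corresponding points) are illustrative assumptions, not taken from the paper.

```python
import numpy as np


def umeyama_similarity(src, dst):
    """Closed-form least-squares fit of dst ~= scale * R @ src + t.

    Hypothetical helper for illustration only:
    src -- (N, 3) points in normalized object coordinates (e.g. from a NOCS map)
    dst -- (N, 3) corresponding metric points in the camera frame
           (e.g. back-projected from a rendered depth map)
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    n = src.shape[0]

    # Center both point sets.
    mu_src = src.mean(axis=0)
    mu_dst = dst.mean(axis=0)
    src_c = src - mu_src
    dst_c = dst - mu_dst

    # Cross-covariance between target and source, then its SVD.
    cov = dst_c.T @ src_c / n
    U, D, Vt = np.linalg.svd(cov)

    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    S = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[2, 2] = -1.0

    R = U @ S @ Vt
    var_src = (src_c ** 2).sum() / n
    scale = np.trace(np.diag(D) @ S) / var_src
    t = mu_dst - scale * R @ mu_src
    return scale, R, t
```

Under these assumptions, `scale` plays the role of the object size factor, while `R` and `t` give the rotation and translation of the 6D pose. Real predictions are noisy, so a robust wrapper such as RANSAC around this solver would usually be needed; that part is omitted here.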
