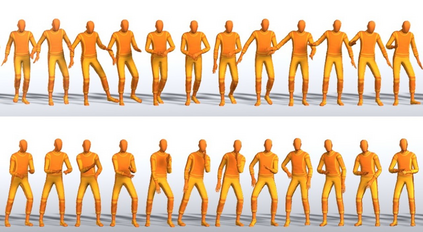

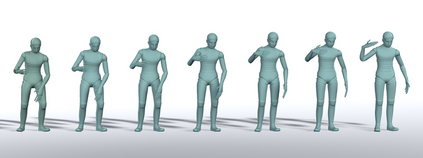

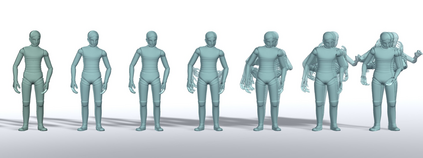

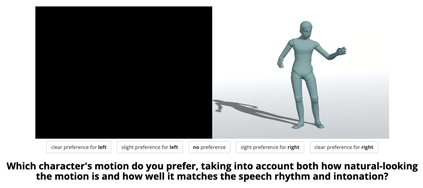

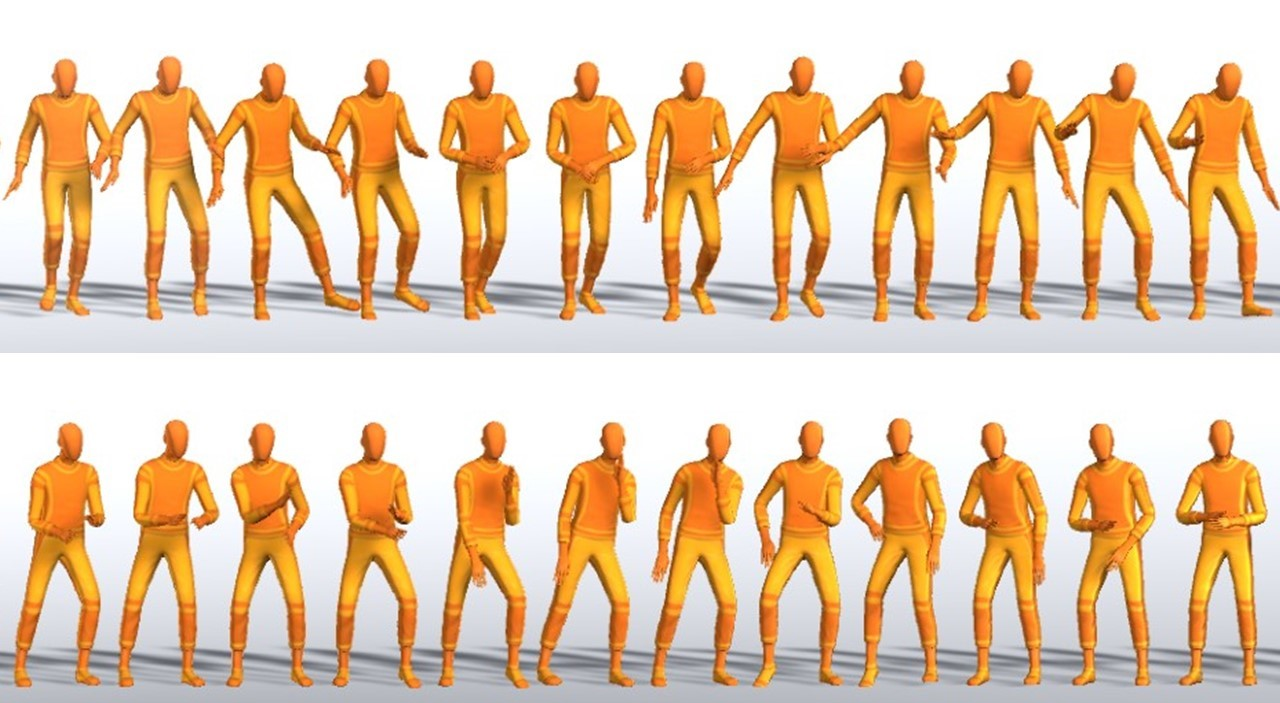

Diffusion models have experienced a surge of interest as highly expressive yet efficiently trainable probabilistic models. We show that these models are an excellent fit for synthesising human motion that co-occurs with audio, for example co-speech gesticulation, since motion is complex and highly ambiguous given audio, calling for a probabilistic description. Specifically, we adapt the DiffWave architecture to model 3D pose sequences, putting Conformers in place of dilated convolutions for improved accuracy. We also demonstrate control over motion style, using classifier-free guidance to adjust the strength of the stylistic expression. Gesture-generation experiments on the Trinity Speech-Gesture and ZeroEGGS datasets confirm that the proposed method achieves top-of-the-line motion quality, with distinctive styles whose expression can be made more or less pronounced. We also synthesise dance motion and path-driven locomotion using the same model architecture. Finally, we extend the guidance procedure to perform style interpolation in a manner that is appealing for synthesis tasks and has connections to product-of-experts models, a contribution we believe is of independent interest. Video examples are available at https://www.speech.kth.se/research/listen-denoise-action/

翻译:作为高度直观的、高效的、可训练的概率模型,扩散模型已经引起了人们的极大兴趣。我们表明,这些模型非常适合合成人类运动,这种运动与音频(例如共同语音)同步进行,因为运动是复杂和高度模糊的,给听力要求一种概率性描述。具体地说,我们将DiffWave结构调整为3D型结构构成序列,将变形变形变形变形变形,以提高准确性。我们还展示对运动风格的控制,使用无分类指导来调整气态表达的强度。关于Trinity Speaking-Gesture和ZeroGGGS数据集的策略生成实验证实,拟议方法达到了最上线运动质量,其表达方式可以越来越明显。我们还利用同样的模型合成舞蹈运动和路径驱动的变形变形变形变形变形变形变形。最后,我们扩展了指导程序来执行风格变形变形,以吸引合成任务和链接的方式进行调。我们在MARV/Exexexexexexexeximational 模型上拥有链接。