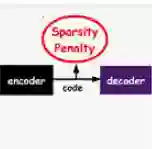

Deep Convolutional Sparse Coding (D-CSC) is a framework reminiscent of deep convolutional neural networks (DCNNs). Because it omits the learning of the dictionaries, one can more transparently analyse the role of the activation function and its ability to recover activation paths through the layers. Papyan, Romano, and Elad analysed such an architecture, demonstrated its relationship with DCNNs, and proved conditions under which D-CSC is guaranteed to recover specific activation paths. A technical innovation of their work shows that the efficacy of the ReLU nonlinear activation function in a DCNN can be understood through a new variant of tensor sparsity, referred to as stripe-sparsity. Using this, they proved that representations with an activation density proportional to the ambient dimension of the data are recoverable. We extend their uniform guarantees to a modified model and prove that, with high probability, the true activations can be recovered at a greater density of activations per layer. Our extension follows from incorporating the prior work of Schnass and Vandergheynst on one-step thresholding.
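The link between a ReLU forward pass and recovering activation paths can be illustrated with a small NumPy sketch. It assumes a two-layer sparse model x = D1 γ1, γ1 = D2 γ2 with nonnegative coefficients, and estimates each layer with a single ReLU thresholding step, γ̂i = ReLU(Diᵀ γ̂(i-1) − bi). This is a hedged illustration, not the authors' construction. The helper names (`layered_thresholding`, `stripe_norm0`, `demo`), the bias values, and the dense orthogonal and permutation dictionaries are hypothetical choices made so that the support check is guaranteed to succeed. The clipped-boundary stripe count is also an assumption about how stripes are handled at the signal edges. The paper's setting uses convolutional dictionaries with nonzero coherence, where recovery holds only under the stated sparsity conditions.

```python
import numpy as np


def relu_threshold(z, bias):
    """Nonnegative soft thresholding ReLU(z - bias), used as the layer nonlinearity."""
    return np.maximum(z - bias, 0.0)


def layered_thresholding(x, dictionaries, biases):
    """Estimate every layer's representation with one thresholding step per layer.

    Layer i computes gamma_i = ReLU(D_i^T gamma_{i-1} - b_i), starting from
    gamma_0 = x. This is the forward pass of a ReLU network whose weights are
    the transposed dictionaries (hypothetical helper, illustration only).
    """
    estimates = []
    gamma = x
    for D, b in zip(dictionaries, biases):
        gamma = relu_threshold(D.T @ gamma, b)
        estimates.append(gamma)
    return estimates


def same_support(estimate, truth):
    """True when estimate and truth are nonzero at exactly the same entries."""
    return bool(np.array_equal(estimate != 0, truth != 0))


def stripe_norm0(gamma, filter_len):
    """Stripe l0,inf count of a 1-D convolutional representation (sketch).

    gamma has shape (N, m): N spatial positions, m channels. The stripe centred
    at position i spans positions i-(n-1) .. i+(n-1) over all channels, with
    boundaries clipped (an assumption). Returns the largest nonzero count
    found in any stripe.
    """
    nonzeros_per_position = np.count_nonzero(gamma, axis=1)
    reach = filter_len - 1
    return max(
        int(nonzeros_per_position[max(0, i - reach): i + reach + 1].sum())
        for i in range(gamma.shape[0])
    )


def demo(seed=0, dim=64, k=4, biases=(0.25, 0.25)):
    """Build a toy two-layer model and check that both supports are recovered."""
    rng = np.random.default_rng(seed)
    # Layer-1 dictionary: an orthogonal matrix from a QR factorisation (assumption).
    D1, _ = np.linalg.qr(rng.standard_normal((dim, dim)))
    # Layer-2 dictionary: a permutation matrix, so gamma_1 = D2 @ gamma_2 stays
    # nonnegative and the ReLU does not discard any true activation.
    D2 = np.eye(dim)[:, rng.permutation(dim)]
    # Deepest layer: k active entries with values in [1, 2].
    gamma2 = np.zeros(dim)
    gamma2[rng.choice(dim, size=k, replace=False)] = rng.uniform(1.0, 2.0, size=k)
    gamma1 = D2 @ gamma2
    x = D1 @ gamma1
    est1, est2 = layered_thresholding(x, [D1, D2], list(biases))
    return [same_support(est1, gamma1), same_support(est2, gamma2)]
```

Soft thresholding shrinks the recovered magnitudes by the accumulated biases, so the check compares supports (the activation path) rather than values.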
