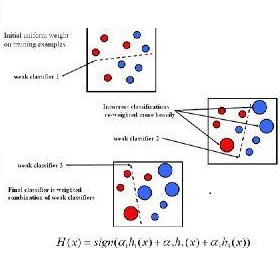

The design of deep graph models remains an open problem, and the crucial question is how to efficiently explore and exploit knowledge from different hops of neighbors. In this paper, we propose a novel RNN-like deep graph neural network architecture that incorporates AdaBoost into the computation of the network. The proposed graph convolutional network, called AdaGCN (Adaboosting Graph Convolutional Network), efficiently extracts knowledge from the high-order neighbors of the current nodes and then integrates knowledge from different hops of neighbors into the network in an AdaBoost manner. Unlike other graph neural networks that directly stack many graph convolution layers, AdaGCN shares the same base neural network architecture among all "layers" and is recursively optimized, similar to an RNN. We also theoretically establish the connection between AdaGCN and existing graph convolutional methods, which clarifies the benefits of our proposal. Finally, extensive experiments demonstrate consistent state-of-the-art prediction performance on graphs across different label rates, as well as the computational advantage of AdaGCN. Code is available at https://github.com/datake/AdaGCN.
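The idea of combining hop-wise predictions in an AdaBoost manner can be illustrated with a minimal NumPy sketch. It is not the authors' implementation (see the repository above for that). It assumes a discrete SAMME-style weight update and replaces the shared base neural network with a weighted nearest-centroid classifier to keep the example short. The function names `normalized_adjacency`, `fit_centroids`, `predict_centroids`, and `boosted_hops` are hypothetical.

```python
import numpy as np

def normalized_adjacency(adj):
    """Symmetrically normalize A + I (standard GCN-style preprocessing)."""
    a = adj + np.eye(adj.shape[0])
    d_inv_sqrt = 1.0 / np.sqrt(a.sum(axis=1))
    return a * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]

def fit_centroids(x, y, w, n_classes):
    """Weighted class centroids: a stand-in weak learner (assumption).
    Requires at least one training sample per class."""
    cents = np.zeros((n_classes, x.shape[1]))
    for k in range(n_classes):
        mask = y == k
        cents[k] = np.average(x[mask], axis=0, weights=w[mask])
    return cents

def predict_centroids(cents, x):
    """Assign each row of x to the nearest centroid."""
    dist = ((x[:, None, :] - cents[None, :, :]) ** 2).sum(axis=2)
    return dist.argmin(axis=1)

def boosted_hops(adj, x, y, train_idx, n_classes, n_hops=3):
    """Fit one weak learner per hop and combine them with SAMME-style votes."""
    a_hat = normalized_adjacency(adj)
    w = np.full(len(train_idx), 1.0 / len(train_idx))  # sample weights
    votes = np.zeros((x.shape[0], n_classes))
    h = x.astype(float)
    for _ in range(n_hops):
        h = a_hat @ h  # features aggregated from one more hop of neighbors
        cents = fit_centroids(h[train_idx], y[train_idx], w, n_classes)
        pred = predict_centroids(cents, h)
        miss = pred[train_idx] != y[train_idx]
        # Weighted training error, clipped to keep the logarithm finite.
        err = np.clip(w[miss].sum(), 1e-10, 1 - 1e-10)
        alpha = np.log((1 - err) / err) + np.log(n_classes - 1)
        votes[np.arange(x.shape[0]), pred] += alpha
        # Up-weight misclassified training nodes for the next hop.
        w = w * np.exp(alpha * miss)
        w /= w.sum()
    return votes.argmax(axis=1)
```

Each loop iteration reuses the same learner type on features propagated one hop further, which mirrors the "shared base architecture, recursively optimized" description. The propagation is a plain matrix product, so no deep stack of graph convolution layers is built.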
