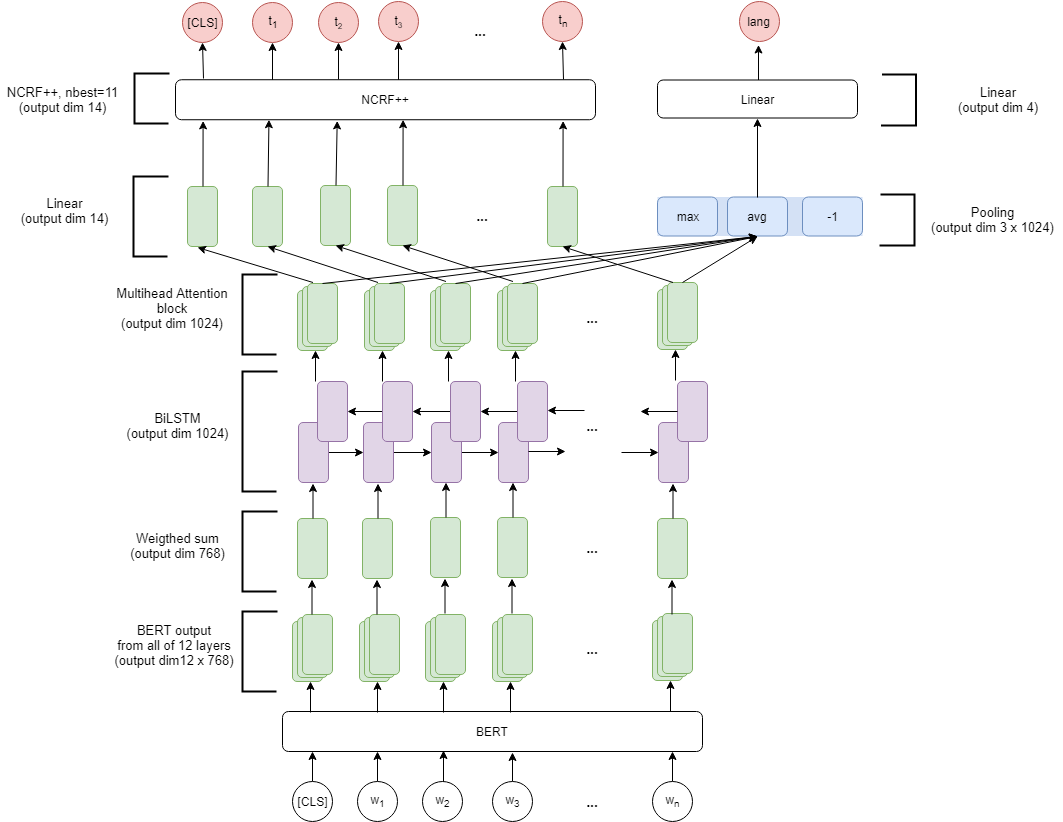

In this paper we tackle the multilingual named entity recognition task. We use the BERT language model as an embedder, with a bidirectional recurrent network, attention, and NCRF on top. We apply multilingual BERT only as an embedder, without any fine-tuning. We test our model on the dataset of the BSNLP shared task, which consists of texts in Bulgarian, Czech, Polish, and Russian.
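The abstract does not describe how the CRF layer on top of the network turns per-token scores into a tag sequence. As an illustration only, the sketch below shows standard Viterbi decoding for a linear-chain CRF in plain Python. The function name `viterbi_decode`, the three-tag scheme, and all score values are assumptions made for this example; they are not taken from the paper or from the NCRF implementation.

```python
from typing import List, Sequence


def viterbi_decode(emissions: Sequence[Sequence[float]],
                   transitions: Sequence[Sequence[float]]) -> List[int]:
    """Return the highest-scoring tag sequence for a linear-chain CRF.

    emissions[t][k]   : score of tag k at token t (in a setup like the one
                        described, these would come from the recurrent and
                        attention layers over frozen BERT embeddings)
    transitions[j][k] : score of moving from tag j to tag k
    """
    n_tags = len(transitions)
    # best[k] = best score of any path that ends in tag k at the current token
    best = list(emissions[0])
    backpointers = []
    for t in range(1, len(emissions)):
        new_best, pointers = [], []
        for k in range(n_tags):
            # pick the previous tag that maximises the path score into tag k
            prev = max(range(n_tags), key=lambda j: best[j] + transitions[j][k])
            pointers.append(prev)
            new_best.append(best[prev] + transitions[prev][k] + emissions[t][k])
        best = new_best
        backpointers.append(pointers)
    # start from the best final tag and follow the backpointers to the start
    tag = max(range(n_tags), key=lambda k: best[k])
    path = [tag]
    for pointers in reversed(backpointers):
        tag = pointers[tag]
        path.append(tag)
    path.reverse()
    return path


# Hypothetical tag ids: 0 = O, 1 = B-PER, 2 = I-PER (made-up scores).
emissions = [
    [0.1, 2.0, 0.0],
    [0.5, 0.0, 1.0],
    [2.0, 0.0, 0.3],
]
transitions = [
    [0.0, 0.0, -10.0],  # O -> I-PER is heavily penalised
    [0.0, 0.0, 1.0],    # B-PER -> I-PER is encouraged
    [0.0, 0.0, 0.5],
]
print(viterbi_decode(emissions, transitions))  # [1, 2, 0]
```

The transition scores are what let a CRF layer discourage invalid label sequences, such as an I-PER tag directly after O, which independent per-token classification cannot do.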

