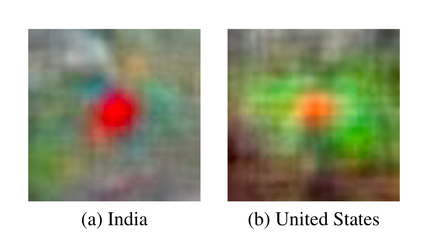

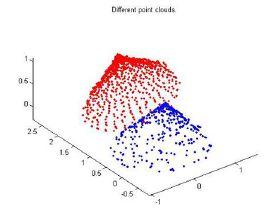

From face recognition on smartphones to automatic routing in self-driving cars, machine vision algorithms lie at the core of these features. These systems solve image-based tasks by identifying and understanding objects, and then make decisions from that information. However, errors in datasets are often carried into, or even magnified by, the algorithms trained on them, at times resulting in failures such as labelling Black people as gorillas and misrepresenting ethnicities in search results. This paper traces errors in datasets and their impacts, revealing that a flawed dataset can result from limited categories, incomplete sourcing and poor classification.

翻译:这些系统通过识别和理解对象,从而解决基于图像的任务,然后从这些信息中作出决定。然而,数据集中的错误通常在算法中引起甚至放大,有时导致诸如承认黑人是大猩猩和在搜索结果中歪曲族裔等问题。本文跟踪数据集中的错误及其影响,揭示出有缺陷的数据集可能是有限类别、不全面的来源和分类不完善的结果。