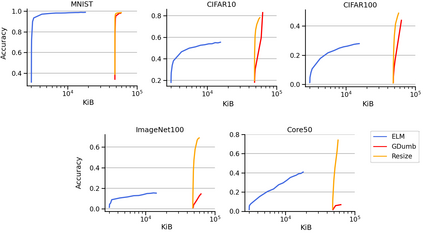

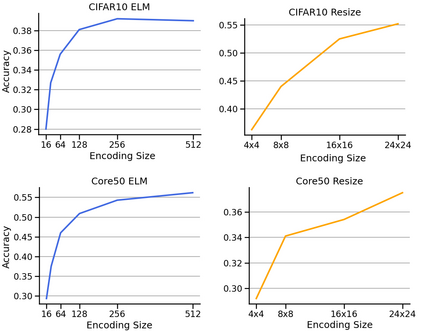

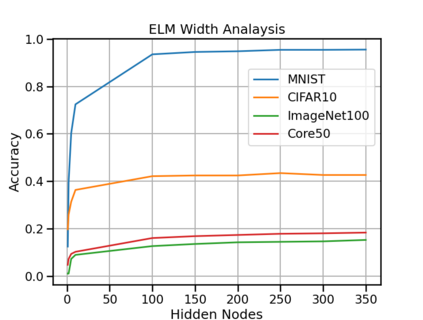

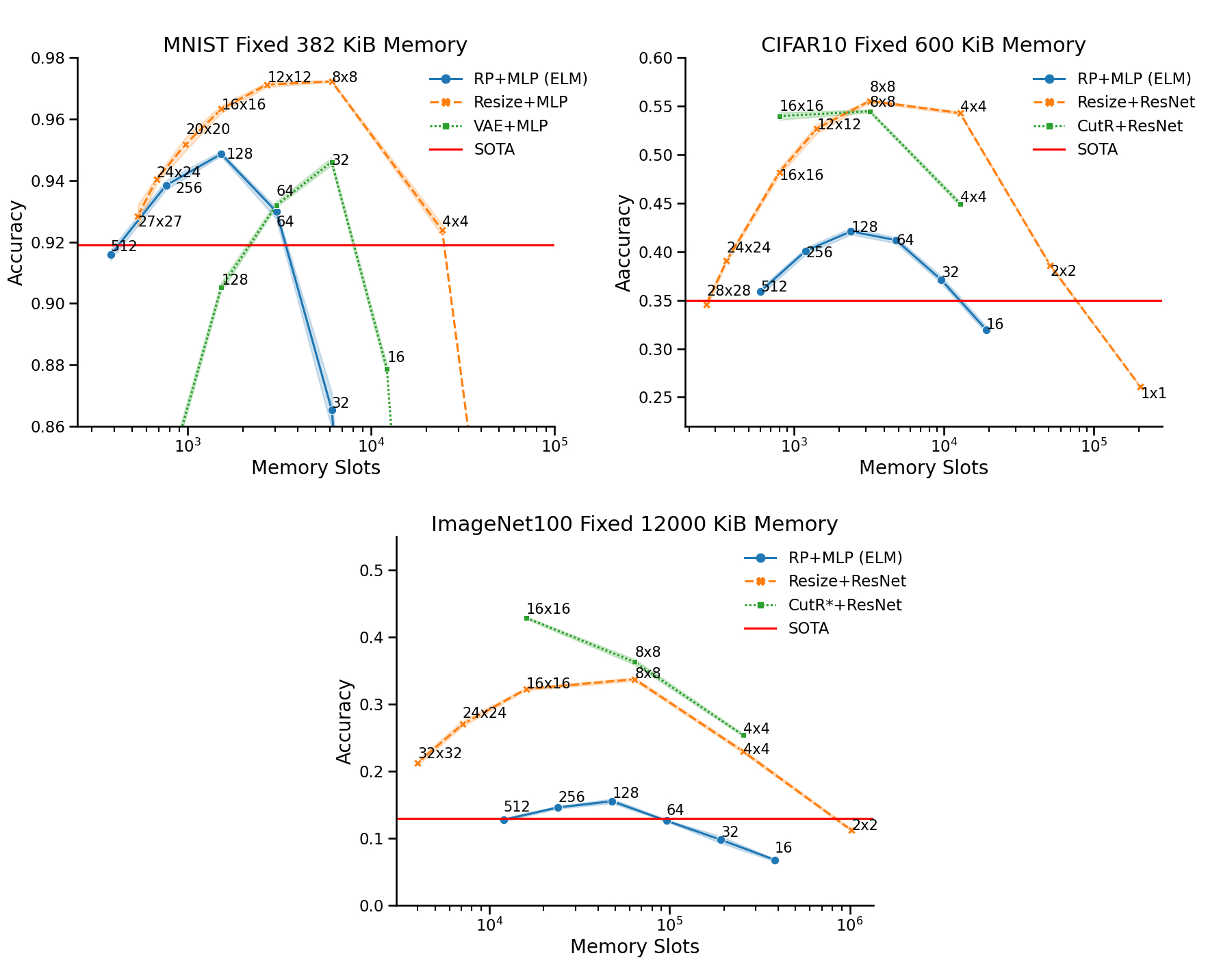

The design of machines and algorithms capable of learning in a dynamically changing environment has become an increasingly topical problem as the size and heterogeneity of the data available to learning systems grow. As a consequence, the key issue of Continual Learning has become that of addressing the stability-plasticity dilemma of connectionist systems, which need to adapt their model without forgetting previously acquired knowledge. Within this context, rehearsal-based methods, i.e., solutions in which the learner exploits memory to revisit past data, have proven to be very effective, leading to state-of-the-art performance. In our study, we propose an analysis of the memory quantity/quality trade-off, adopting various data reduction approaches to increase the number of instances storable in memory. In particular, we investigate complex instance compression techniques such as deep encoders, but also trivial approaches such as image resizing and linear dimensionality reduction. Our findings suggest that the optimal trade-off is severely skewed toward instance quantity: rehearsal approaches with several heavily compressed instances easily outperform state-of-the-art approaches with the same amount of memory at their disposal. Further, in high-memory configurations, deep approaches extracting spatial structure combined with extreme resizing (of the order of $8\times8$ images) yield the best results, while in memory-constrained configurations, where deep approaches cannot be used due to their memory requirements in training, Extreme Learning Machines (ELM) offer a clear advantage.
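The quantity/quality trade-off described above can be made concrete with a little arithmetic. The following is a minimal sketch, not the authors' implementation: it assumes a hypothetical rehearsal buffer sized for 200 uncompressed $32\times32$ RGB images (the abstract names neither a dataset nor a budget) and uses block averaging as one plausible form of image resizing. The names `instances_in_budget`, `downsample_mean`, and `BUDGET` are illustrative only.

```python
def instances_in_budget(budget_bytes, side, channels=3, bytes_per_value=1):
    """Number of square images with the given side length that fit in the buffer."""
    per_instance = side * side * channels * bytes_per_value
    return budget_bytes // per_instance


def downsample_mean(image, factor):
    """Shrink a 2D grayscale image (list of rows) by averaging factor x factor blocks.

    Block averaging is an assumed stand-in for the resizing step; the source
    does not say which interpolation is used.
    """
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image sides must be divisible by factor")
    out = []
    for r in range(0, height, factor):
        row = []
        for c in range(0, width, factor):
            block = [image[r + i][c + j] for i in range(factor) for j in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# Hypothetical budget: the bytes needed for 200 uncompressed 32x32 RGB images.
BUDGET = 200 * 32 * 32 * 3

# Instances storable at each resolution under the same budget.
counts = {side: instances_in_budget(BUDGET, side) for side in (32, 16, 8)}

# A synthetic 32x32 grayscale image reduced to 8x8 (factor 4).
image = [[(r + c) % 256 for c in range(32)] for r in range(32)]
small = downsample_mean(image, 4)
```

Under these assumptions, halving the side length quadruples the number of storable instances, so the same buffer holds 16 times as many $8\times8$ images as $32\times32$ ones. Whether the extra instances compensate for the lost detail is the empirical question the study addresses.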

