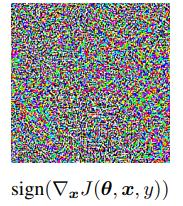

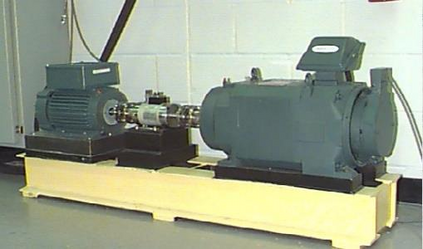

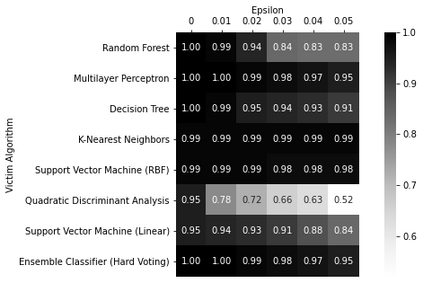

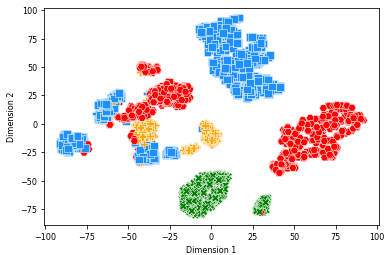

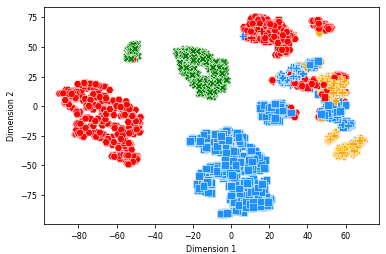

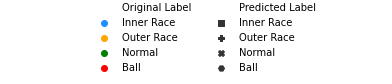

Condition-based maintenance (CBM) strategies use machine learning models to assess the health of systems from data collected in the physical environment. These models, however, are vulnerable to adversarial attacks: a malicious adversary can manipulate the collected data to deceive the model and degrade the CBM system's performance. Adversarial machine learning techniques introduced in the computer vision domain can be used to mount stealthy attacks on CBM systems by adding perturbations to the data that confuse trained models. Because the attacks are stealthy, they are difficult to detect and detection is delayed. This paper introduces adversarial machine learning in the CBM domain. A case study shows how adversarial machine learning can be used to attack CBM capabilities: adversarial samples are crafted using the Fast Gradient Sign Method (FGSM), and the performance of a CBM system under attack is investigated. The results reveal that CBM systems are vulnerable to adversarial machine learning attacks and that defense strategies need to be considered.
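To make the attack concrete, the following is a minimal sketch of the Fast Gradient Sign Method, which perturbs each input as x_adv = x + eps * sign(grad_x J(x, y)), where J is the model's loss. The abstract does not specify the model or dataset used in the case study, so everything here is an assumption made for illustration: a logistic-regression health classifier, synthetic eight-channel "sensor" data, and the hypothetical helper names `train_logreg`, `fgsm`, and `accuracy`. It is not the paper's experimental setup and does not reproduce its results.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_logreg(X, y, lr=0.1, epochs=500):
    """Fit a logistic-regression classifier with plain gradient descent."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = sigmoid(X @ w + b)
        w -= lr * (X.T @ (p - y)) / len(y)
        b -= lr * np.mean(p - y)
    return w, b

def fgsm(X, y, w, b, eps):
    """Craft adversarial samples: x_adv = x + eps * sign(dJ/dx).

    For logistic regression with cross-entropy loss J,
    the input gradient is dJ/dx = (p - y) * w.
    """
    p = sigmoid(X @ w + b)
    grad_x = (p - y)[:, None] * w[None, :]
    return X + eps * np.sign(grad_x)

def accuracy(X, y, w, b):
    preds = (sigmoid(X @ w + b) >= 0.5).astype(float)
    return float(np.mean(preds == y))

# Synthetic stand-in for condition-monitoring data (assumption):
# label 0 = healthy, label 1 = faulty, 8 sensor channels each.
rng = np.random.default_rng(0)
n = 500
healthy = rng.normal(0.0, 1.0, size=(n, 8))
faulty = rng.normal(1.0, 1.0, size=(n, 8))
X = np.vstack([healthy, faulty])
y = np.concatenate([np.zeros(n), np.ones(n)])

w, b = train_logreg(X, y)
eps = 0.5  # perturbation budget per sensor channel (assumed value)
X_adv = fgsm(X, y, w, b, eps)

clean_acc = accuracy(X, y, w, b)
adv_acc = accuracy(X_adv, y, w, b)
print(f"accuracy on clean data:       {clean_acc:.3f}")
print(f"accuracy on adversarial data: {adv_acc:.3f}")
```

Each sensor reading moves by at most `eps`, which is what makes the manipulation hard to spot by inspecting the data, yet every sample is pushed in the direction that most increases the classifier's loss. This sketch is a white-box attack: it assumes the adversary knows the model parameters `w` and `b`.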
