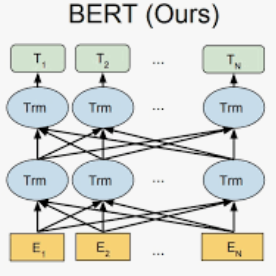

Transformer-based pre-trained language models such as BERT and its variants have recently achieved promising performance on various natural language processing (NLP) tasks. However, the conventional paradigm constructs the backbone purely by stacking manually designed global self-attention layers, which introduces inductive bias and thus leads to sub-optimal results. In this work, we make the first attempt to automatically discover novel pre-trained language model (PLM) backbones from scratch, on a flexible search space containing the most fundamental operations. Specifically, we propose a well-designed search space that (i) contains primitive math operations at the intra-layer level to explore novel attention structures, and (ii) leverages convolution blocks as a supplement to attention at the inter-layer level to better learn local dependencies. To make the search for promising architectures more efficient, we propose an Operation-Priority Neural Architecture Search (OP-NAS) algorithm, which optimizes both the search algorithm and the evaluation of candidate models. Specifically, we propose an Operation-Priority (OP) evolution strategy that facilitates model search by balancing exploration and exploitation. Furthermore, we design a Bi-branch Weight-Sharing (BIWS) training strategy for fast model evaluation. Extensive experiments show that the searched architecture (named AutoBERT-Zero) significantly outperforms BERT and its variants of different model capacities on various downstream tasks, demonstrating the architecture's transfer and scaling abilities. Remarkably, AutoBERT-Zero-base outperforms RoBERTa-base (which uses much more data) and BERT-large (which has a much larger model size) by 2.4 and 1.4 points, respectively, on the GLUE test set.
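To make the idea of an evolutionary search that balances exploration and exploitation over operations more concrete, here is a minimal toy sketch. It is not the OP-NAS algorithm itself: the abstract does not say how operation priority is computed, so the upper-confidence-bound (UCB) rule used here is an assumption borrowed from the bandit literature. The operation list, `toy_fitness`, `ucb_scores`, and `evolve` are all hypothetical names introduced for illustration, and the fitness function is a stand-in for actually training and evaluating a candidate model.

```python
import math
import random

# Hypothetical primitive operations; the abstract does not enumerate the real set.
PRIMITIVE_OPS = ["add", "mul", "matmul", "softmax", "tanh", "max"]

# Arbitrary per-operation values used only by the toy fitness below (assumption).
_TOY_VALUE = {"add": 0.2, "mul": 0.3, "matmul": 1.0,
              "softmax": 0.8, "tanh": 0.4, "max": 0.1}


def toy_fitness(arch):
    """Stand-in for evaluating a candidate on a proxy task; returns a value in [0, 1]."""
    return sum(_TOY_VALUE[op] for op in arch) / len(arch)


def ucb_scores(reward_sum, counts, c=1.0):
    """Score each operation by mean reward plus an exploration bonus (UCB1 form)."""
    total = sum(counts.values())
    scores = {}
    for op, n in counts.items():
        if n == 0:
            scores[op] = float("inf")  # untried operations are explored first
        else:
            scores[op] = reward_sum[op] / n + c * math.sqrt(2 * math.log(total) / n)
    return scores


def evolve(fitness, n_nodes=4, population_size=8, steps=50, seed=0):
    """Toy evolutionary search whose mutations are guided by per-operation UCB scores."""
    rng = random.Random(seed)
    reward_sum = {op: 0.0 for op in PRIMITIVE_OPS}
    counts = {op: 0 for op in PRIMITIVE_OPS}

    # Random initial population of (fitness, architecture) pairs.
    population = []
    for _ in range(population_size):
        arch = [rng.choice(PRIMITIVE_OPS) for _ in range(n_nodes)]
        population.append((fitness(arch), arch))
    best = max(population)

    for _ in range(steps):
        # Parent selection: best candidate in a small random tournament.
        _, parent = max(rng.sample(population, 3))

        # Operation selection: highest UCB score, ties broken at random.
        scores = ucb_scores(reward_sum, counts)
        top = max(scores.values())
        op = rng.choice([o for o in PRIMITIVE_OPS if scores[o] == top])

        # Mutation: overwrite one randomly chosen node with the selected operation.
        child = list(parent)
        child[rng.randrange(n_nodes)] = op
        child_fit = fitness(child)

        # Credit the operation with the fitness of the child it produced.
        reward_sum[op] += child_fit
        counts[op] += 1

        # Age-based replacement: the oldest candidate leaves the population.
        population.append((child_fit, child))
        population.pop(0)
        best = max(best, (child_fit, child))

    return best


if __name__ == "__main__":
    best_fitness, best_arch = evolve(toy_fitness)
    print(best_fitness, best_arch)
```

In this sketch, operations that have produced fit children get a higher mean reward (exploitation), while rarely tried operations get a larger bonus term (exploration). A real search would replace `toy_fitness` with an actual, much more expensive model evaluation, which is the cost that a weight-sharing strategy such as BIWS is meant to reduce.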
