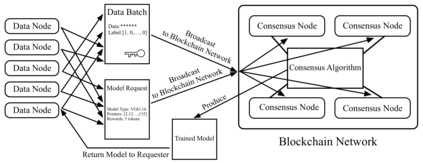

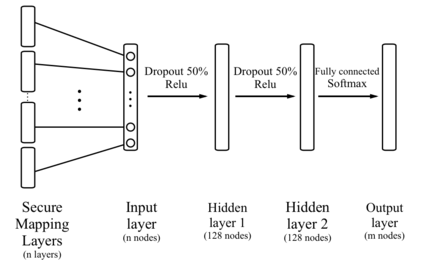

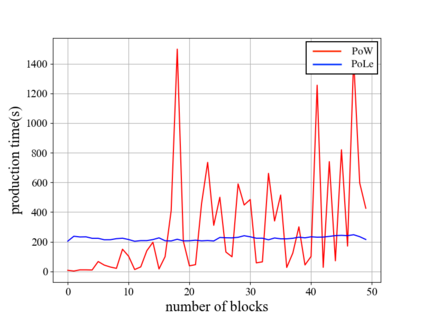

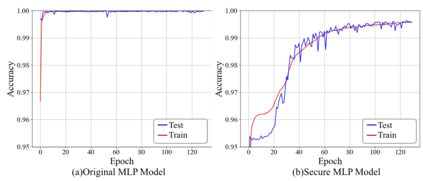

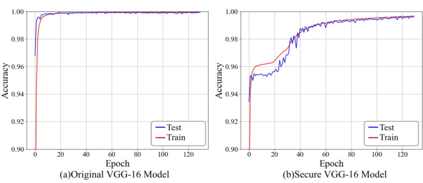

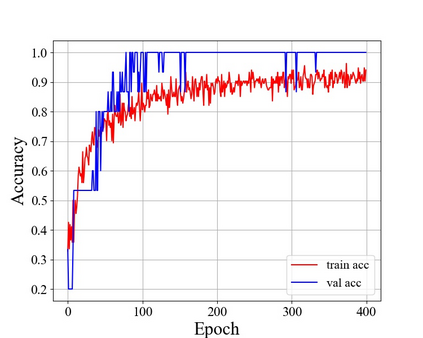

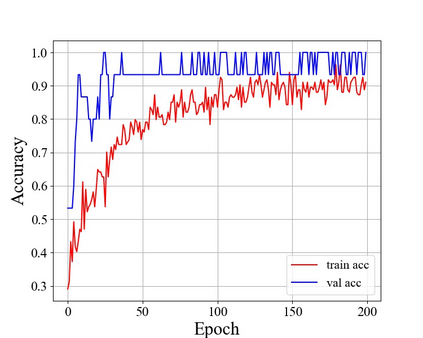

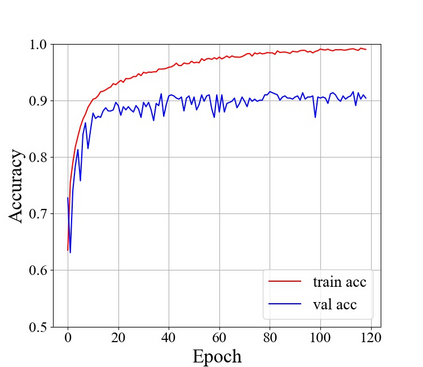

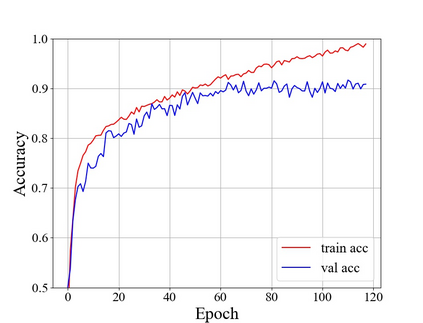

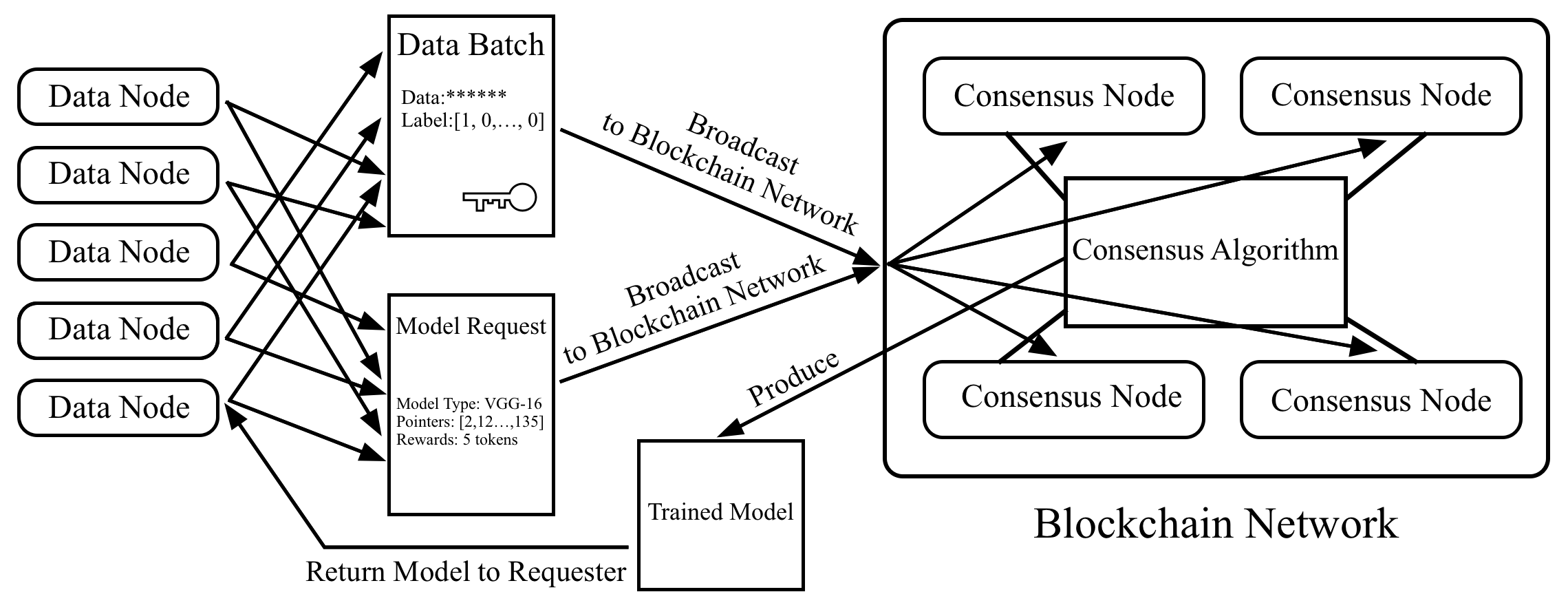

The progress of deep learning (DL), especially the recent development of automatic design of networks, has brought unprecedented performance gains at heavy computational cost. On the other hand, blockchain systems routinely perform a huge amount of computation that does not achieve practical purposes in order to build Proof-of-Work (PoW) consensus from decentralized participants. In this paper, we propose a new consensus mechanism, Proof of Learning (PoLe), which directs the computation spent for consensus toward optimization of neural networks (NN). In our mechanism, the training/testing data are released to the entire blockchain network (BCN) and the consensus nodes train NN models on the data, which serves as the proof of learning. When the consensus on the BCN considers a NN model to be valid, a new block is appended to the blockchain. We experimentally compare the PoLe protocol with Proof of Work (PoW) and show that PoLe can achieve a more stable block generation rate, which leads to more efficient transaction processing. We also introduce a novel cheating prevention mechanism, Secure Mapping Layer (SML), which can be straightforwardly implemented as a linear NN layer. Empirical evaluation shows that SML can detect cheating nodes at small cost to the predictive performance.
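The block-acceptance idea described above can be illustrated with a minimal sketch. This is not the paper's protocol: the names `Block`, `validate`, and `try_append`, the toy linear classifier, and the `min_accuracy` threshold are all assumptions made for illustration, and the Secure Mapping Layer is not modeled. The sketch only shows a node submitting trained weights as its "proof", and a validator re-evaluating them on the released test data before the block is appended.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass
class Block:
    # Hypothetical block layout: the trained weights stand in for the proof.
    prev_hash: str
    model_weights: list
    claimed_accuracy: float

    def digest(self) -> str:
        payload = json.dumps(
            [self.prev_hash, self.model_weights, self.claimed_accuracy]
        )
        return hashlib.sha256(payload.encode()).hexdigest()


def predict(weights, x):
    # Toy linear classifier (an assumption): weights[0] is the bias term.
    score = weights[0] + sum(w * xi for w, xi in zip(weights[1:], x))
    return 1 if score >= 0 else 0


def accuracy(weights, test_data):
    correct = sum(predict(weights, x) == y for x, y in test_data)
    return correct / len(test_data)


def validate(block, test_data, min_accuracy):
    # A validator re-measures accuracy on the released test data and
    # rejects models that miss the threshold or overstate their result.
    measured = accuracy(block.model_weights, test_data)
    return measured >= min_accuracy and measured >= block.claimed_accuracy


def try_append(chain, block, test_data, min_accuracy):
    # Append only if the block links to the current tip and the model is valid.
    if block.prev_hash != chain[-1].digest():
        return False
    if not validate(block, test_data, min_accuracy):
        return False
    chain.append(block)
    return True
```

In a real network each consensus node would run a check like `validate` independently and the block would be accepted only once the nodes agree; here a single call stands in for that agreement.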
