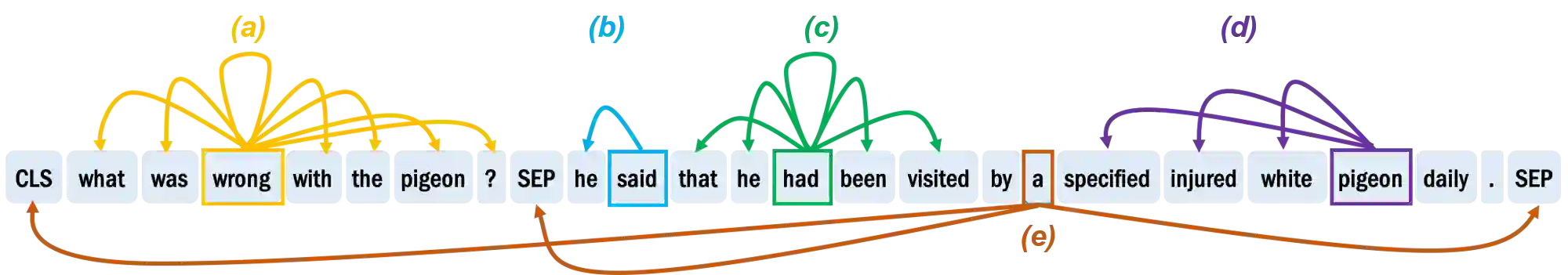

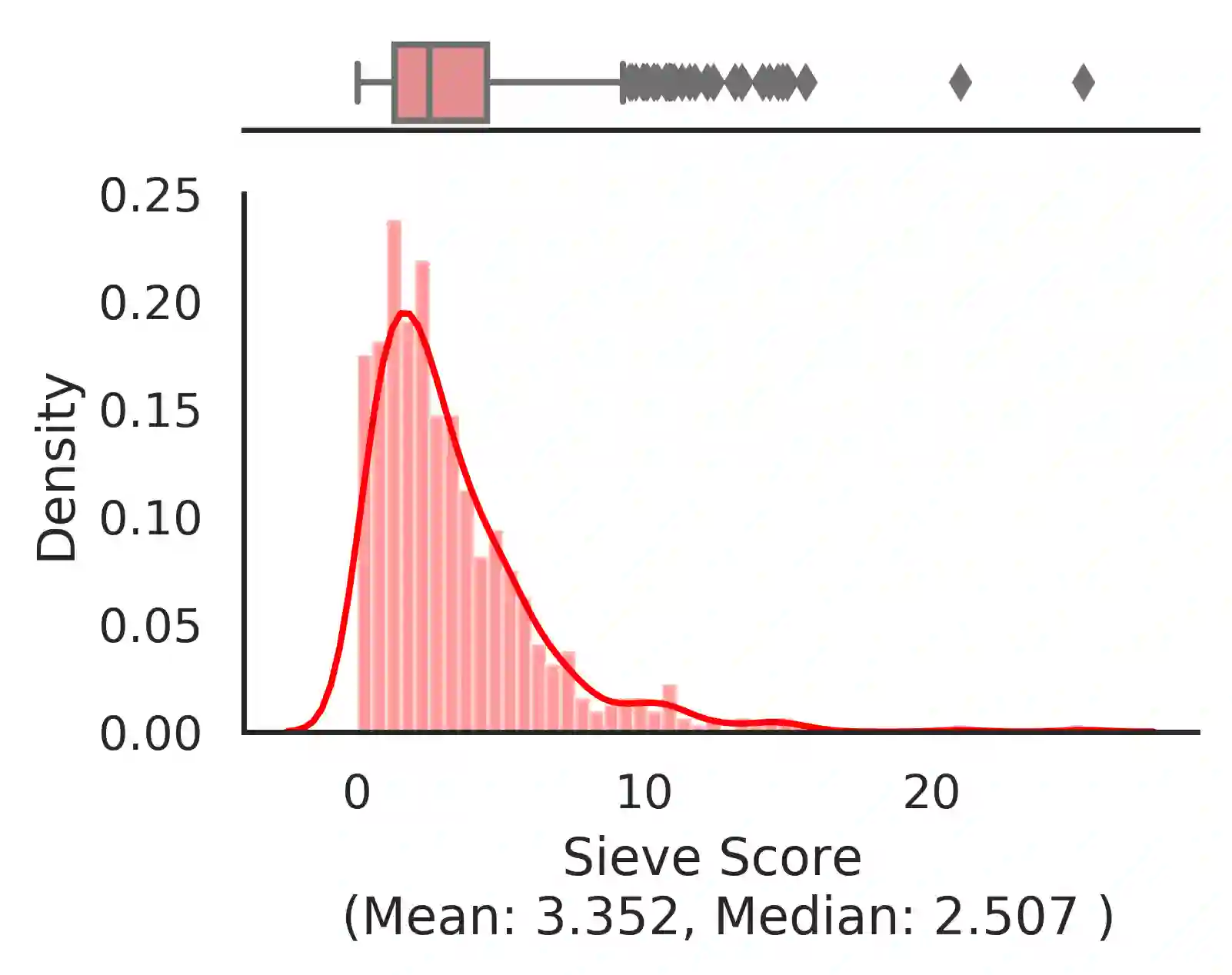

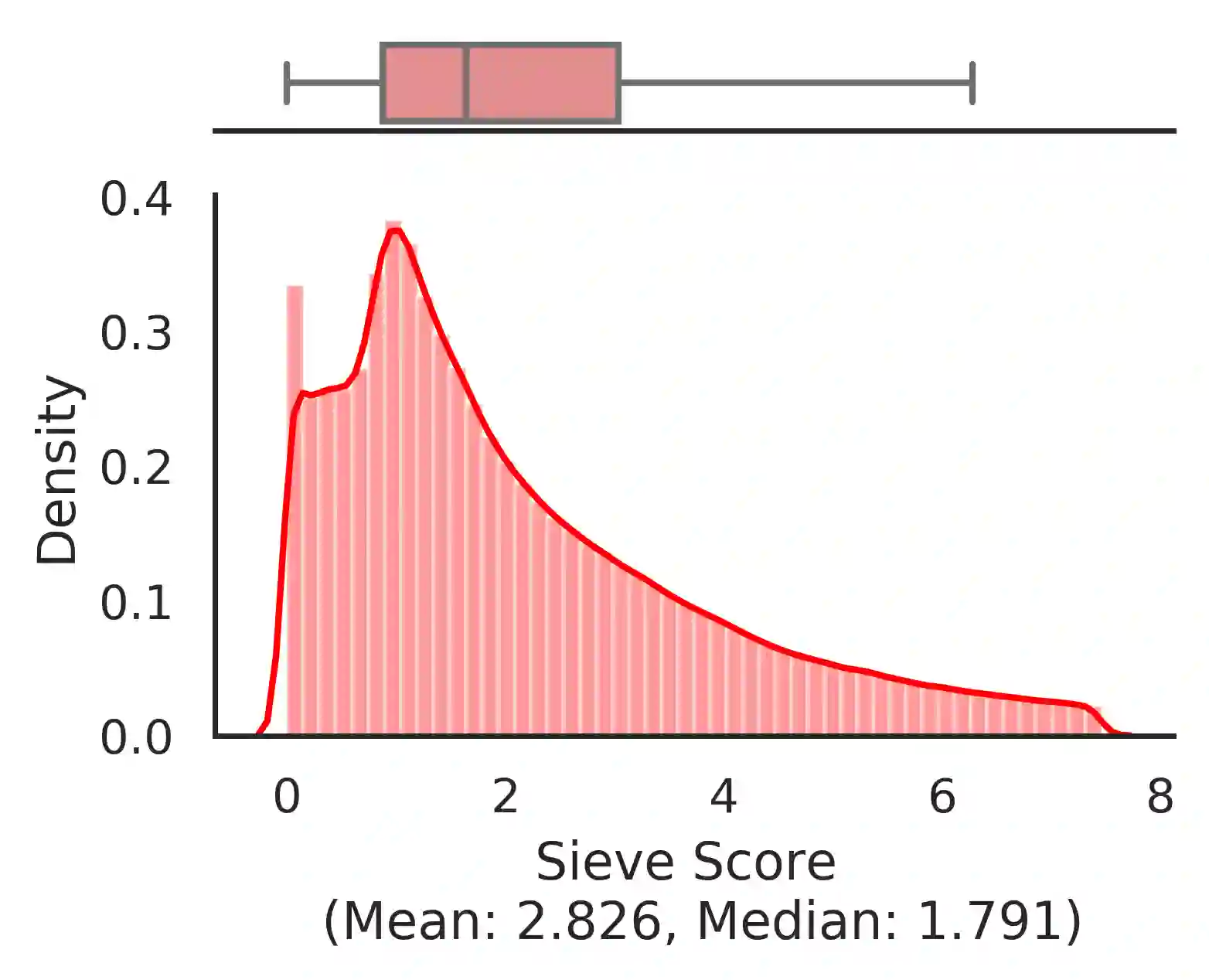

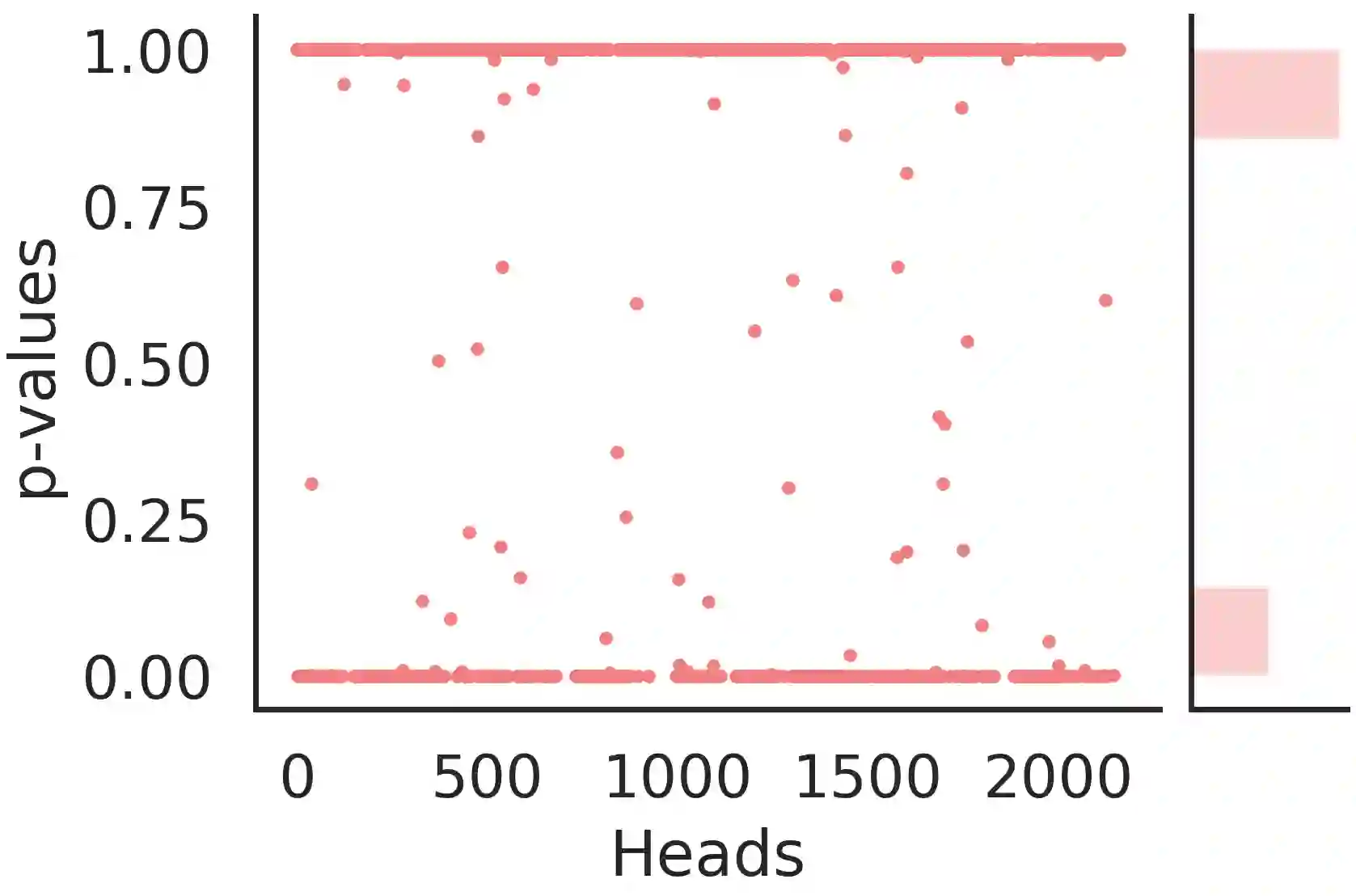

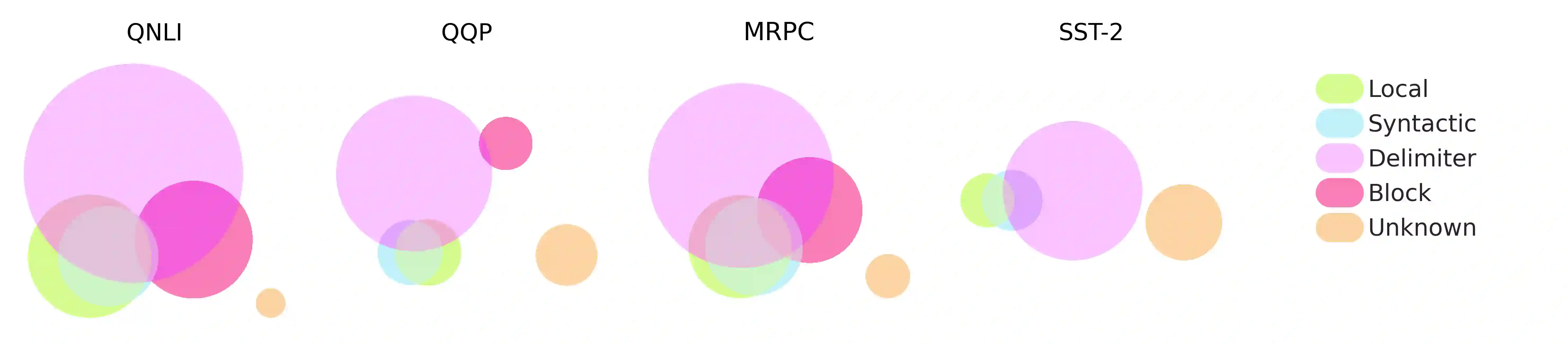

Multi-head attention is a mainstay of transformer-based models. Different methods have been proposed to classify the role of each attention head based on the relations between tokens that receive high pairwise attention. These roles include syntactic (tokens with some syntactic relation), local (nearby tokens), block (tokens in the same sentence), and delimiter (the special [CLS] and [SEP] tokens). Existing classification methods face two main challenges: (a) there are no standard scores across studies or across functional roles, and (b) these scores are often averages measured across sentences that do not capture statistical significance. In this work, we formalize a simple yet effective score that generalizes to all the roles of attention heads, and we apply hypothesis testing to this score for robust inference. This gives us the right lens to systematically analyze attention heads and to confidently address many commonly posed questions about the BERT model. In particular, we examine the co-location of multiple functional roles in the same attention head, the distribution of attention heads across layers, and the effect of fine-tuning for specific NLP tasks on these functional roles.
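
The abstract does not spell out the score or the test, so the following is only a rough illustration under stated assumptions, not the authors' method. It assumes one plausible form: for each sentence, compare the attention mass a head places on token pairs that satisfy a role (here a hypothetical "local" role, where the key is within one position of the query) against the mass a uniform head would place there, then run a one-sided exact sign test across sentences to ask whether the score exceeds a threshold. The names `local_mask`, `role_score`, and `sign_test_greater` and the toy attention matrices are hypothetical.

```python
import math
from typing import List, Sequence


def local_mask(n: int, window: int = 1) -> List[List[bool]]:
    """Hypothetical 'local' role: key j lies within `window` positions of query i (i != j)."""
    return [[i != j and abs(i - j) <= window for j in range(n)] for i in range(n)]


def role_score(attn: Sequence[Sequence[float]], mask: Sequence[Sequence[bool]]) -> float:
    """Attention mass on role-matching pairs, relative to what a uniform head would give.

    attn[i][j] is the weight from query i to key j (each row sums to 1).
    A score above 1 means the head favours the role's pairs more than uniform attention.
    """
    n = len(attn)
    matching = [(i, j) for i in range(n) for j in range(n) if mask[i][j]]
    if not matching:
        raise ValueError("mask selects no token pairs")
    observed = sum(attn[i][j] for i, j in matching) / n  # mean mass per query row
    expected = len(matching) / (n * n)                     # same quantity for uniform attention
    return observed / expected


def sign_test_greater(scores: Sequence[float], threshold: float = 1.0) -> float:
    """One-sided exact sign test: p-value for H0 'median score <= threshold'."""
    diffs = [s - threshold for s in scores if s != threshold]  # ties are dropped
    n = len(diffs)
    k = sum(1 for d in diffs if d > 0)
    # P(X >= k) for X ~ Binomial(n, 0.5)
    return sum(math.comb(n, i) for i in range(k, n + 1)) / 2 ** n


if __name__ == "__main__":
    mask = local_mask(4)
    uniform = [[0.25] * 4 for _ in range(4)]
    local_head = [
        [0.0, 1.0, 0.0, 0.0],
        [0.5, 0.0, 0.5, 0.0],
        [0.0, 0.5, 0.0, 0.5],
        [0.0, 0.0, 1.0, 0.0],
    ]
    print(role_score(uniform, mask))     # → 1.0 (uniform head)
    print(role_score(local_head, mask))  # ≈ 2.67 (strongly local head)
    # Hypothetical per-sentence scores for one head across 8 sentences.
    print(sign_test_greater([1.2, 1.5, 0.9, 2.0, 1.7, 1.3, 1.1, 1.8]))  # → 0.03515625
```

Under these assumptions, a small p-value would suggest the head is consistently biased toward the role across sentences rather than only on average; other roles (block, delimiter, syntactic) would change only the mask.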
