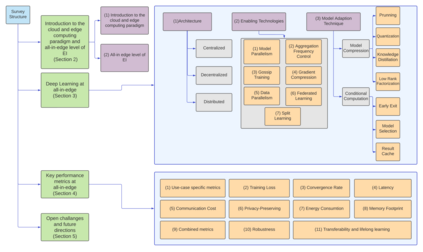

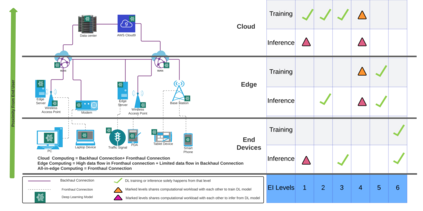

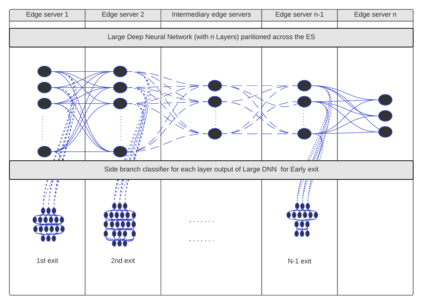

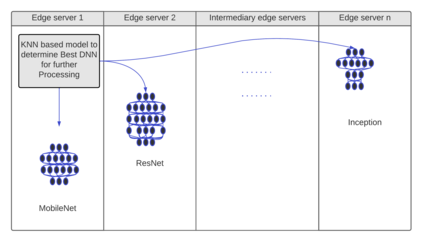

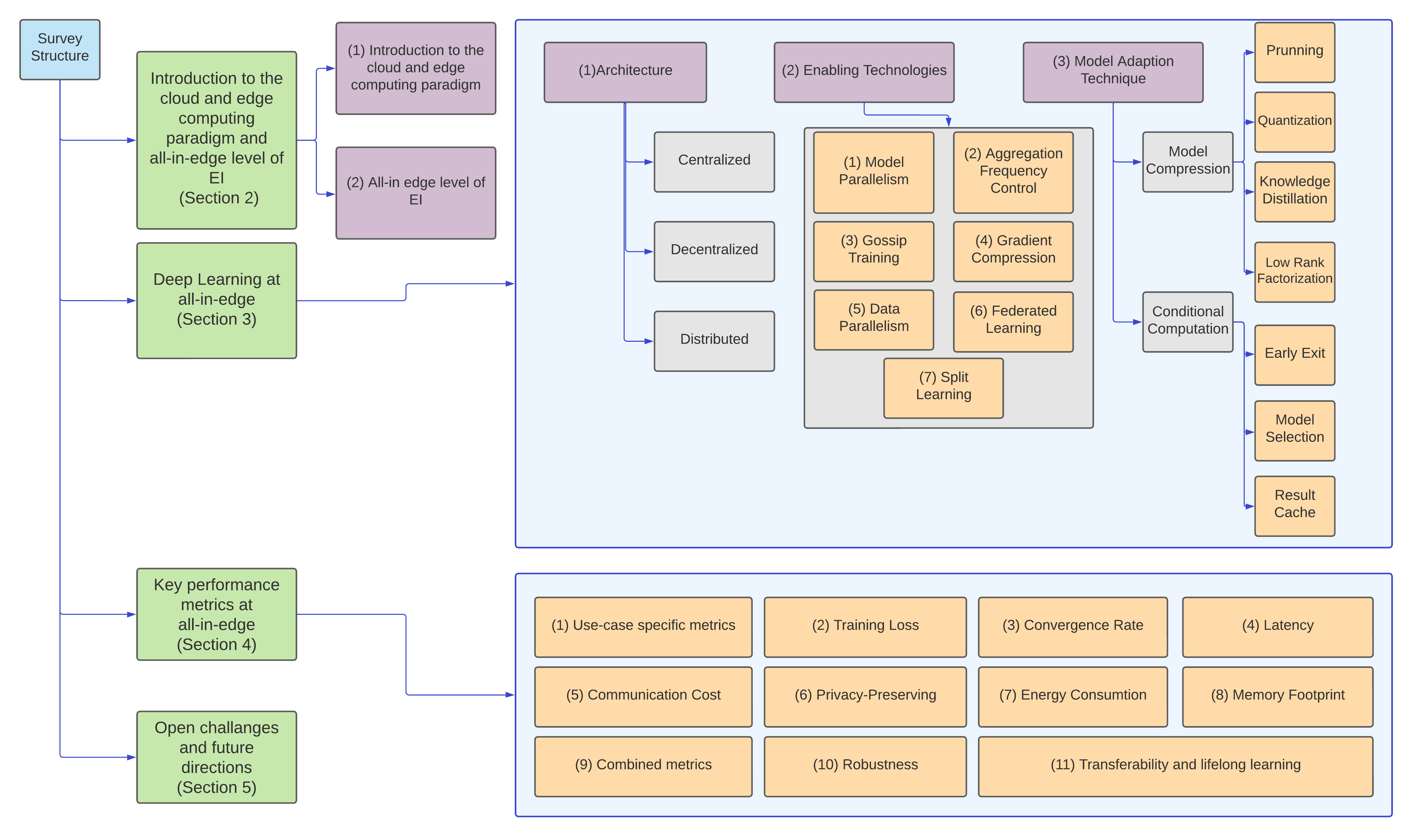

In recent years, deep learning (DL) models have achieved remarkable results on non-trivial tasks such as speech recognition and natural language understanding. A significant contributor to this success is the proliferation of end devices, which have acted as a catalyst by supplying data to data-hungry DL models. However, performing DL training and inference remains the main challenge. Central cloud servers are usually used for this computation, but they introduce other significant challenges, such as high latency, increased communication costs, and privacy concerns. To mitigate these drawbacks, considerable effort has been made to push the processing of DL models to edge servers. The convergence of DL and edge computing has given rise to edge intelligence (EI). This survey focuses primarily on the fifth level of EI, called the all in-edge level, where DL training and inference (deployment) are performed solely by edge servers. All in-edge is suitable when end devices have limited computing resources (e.g., Internet-of-Things devices) and when other requirements, such as latency and communication cost, are important, as in mission-critical applications (e.g., health care). First, this paper presents all in-edge computing architectures, including centralized, decentralized, and distributed architectures. Second, it presents enabling technologies, such as model parallelism and split learning, that facilitate DL training and deployment at edge servers. Third, it describes model adaptation techniques based on model compression and conditional computation, because the limited computational resources of edge servers prevent standard cloud-based DL deployment from being applied directly to all in-edge. Fourth, it discusses eleven key performance metrics for efficiently evaluating DL performance at the all in-edge level. Finally, it presents several open research challenges in the area of all in-edge.
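Model parallelism and split learning, named above as enabling technologies, share one core idea: partition a model at a "cut" layer so that different edge servers compute different layers and exchange only the intermediate activations. The following is a minimal, forward-pass-only sketch of that partitioning, not the survey's method. The toy weights, the helper names `dense`, `run`, and `split_model`, and the cut point are all hypothetical. Real split learning also sends gradients back across the cut during training.

```python
from typing import Callable, List, Tuple

Vector = List[float]
Layer = Callable[[Vector], Vector]


def dense(weights: List[List[float]], bias: Vector) -> Layer:
    """Hypothetical fully connected layer computing W @ x + b."""
    def apply(x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) + b
                for row, b in zip(weights, bias)]
    return apply


def relu(x: Vector) -> Vector:
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in x]


def run(layers: List[Layer], x: Vector) -> Vector:
    """Apply the layers in order (a sequential forward pass)."""
    for layer in layers:
        x = layer(x)
    return x


def split_model(layers: List[Layer], cut: int) -> Tuple[List[Layer], List[Layer]]:
    """Partition the model at the cut layer into a head and a tail."""
    return layers[:cut], layers[cut:]


# Toy 3-layer model with made-up weights (assumption, for illustration only).
model = [
    dense([[1.0, -1.0], [0.5, 2.0]], [0.0, 1.0]),
    relu,
    dense([[2.0, 1.0]], [-1.0]),
]

head, tail = split_model(model, cut=2)

x = [3.0, 1.0]
activations = run(head, x)        # computed on a first (hypothetical) edge server
output = run(tail, activations)   # only `activations` is sent to the second server

print(activations, output)        # [2.0, 4.5] [7.5]
```

Because the two partitions apply the same layers in the same order, the split forward pass yields the same result as running the whole model on one server. The cut point therefore trades per-server compute against the size of the activation vector that must cross the network.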

翻译:近些年来,深层学习模式(DL)在语言识别和自然语言理解等非三角任务方面取得了显著成就。其成功的一个重要贡献者是作为为数据饥饿DL模型提供数据的催化剂的终端设备的扩散。然而,计算DL培训和推论是主要的挑战。通常,中央云服务器用于计算,但是它也带来了其他重大挑战,如高悬浮、通信成本增加和隐私问题。为减轻这些缺陷,已经作出了相当大的努力,将DL模型的处理推向边缘服务器。此外,DL和边缘的连接点已经产生了优势智能(EI)。这份调查文件主要侧重于EI的第五层,称为所有前沿水平,DL的培训和推断(部署)仅由边缘服务器进行。当最终装置的模型部署资源较低时,例如,基于互联网的定位挑战,以及隐私和通信成本等其他要求,如在任务边缘应用的定位和通信成本是十分重要的,例如,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在成本方面,在