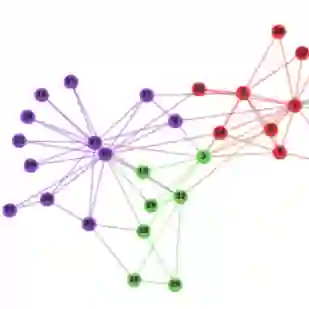

Node representation learning in a network is an important machine learning technique for encoding relational information in a continuous vector space while preserving the inherent properties and structures of the network. Recently, \textit{unsupervised} node embedding methods such as DeepWalk \citep{deepwalk}, LINE \citep{line}, struc2vec \citep{struc2vec}, PTE \citep{pte}, UserItem2vec \citep{wu2020multi}, and RWJBG \citep{li2021random} have emerged from the Skip-gram model \citep{word2vec} and outperform existing relational models on several downstream tasks such as node classification and link prediction. However, providing post-hoc explanations of Skip-gram-based embeddings remains a challenging problem because of the lack of explanation methods and theoretical studies applicable to embeddings. In this paper, we first show that global explanations of Skip-gram-based embeddings can be found by computing \textit{bridgeness} under a spectral cluster-aware local perturbation. Moreover, we propose a novel gradient-based explanation method, which we call GRAPH-wGD, that finds the top-$q$ global explanations of learned graph embedding vectors more efficiently. Experiments show that the ranking of nodes by GRAPH-wGD scores is highly correlated with true \textit{bridgeness} scores. We also observe, on five real-world graphs, that the top-$q$ node-level explanations selected by GRAPH-wGD have higher importance scores and produce more changes in class label prediction when perturbed than the nodes selected by recent alternatives.
