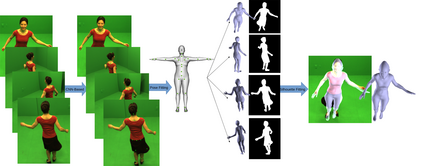

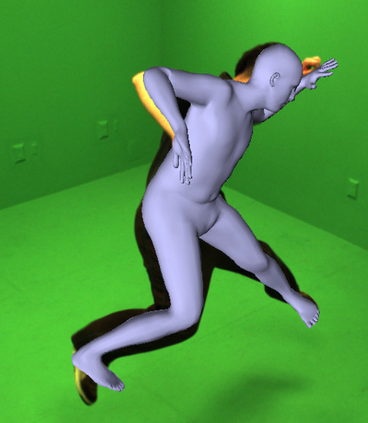

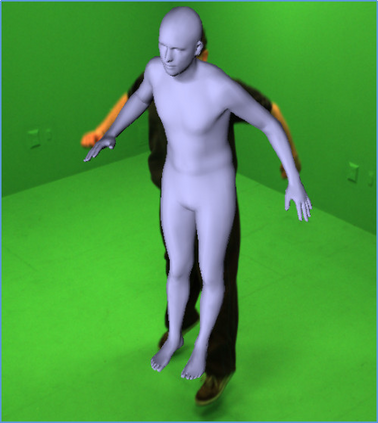

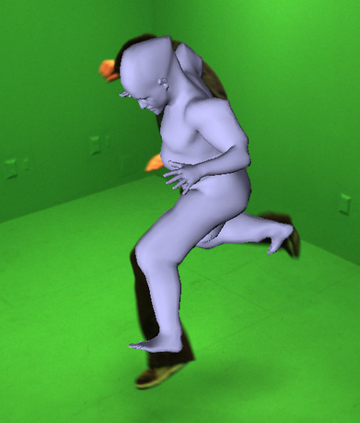

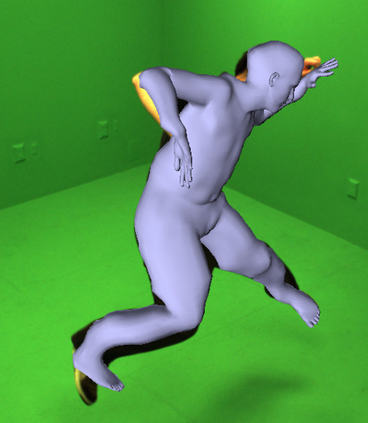

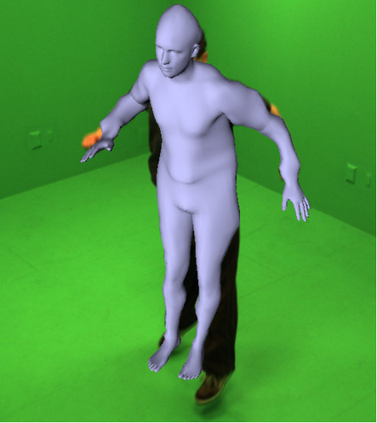

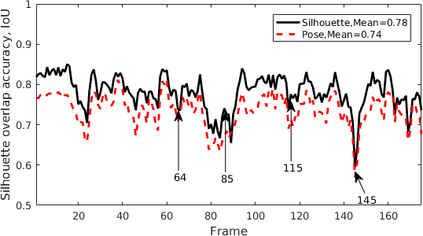

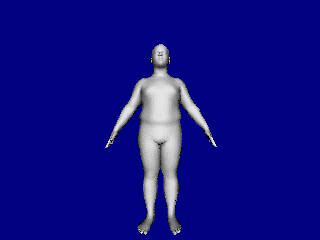

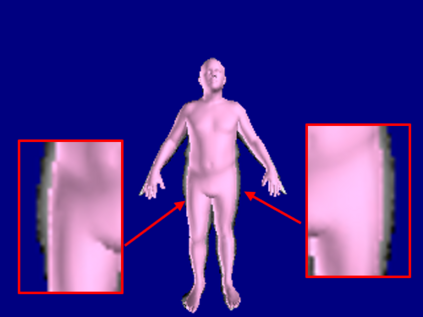

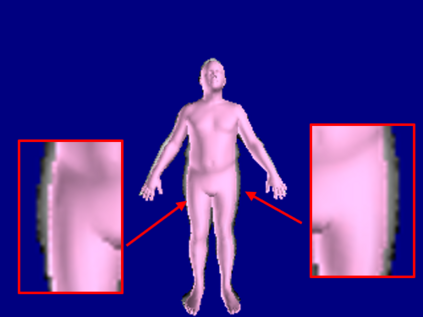

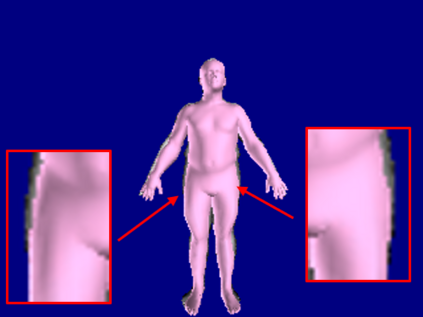

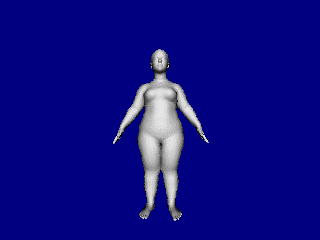

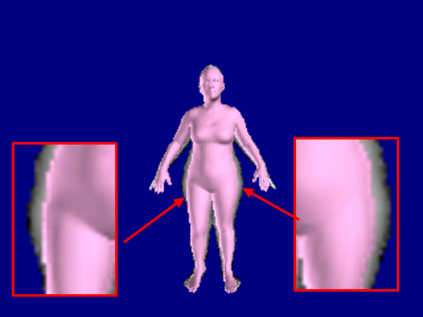

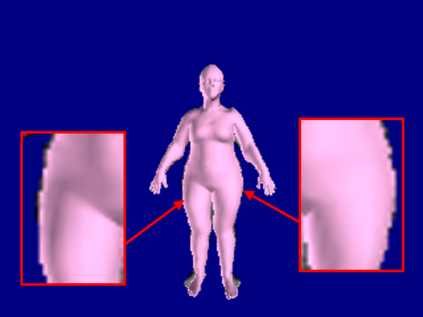

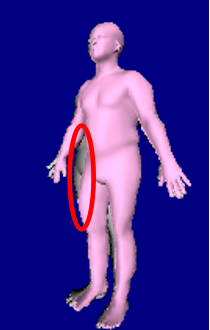

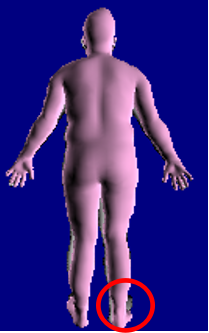

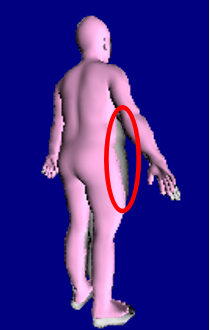

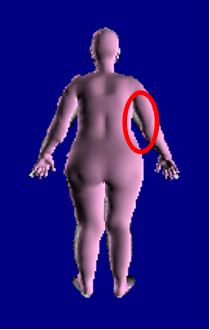

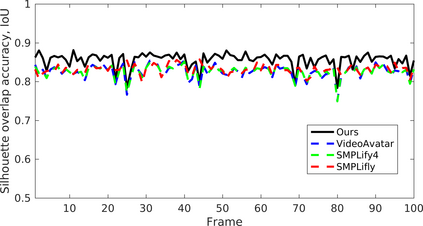

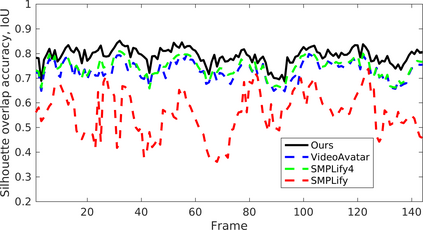

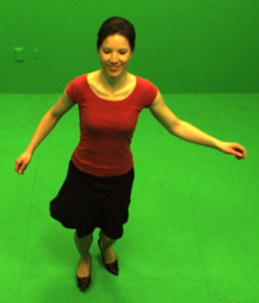

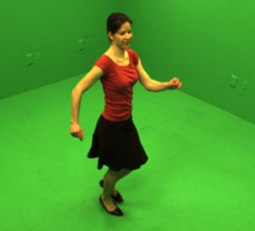

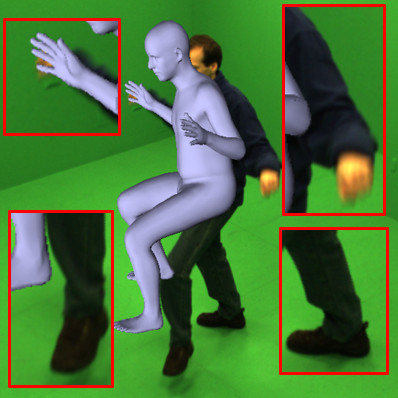

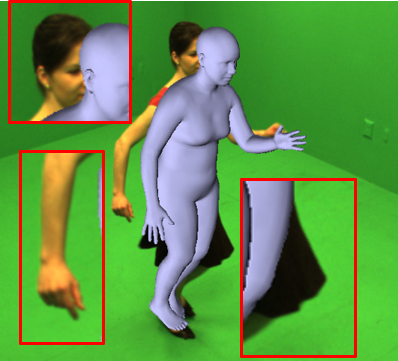

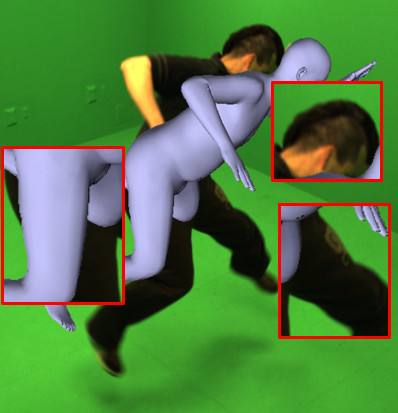

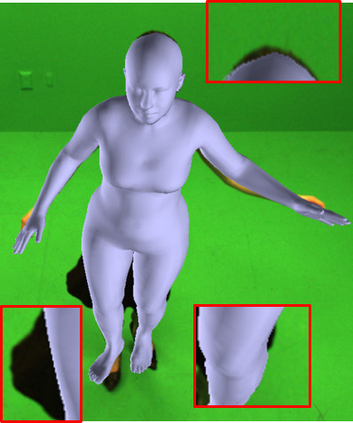

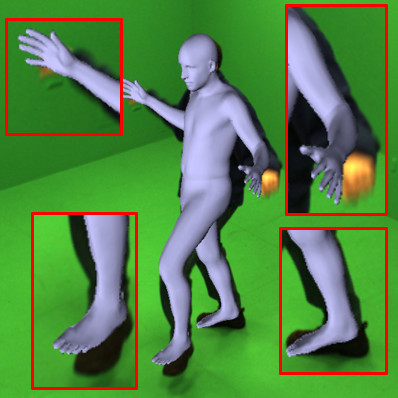

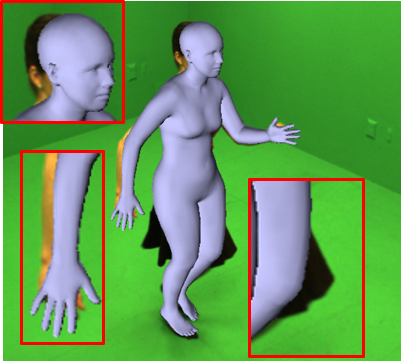

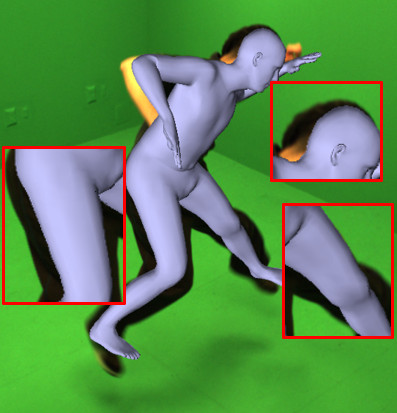

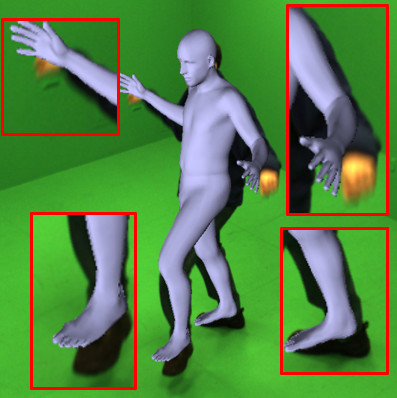

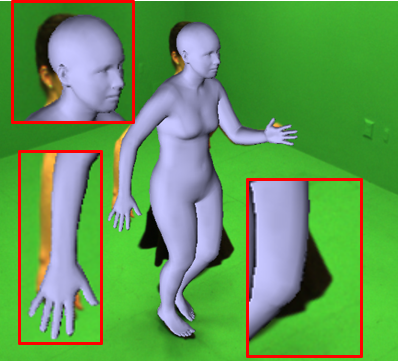

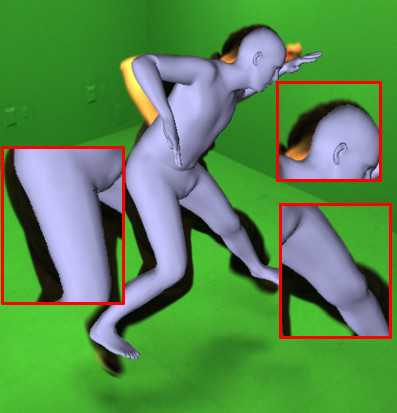

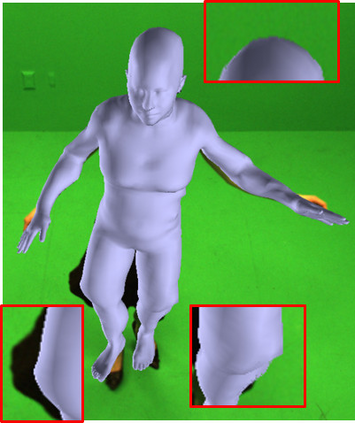

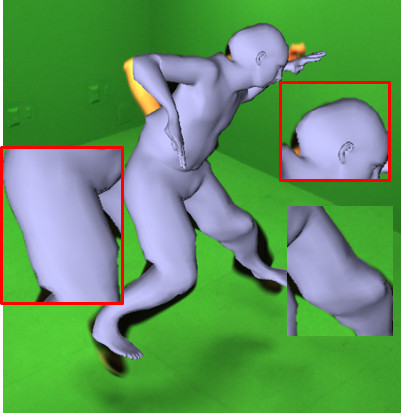

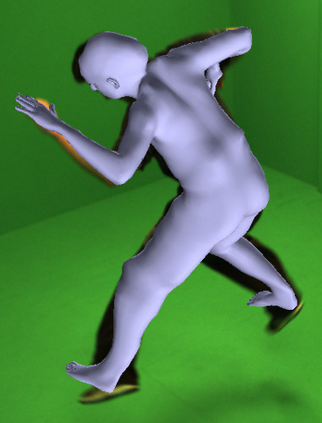

This paper presents a novel method for estimating 3D human pose and shape from sparse-view images, using joint points and silhouettes together with a parametric body model. First, the parametric model is fitted to joint points estimated by a deep learning-based human pose estimator. Next, we extract correspondences between the pose-fitted parametric model and the silhouettes in both 2D and 3D space. A novel energy function built on these correspondences is then minimized to fit the parametric model to the silhouettes. Because the silhouette energy function is built from both 2D and 3D space, our approach captures sufficient shape information. As a result, the method needs images from only a few sparse views, balancing the amount of data used against the prior information required. Results on synthetic and real data demonstrate that our approach performs competitively on human body pose and shape estimation.
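
To make the idea of a correspondence-based silhouette energy more concrete, the following is a minimal sketch of a 2D term only. It is not the energy function of the paper, whose exact form is not given in this abstract and which also uses 3D correspondences. The pinhole projection, the nearest-point matching rule, and all function names (`project`, `nearest_correspondences`, `silhouette_energy_2d`) are assumptions made purely for illustration.

```python
import math


def project(point3d, focal=1.0):
    """Pinhole projection of a 3D point (x, y, z), z > 0, onto the image plane (assumed camera model)."""
    x, y, z = point3d
    return (focal * x / z, focal * y / z)


def nearest_correspondences(model_pts2d, contour_pts2d):
    """Pair each projected model point with its closest silhouette contour point (assumed matching rule)."""
    pairs = []
    for p in model_pts2d:
        q = min(contour_pts2d, key=lambda c: math.dist(p, c))
        pairs.append((p, q))
    return pairs


def silhouette_energy_2d(model_pts3d, contour_pts2d, focal=1.0):
    """Sum of squared 2D distances between projected model points and their matched contour points."""
    projected = [project(p, focal) for p in model_pts3d]
    pairs = nearest_correspondences(projected, contour_pts2d)
    return sum(math.dist(p, q) ** 2 for p, q in pairs)


# Toy data: three silhouette contour points and three model boundary points at depth z = 2.
contour = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
aligned_model = [(0.0, 0.0, 2.0), (2.0, 0.0, 2.0), (0.0, 2.0, 2.0)]
shifted_model = [(0.2, 0.0, 2.0), (2.2, 0.0, 2.0), (0.2, 2.0, 2.0)]

energy_aligned = silhouette_energy_2d(aligned_model, contour)
energy_shifted = silhouette_energy_2d(shifted_model, contour)
```

In this toy setup the energy vanishes when the projected model points coincide with the contour and grows as the model drifts away from it, which is the property an optimizer over pose and shape parameters would exploit. A multi-view version would presumably sum such terms over the available views.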
