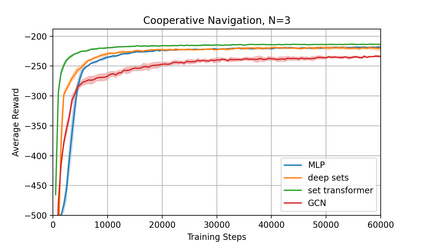

Cooperative Multi-Agent Reinforcement Learning (MARL) with permutation-invariant agents has achieved tremendous empirical success in real-world applications. Unfortunately, theoretical understanding of this problem is lacking, owing to the curse of many agents and the limited exploration of relational reasoning in existing work. In this paper, we verify that the transformer implements complex relational reasoning, and we propose and analyze model-free and model-based offline MARL algorithms with transformer approximators. We prove that the suboptimality gaps of the model-free and model-based algorithms are, respectively, independent of and logarithmic in the number of agents, which mitigates the curse of many agents. These results follow from a novel generalization error bound for the transformer and a novel analysis of the Maximum Likelihood Estimate (MLE) of the system dynamics with the transformer. Our model-based algorithm is the first provably efficient MARL algorithm that explicitly exploits the permutation invariance of the agents. Our improved generalization bound may be of independent interest and applies to other transformer-based regression problems beyond MARL.
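To make the notion of permutation invariance concrete, the following is a minimal sketch, not the architecture analyzed in the paper. It assumes a single self-attention head without positional encodings, followed by mean pooling over agents; the names `attention_pool`, `wq`, `wk`, and `wv` are hypothetical and the weights are random. Under these assumptions, reordering the agents should leave the pooled output unchanged up to floating-point rounding.

```python
import math
import random


def softmax(row):
    # Numerically stable softmax over a list of floats.
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(x, w):
    # x: length-d vector, w: d x k matrix (list of rows) -> length-k vector.
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def attention_pool(agents, wq, wk, wv):
    """Single-head self-attention over agent states, then mean pooling.

    No positional encoding is used (an assumption of this sketch), so the
    result depends on the set of agent states, not on their order.
    """
    q = [matvec(a, wq) for a in agents]
    k = [matvec(a, wk) for a in agents]
    v = [matvec(a, wv) for a in agents]
    scale = math.sqrt(len(q[0]))
    attended = []
    for qi in q:
        scores = [sum(x * y for x, y in zip(qi, kj)) / scale for kj in k]
        weights = softmax(scores)
        attended.append(
            [sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))]
        )
    n = len(attended)
    return [sum(row[c] for row in attended) / n for c in range(len(attended[0]))]


rng = random.Random(0)
d = 4  # hypothetical per-agent state dimension


def rand_matrix(rows, cols):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


wq, wk, wv = rand_matrix(d, d), rand_matrix(d, d), rand_matrix(d, d)
agents = rand_matrix(5, d)   # five agents with random states
shuffled = agents[:]
rng.shuffle(shuffled)        # same agents, different order

a = attention_pool(agents, wq, wk, wv)
b = attention_pool(shuffled, wq, wk, wv)
max_diff = max(abs(x - y) for x, y in zip(a, b))
print("max difference after permuting agents:", max_diff)
```

The comparison uses a maximum absolute difference rather than exact equality, because permuting the agents changes the order of floating-point summation.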
