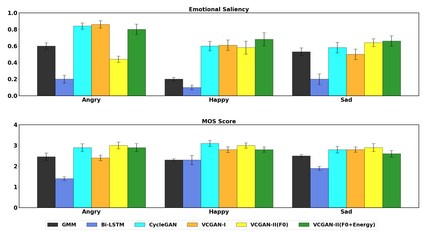

This paper introduces a new framework for non-parallel emotion conversion in speech. Our framework is based on two key contributions. First, we propose a stochastic version of the popular CycleGAN model. Our modified loss function introduces a Kullback-Leibler (KL) divergence term that aligns the source and target data distributions learned by the generators, thus overcoming the limitations of sample-wise generation. By using a variational approximation to this stochastic loss function, we show that our KL divergence term can be implemented via a paired density discriminator. We term this new architecture a variational CycleGAN (VCGAN). Second, we model the prosodic features of the target emotion as a smooth and learnable deformation of the source prosodic features. This approach provides implicit regularization that offers key advantages, notably better range alignment for unseen and out-of-distribution speakers. We conduct rigorous experiments and comparative studies to demonstrate that our proposed framework is fairly robust and achieves high performance against several state-of-the-art baselines.
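The claim that a KL divergence term can be realized through a discriminator rests on a general fact: a classifier trained to separate samples of two distributions recovers their log density ratio in its logit. The sketch below illustrates only that general idea (the classical density-ratio trick). It is not the paper's paired density discriminator or VCGAN loss. Two 1-D Gaussians stand in for the learned source and target distributions, a logistic-regression "discriminator" with quadratic features stands in for a neural network, and all function names are hypothetical.

```python
import numpy as np

# Hypothetical illustration of the density-ratio trick for estimating KL(p || q);
# NOT the paper's paired density discriminator. Gaussians stand in for learned data.

def analytic_kl_gauss(mu_p, sd_p, mu_q, sd_q):
    """Closed-form KL(p || q) for univariate Gaussians, used only as a reference."""
    return (np.log(sd_q / sd_p)
            + (sd_p**2 + (mu_p - mu_q)**2) / (2.0 * sd_q**2) - 0.5)

def features(x):
    # Quadratic features: the log density ratio of two Gaussians is exactly quadratic in x.
    return np.stack([np.ones_like(x), x, x**2], axis=1)

def fit_logistic_newton(X, y, n_iter=25):
    """Fit a logistic-regression discriminator with Newton's method (IRLS)."""
    w = np.zeros(X.shape[1])
    for _ in range(n_iter):
        logits = X @ w
        prob = 0.5 * (1.0 + np.tanh(0.5 * logits))  # numerically stable sigmoid
        grad = X.T @ (y - prob)                      # gradient of the log-likelihood
        curv = prob * (1.0 - prob)
        hess = X.T @ (X * curv[:, None])             # negative Hessian of the log-likelihood
        w = w + np.linalg.solve(hess, grad)
    return w

def kl_via_discriminator(x_p, x_q, n_iter=25):
    """Estimate KL(p || q) from samples.

    Samples from p are labeled 1 and samples from q are labeled 0 (equal counts),
    so the optimal discriminator logit equals log p(x) / q(x). Averaging that logit
    over the p-samples estimates KL(p || q) = E_p[log p(x) / q(x)].
    """
    X = features(np.concatenate([x_p, x_q]))
    y = np.concatenate([np.ones_like(x_p), np.zeros_like(x_q)])
    w = fit_logistic_newton(X, y, n_iter)
    return float(np.mean(features(x_p) @ w))

rng = np.random.default_rng(0)
n = 200_000
x_p = rng.normal(0.0, 1.0, n)  # stand-in for one domain's distribution
x_q = rng.normal(1.0, 1.5, n)  # stand-in for the other domain's distribution
est = kl_via_discriminator(x_p, x_q)
ref = analytic_kl_gauss(0.0, 1.0, 1.0, 1.5)
print(f"discriminator estimate: {est:.3f}, closed form: {ref:.3f}")
```

Comparing the sample-based estimate with the closed form shows why a discriminator can stand in for an intractable KL term: the divergence is never computed from explicit densities, only from a classifier's output on samples. In a GAN setting the classifier would be a neural network trained jointly with the generators, which this toy example deliberately omits.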

翻译:本文引入了非平行语言情绪转换的新框架。 我们的框架基于两个关键贡献 。 首先, 我们提出流行循环GAN模型的随机版。 我们修改过的损失函数引入了 Kullback Leibel (KL) 差异术语, 该术语将源和发电机所学的目标数据发布方法相匹配, 从而克服了样本智慧生成的局限性 。 通过对随机损失函数使用变相近度, 我们显示我们的 KL 差异术语可以通过配对密度分析器实施 。 我们把这个新架构称为变异循环GAN( VCGAN ) 。 第二, 我们将目标情感的预变异性特征建模为源性特征的顺利和可学习的变形。 这种方法提供了隐含的规范化, 提供了更好的范围与隐蔽和流传者相匹配的关键优势 。 我们进行了严格的实验和比较研究, 以证明我们提议的框架与一些最先进的基线相比, 具有相当强的性。