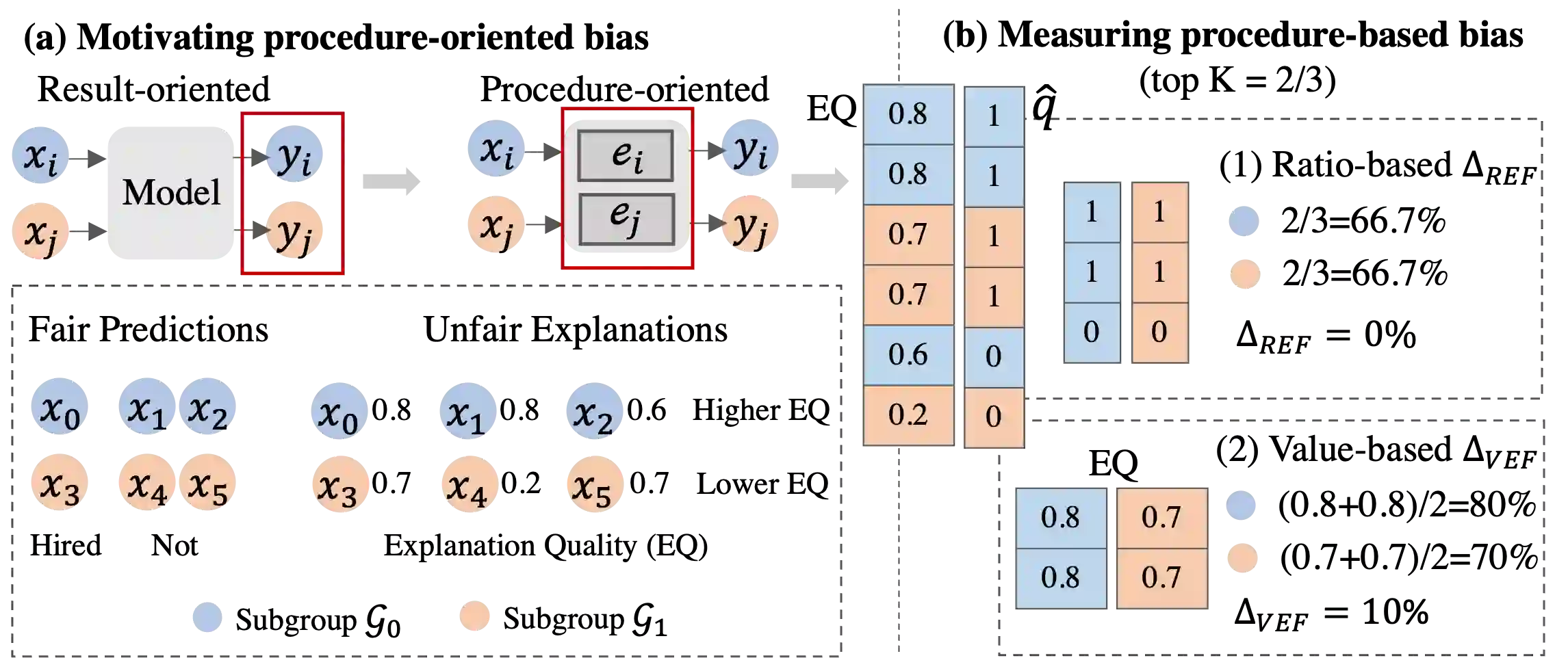

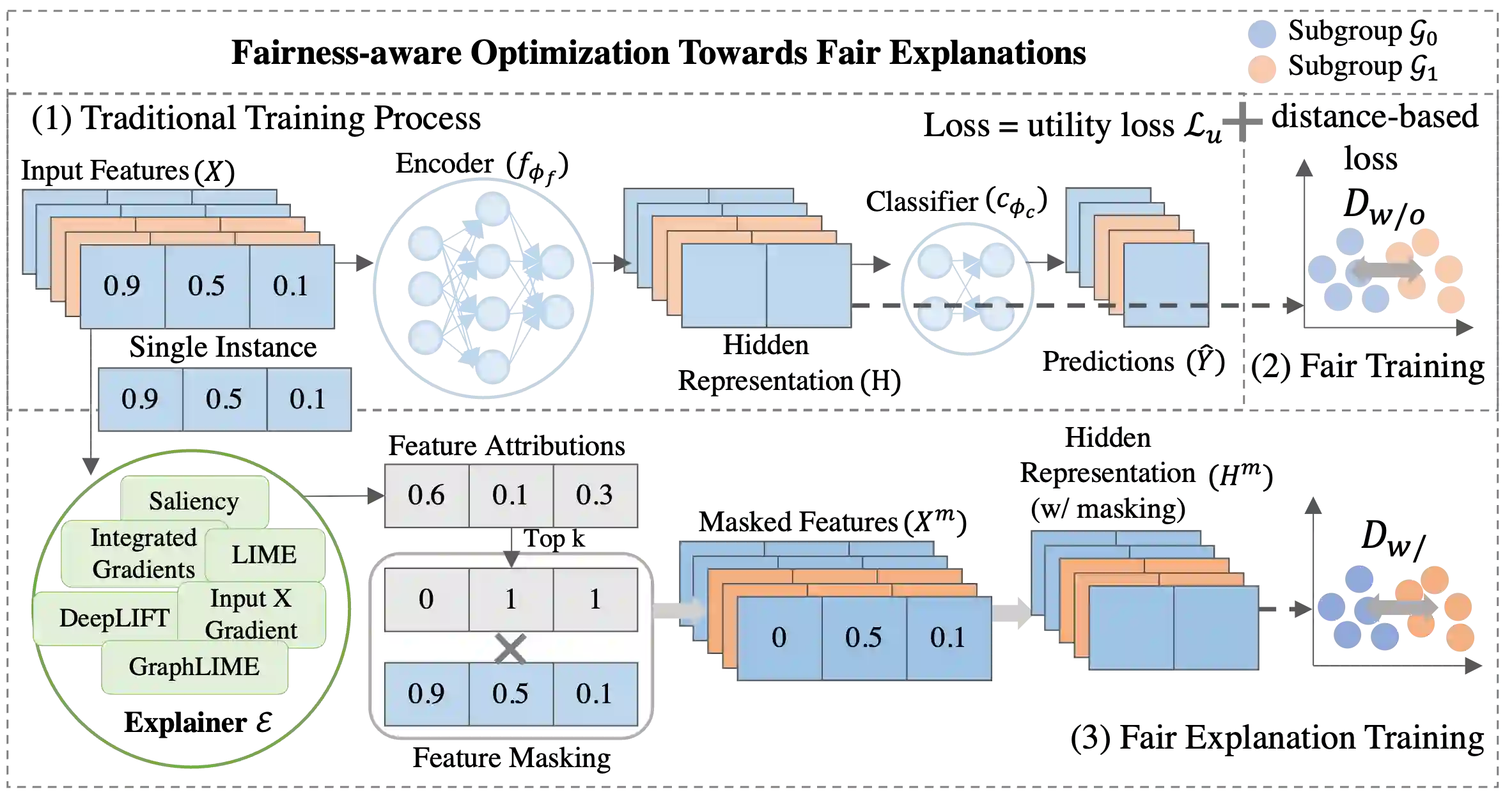

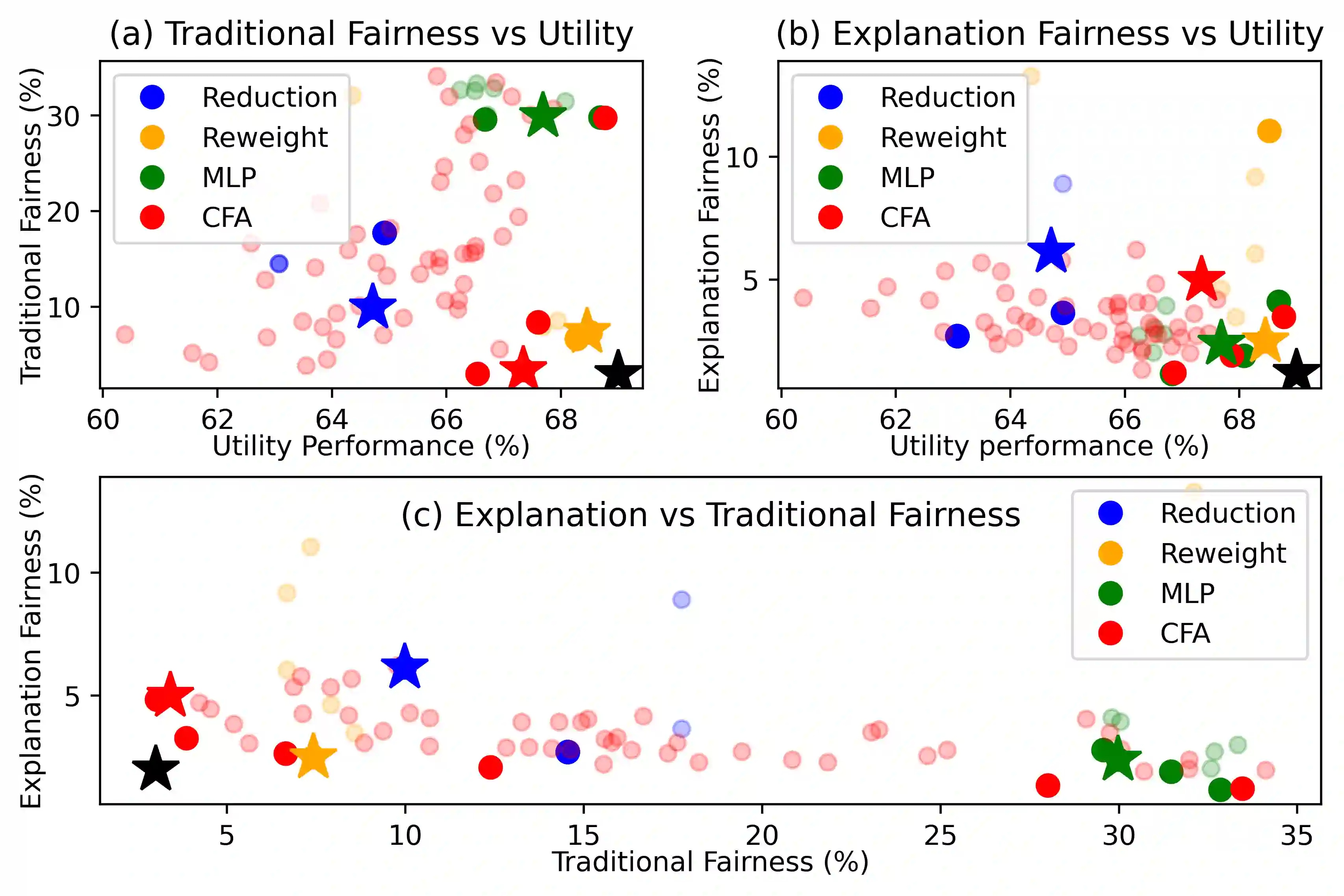

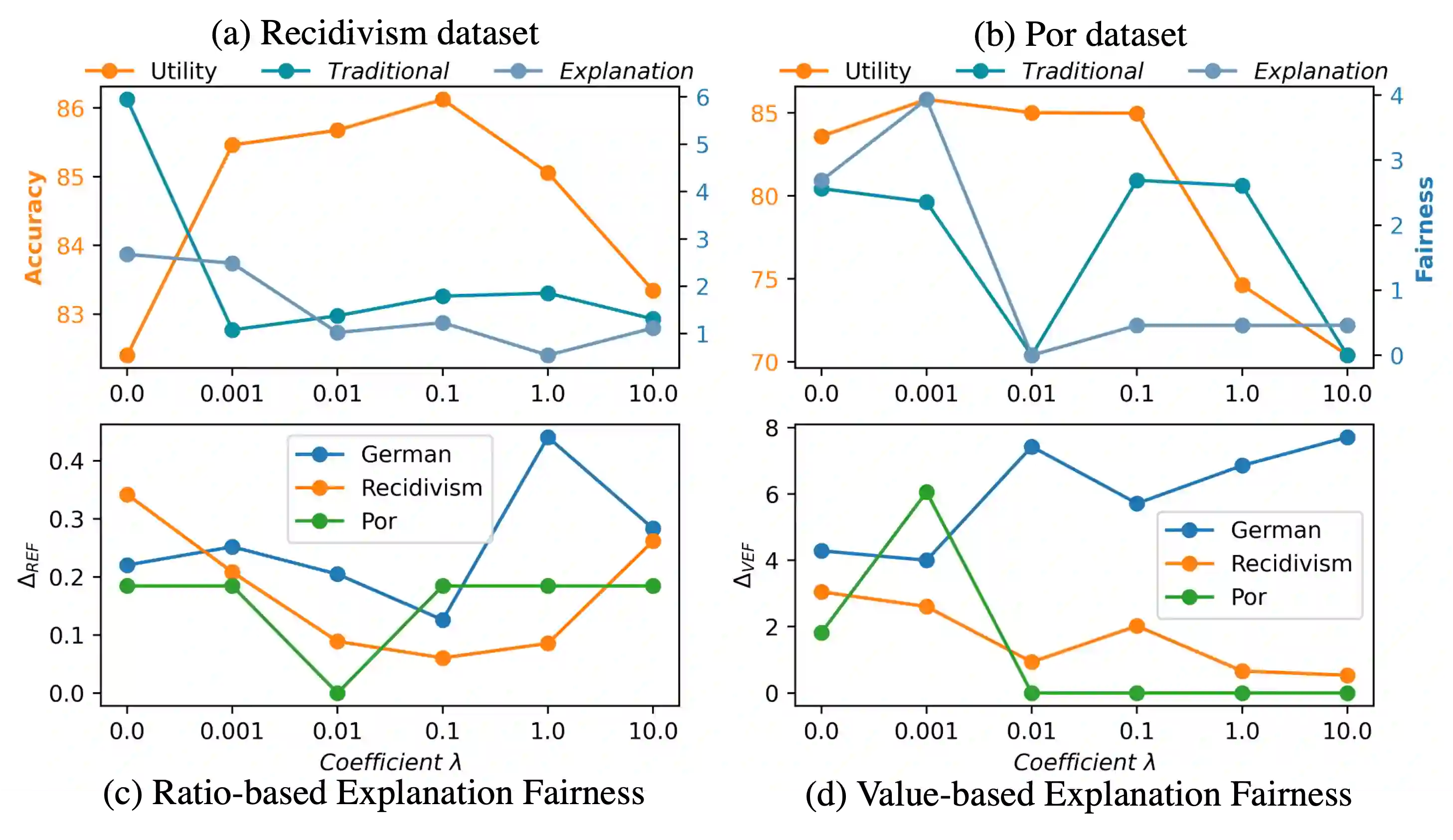

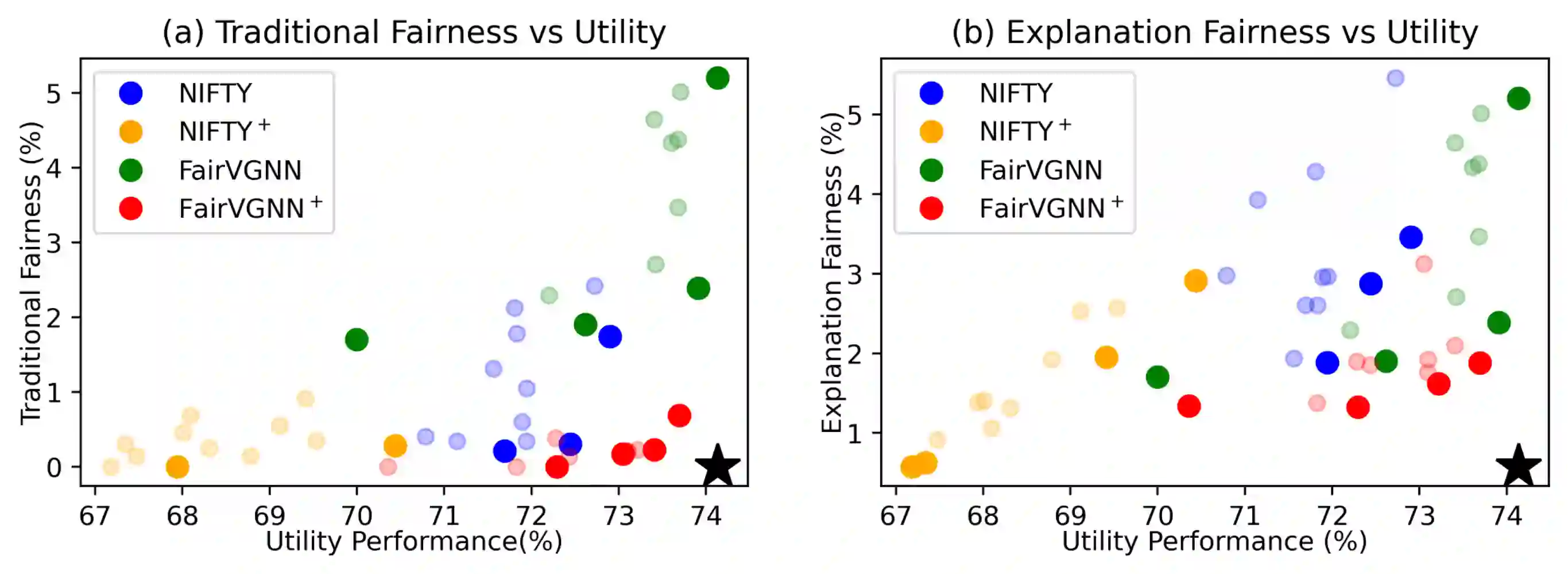

While machine learning models have achieved unprecedented success in real-world applications, they may make biased or unfair decisions for specific demographic groups and thereby produce discriminatory outcomes. Although research efforts have been devoted to measuring and mitigating bias, they mainly study bias from a result-oriented perspective while neglecting the bias encoded in the decision-making procedure. As a result, they cannot capture procedure-oriented bias, which limits their ability to debias models fully. Fortunately, with the rapid development of explainable machine learning, explanations for predictions are now available to provide insight into that procedure. In this work, we bridge the gap between fairness and explainability by presenting a novel perspective of procedure-oriented fairness based on explanations. We identify procedure-based bias by measuring the gap in explanation quality between different groups with Ratio-based and Value-based Explanation Fairness. These new metrics further motivate us to design an optimization objective to mitigate procedure-based bias, and we observe that this objective also mitigates bias in the predictions. Based on this objective, we propose a Comprehensive Fairness Algorithm (CFA), which simultaneously fulfills multiple objectives: improving traditional fairness, satisfying explanation fairness, and maintaining utility performance. Extensive experiments on real-world datasets demonstrate the effectiveness of CFA and highlight the importance of considering fairness from the explainability perspective. Our code is publicly available at https://github.com/YuyingZhao/FairExplanations-CFA.
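To make the contrast between result-oriented and procedure-oriented bias concrete, the following Python sketch computes a traditional group gap on predictions (statistical parity) alongside group gaps in explanation quality. It assumes per-instance explanation-quality scores (for example, fidelity values from some explainer) are already available. The functions `ratio_explanation_gap` and `value_explanation_gap` are hypothetical readings of Ratio-based and Value-based Explanation Fairness (the gap in the share of explanations above an assumed quality `threshold`, and the gap in mean quality); the paper's exact definitions may differ. `combined_objective` is likewise a generic weighted sum with hypothetical weights `alpha` and `beta`, not the CFA training objective.

```python
import numpy as np


def group_gap(values, groups):
    """Absolute difference of the mean of `values` between group 0 and group 1."""
    values = np.asarray(values, dtype=float)
    groups = np.asarray(groups)
    g0, g1 = values[groups == 0], values[groups == 1]
    if g0.size == 0 or g1.size == 0:
        raise ValueError("both groups must be non-empty")
    return abs(g0.mean() - g1.mean())


def statistical_parity_gap(y_pred, groups):
    # Result-oriented fairness: gap in positive-prediction rates between groups.
    return group_gap(np.asarray(y_pred) == 1, groups)


def ratio_explanation_gap(quality, groups, threshold):
    # HYPOTHETICAL ratio-based gap: difference in the share of instances whose
    # explanation quality is at least `threshold` (an assumed cutoff).
    high_quality = np.asarray(quality, dtype=float) >= threshold
    return group_gap(high_quality, groups)


def value_explanation_gap(quality, groups):
    # HYPOTHETICAL value-based gap: difference in mean explanation quality.
    return group_gap(quality, groups)


def combined_objective(utility_loss, fairness_gap, explanation_gap, alpha=1.0, beta=1.0):
    # Generic weighted sum of utility, traditional fairness, and explanation
    # fairness terms; `alpha` and `beta` are hypothetical trade-off weights.
    return utility_loss + alpha * fairness_gap + beta * explanation_gap


if __name__ == "__main__":
    y_pred = [1, 0, 1, 1, 0, 0]
    groups = [0, 0, 0, 1, 1, 1]
    quality = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]  # toy explanation-quality scores
    print("statistical parity gap:", statistical_parity_gap(y_pred, groups))
    print("ratio-based explanation gap:", ratio_explanation_gap(quality, groups, 0.5))
    print("value-based explanation gap:", value_explanation_gap(quality, groups))
```

Keeping the explanation gaps separate from the prediction gap mirrors the abstract's distinction between the two kinds of bias: a model can look fair on its outputs while its explanations are systematically worse for one group, and only the explanation-based measures would reveal that.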

翻译:虽然机器学习模式在现实世界应用方面取得了前所未有的成功,但它们可能会为特定人口群体作出偏向/不公平的决定,从而产生歧视性结果。虽然研究努力致力于衡量和减少偏向,但主要是从注重结果的角度研究偏向,而忽视了决策程序中编码的偏向,这导致他们无法捕捉以程序为导向的偏向,从而限制了完全降低偏见的方法。幸运的是,随着可解释的机器学习的迅速发展,现在可以对预测作出解释,以获得对程序的洞察力。在这项工作中,我们通过提出基于解释的程序偏向公平的新视角,弥合公平和可解释性之间的差距。我们通过衡量基于比率和基于价值的解释公平性的不同群体之间在解释质量方面的差距,确定基于程序上的偏向性。新的指标进一步激励我们设计一个优化目标,以缓解基于程序的偏向性方法的偏向,因为我们认为它也会减轻来自预测的偏向性。根据我们设计的优化目标,我们提出了全面公平性Algoiththimicality(CFAFA),同时提出一种基于基于解释程序偏向公平性原则的新观点的新的公平性目标,即提高传统公平性,并反映我们目前现有的公平性,从可公开性原则的角度说明我们现有的公平性。