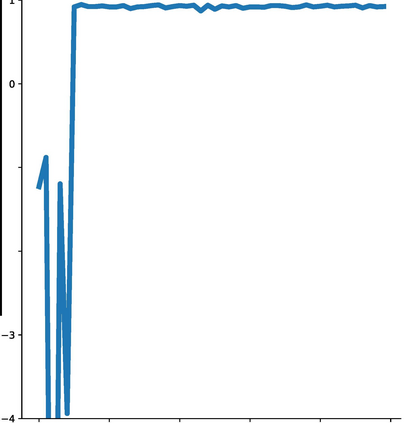

Experience replay enables off-policy reinforcement learning (RL) agents to reuse past experiences to maximize the cumulative reward. Prioritized experience replay, which weights experiences by the magnitude of their temporal-difference error ($|\text{TD}|$), significantly improves learning efficiency. However, how $|\text{TD}|$ relates to the importance of an experience is not well understood. We address this problem from an economic perspective by linking $|\text{TD}|$ to the value of experience, defined as the value added to the cumulative reward by accessing the experience. We show theoretically that the value metrics of experience are upper-bounded by $|\text{TD}|$ for Q-learning. Furthermore, we extend our theoretical framework to maximum-entropy RL by deriving lower and upper bounds of these value metrics for soft Q-learning, which turn out to be the product of $|\text{TD}|$ and the "on-policyness" of the experiences. Our framework thus links two important quantities in RL: $|\text{TD}|$ and the value of experience. We show empirically that the bounds hold in practice, and that experience replay using the upper bound as priority improves maximum-entropy RL in Atari games.
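To make the quantities named in the abstract concrete, the following is a minimal Python sketch of $|\text{TD}|$-based priorities for tabular Q-learning and of a "$|\text{TD}|$ times on-policyness" priority for soft Q-learning. It is an illustration under stated assumptions, not the authors' implementation: the function names, the tabular setting, the exponent `alpha`, the constant `eps`, the `temperature`, and the reading of "on-policyness" as the probability $\pi(a \mid s)$ of the stored action under a softmax policy are all assumptions introduced here.

```python
import numpy as np


def td_errors(q, transitions, gamma=0.99):
    """|TD| for tabular Q-learning: |r + gamma * max_a' Q(s', a') - Q(s, a)|."""
    errs = []
    for s, a, r, s_next, done in transitions:
        target = r if done else r + gamma * np.max(q[s_next])
        errs.append(abs(target - q[s, a]))
    return np.array(errs)


def softmax_policy(q_row, temperature=1.0):
    """Softmax policy over one row of Q-values (assumed policy form)."""
    z = (q_row - np.max(q_row)) / temperature  # shift for numerical stability
    p = np.exp(z)
    return p / p.sum()


def soft_priorities(q, transitions, gamma=0.99, temperature=1.0):
    """Hypothetical priority: |soft TD| * pi(a|s).

    Assumption: "on-policyness" is taken to be pi(a|s) under the softmax policy.
    """
    pri = []
    for s, a, r, s_next, done in transitions:
        # Soft state value: temperature * logsumexp(Q(s', .) / temperature).
        m = np.max(q[s_next])
        v_next = m + temperature * np.log(
            np.sum(np.exp((q[s_next] - m) / temperature))
        )
        target = r if done else r + gamma * v_next
        td = abs(target - q[s, a])
        pri.append(td * softmax_policy(q[s], temperature)[a])
    return np.array(pri)


def sampling_probs(priorities, alpha=0.6, eps=1e-6):
    """Turn priorities into replay sampling probabilities (alpha, eps assumed)."""
    p = (priorities + eps) ** alpha
    return p / p.sum()


# Toy example: two states, two actions, Q initialised to zero.
q = np.zeros((2, 2))
transitions = [
    (0, 0, 1.0, 1, False),  # (state, action, reward, next_state, done)
    (0, 1, 0.0, 1, True),
]
td = td_errors(q, transitions)
probs = sampling_probs(td)
soft = soft_priorities(q, transitions)
```

With these probabilities, a replay batch could be drawn with `np.random.default_rng().choice(len(probs), size=batch_size, p=probs)`, where `batch_size` is whatever the training loop uses. The transition with the larger $|\text{TD}|$ is sampled more often, and in the soft variant a large $|\text{TD}|$ is discounted when the stored action is unlikely under the current policy.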
