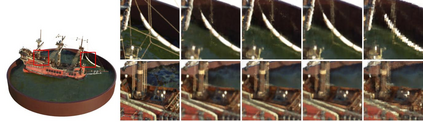

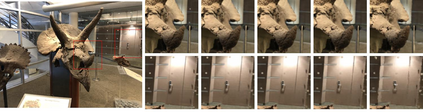

Recent work on Neural Radiance Fields (NeRF) showed how neural networks can be used to encode complex 3D environments that can be rendered photorealistically from novel viewpoints. Rendering these images is very computationally demanding, and recent improvements are still a long way from enabling interactive rates, even on high-end hardware. Motivated by scenarios on mobile and mixed reality devices, we propose FastNeRF, the first NeRF-based system capable of rendering high-fidelity photorealistic images at 200 Hz on a high-end consumer GPU. The core of our method is a graphics-inspired factorization that allows for (i) compactly caching a deep radiance map at each position in space and (ii) efficiently querying that map using ray directions to estimate the pixel values in the rendered image. Extensive experiments show that the proposed method is 3000 times faster than the original NeRF algorithm and at least an order of magnitude faster than existing work on accelerating NeRF, while maintaining visual quality and extensibility.
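
The two-step factorization described above (cache per position, then query by ray direction) can be illustrated with a small sketch. Everything concrete here is an assumption made for illustration: the names `position_net`, `direction_net`, `build_caches`, and `query`, the component count `D`, the grid sizes, the random stand-ins for trained networks, and the choice to combine the two caches with a weighted sum. The abstract itself does not specify any of these details.

```python
# Hypothetical sketch of a position/direction factorization with caching.
# All names, sizes, and the weighted-sum combination are illustrative
# assumptions; random numbers stand in for trained networks.
import random

D = 4  # assumed number of components stored per cached position


def position_net(voxel):
    """Stand-in for a position-dependent network: density plus D RGB components."""
    ix, iy, iz = voxel
    rng = random.Random(ix * 10007 + iy * 101 + iz)
    sigma = rng.random()
    components = [(rng.random(), rng.random(), rng.random()) for _ in range(D)]
    return sigma, components


def direction_net(dir_bin):
    """Stand-in for a direction-dependent network: D mixing weights."""
    rng = random.Random(1_000_003 + dir_bin)
    return [rng.random() for _ in range(D)]


def build_caches(grid_size, num_dir_bins):
    """Evaluate each stand-in network once per grid cell and store the result."""
    pos_cache = {
        (ix, iy, iz): position_net((ix, iy, iz))
        for ix in range(grid_size)
        for iy in range(grid_size)
        for iz in range(grid_size)
    }
    dir_cache = {b: direction_net(b) for b in range(num_dir_bins)}
    return pos_cache, dir_cache


def query(pos_cache, dir_cache, voxel, dir_bin):
    """Combine two cache lookups into a density and an RGB value."""
    sigma, components = pos_cache[voxel]
    weights = dir_cache[dir_bin]
    rgb = tuple(
        sum(w * comp[channel] for w, comp in zip(weights, components))
        for channel in range(3)
    )
    return sigma, rgb


pos_cache, dir_cache = build_caches(grid_size=8, num_dir_bins=16)
sigma, rgb = query(pos_cache, dir_cache, voxel=(1, 2, 3), dir_bin=5)
print(len(pos_cache) + len(dir_cache), "cached entries instead of",
      len(pos_cache) * len(dir_cache))
```

The point of the sketch is the storage count: caching positions and directions separately needs one entry per position plus one per direction, whereas caching an unfactorized function of both would need one entry per position-direction pair.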

Related content

- Today (iOS and OS X): widgets for the Today view of Notification Center

- Share (iOS and OS X): post content to web services or share content with others

- Actions (iOS and OS X): app extensions to view or manipulate content inside another app

- Photo Editing (iOS): edit a photo or video in Apple's Photos app with extensions from third-party apps

- Finder Sync (OS X): remote file storage in the Finder with support for Finder content annotation

- Storage Provider (iOS): an interface between files inside an app and other apps on a user's device

- Custom Keyboard (iOS): system-wide alternative keyboards

Source: iOS 8 Extensions: Apple’s Plan for a Powerful App Ecosystem