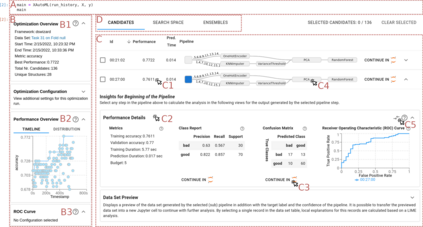

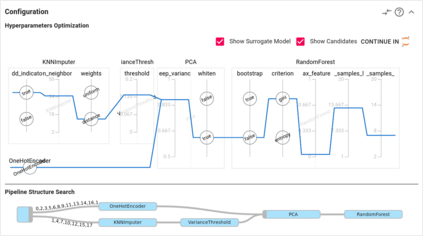

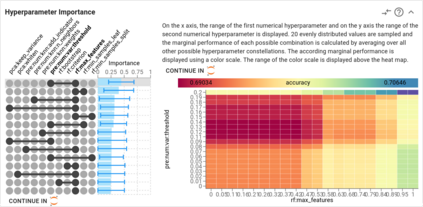

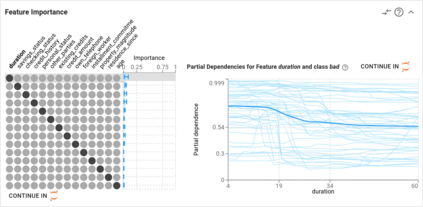

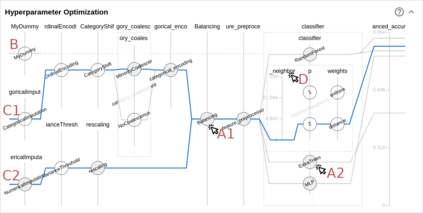

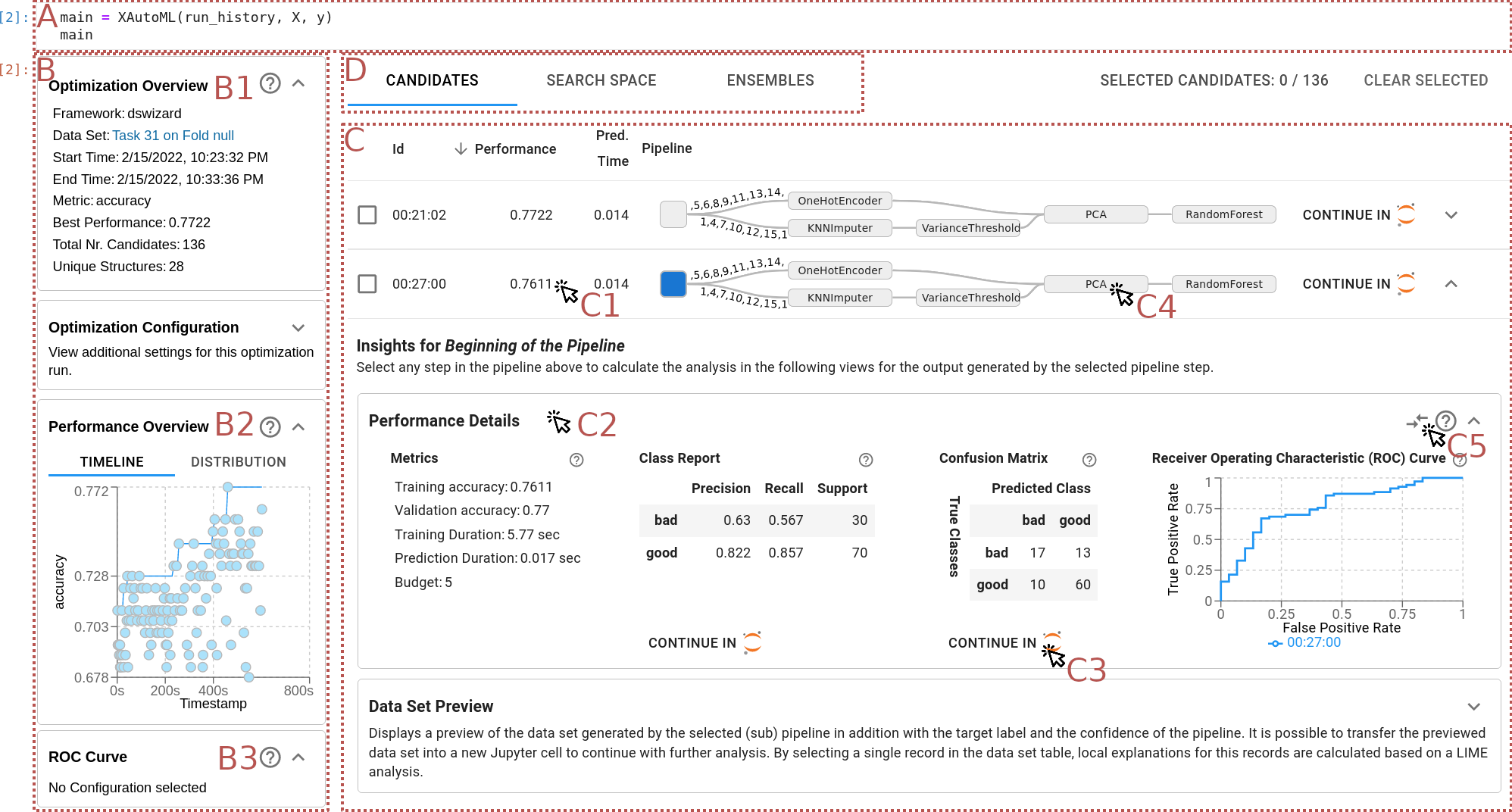

In the last ten years, various automated machine learning (AutoML) systems have been proposed to build end-to-end machine learning (ML) pipelines with minimal human interaction. Although such automatically synthesized ML pipelines can achieve competitive performance, recent studies have shown that users do not trust models constructed by AutoML, because AutoML systems lack transparency and the constructed ML pipelines come without explanations. In a requirements analysis study with 26 domain experts, data scientists, and AutoML researchers from different professions and with vastly different expertise in ML, we collect the detailed informational needs for establishing trust in AutoML. We propose XAutoML, an interactive visual analytics tool for explaining arbitrary AutoML optimization procedures and the ML pipelines constructed by AutoML. XAutoML combines interactive visualizations with established techniques from explainable artificial intelligence (XAI) to make the complete AutoML procedure transparent and explainable. Because XAutoML is integrated with JupyterLab, experienced users can extend the visual analytics with ad-hoc visualizations based on information extracted from XAutoML. We validate our approach in a user study with the same diverse user group from the requirements analysis. All participants were able to extract useful information from XAutoML, leading to significantly increased trust in the ML pipelines produced by AutoML and in the AutoML optimization itself.