一文详解bundle adjustment

点击上方“计算机视觉life”,选择“星标”

快速获得最新干货

本文转自3D视觉工坊

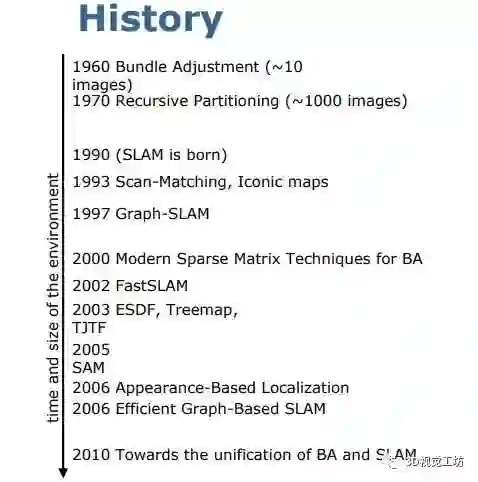

bundle adjustment 的历史发展

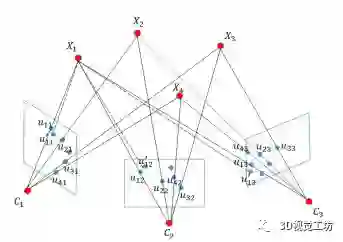

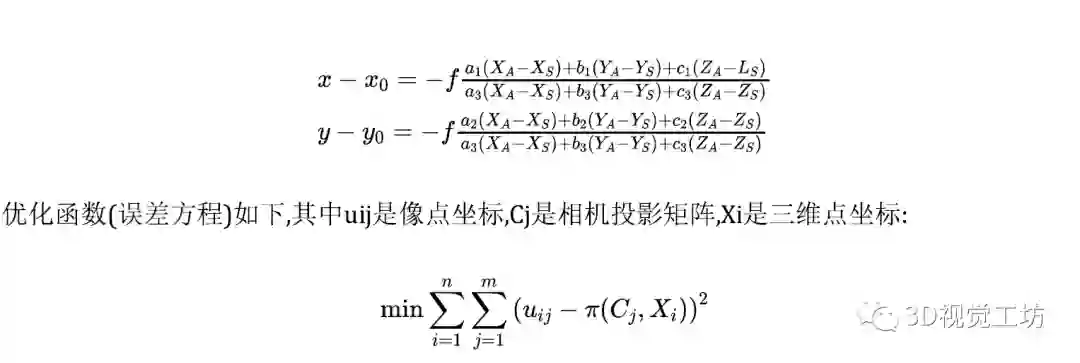

Bundle adjustment 的算法理论

四种Bundle adjustment 算法代码

-

优化相机内参及畸变系数,相机的pose(6dof)和landmark 代价函数写法如下:

template <typename CameraModel>

class BundleAdjustmentCostFunction {

public:

explicit BundleAdjustmentCostFunction(const Eigen::Vector2d& point2D)

: observed_x_(point2D(0)), observed_y_(point2D(1)) {}

//构造函数传入的是观测值

static ceres::CostFunction* Create(const Eigen::Vector2d& point2D) {

return (new ceres::AutoDiffCostFunction<

BundleAdjustmentCostFunction<CameraModel>, 2, 4, 3, 3,

CameraModel::kNumParams>(

new BundleAdjustmentCostFunction(point2D)));

}

//优化量:2代表误差方程个数;4代表pose中的姿态信息,用四元数表示;3代表pose中的位置信息;3代表landmark

自由度;CameraModel::kNumParams是相机内参和畸变系数,其取决于相机模型是what

// opertator 重载函数的参数即是待优化的量

template <typename T>

bool operator()(const T* const qvec, const T* const tvec,

const T* const point3D, const T* const camera_params,

T* residuals) const {

// Rotate and translate.

T projection[3];

ceres::UnitQuaternionRotatePoint(qvec, point3D, projection);

projection[0] += tvec[0];

projection[1] += tvec[1];

projection[2] += tvec[2];

// Project to image plane.

projection[0] /= projection[2];

projection[1] /= projection[2];

// Distort and transform to pixel space.

CameraModel::WorldToImage(camera_params, projection[0], projection[1],

&residuals[0], &residuals[1]);

// Re-projection error.

residuals[0] -= T(observed_x_);

residuals[1] -= T(observed_y_);

return true;

}

private:

const double observed_x_;

const double observed_y_;

};

ceres::Problem problem;

ceres::CostFunction* cost_function = nullptr;

ceres::LossFunction * p_LossFunction =

ceres_options_.bUse_loss_function_ ?

new ceres::HuberLoss(Square(4.0))

: nullptr; // 关于为何使用损失函数,因为现实中并不是所有观测过程中的噪声都服从

//gaussian noise的(或者可以说几乎没有),

//遇到有outlier的情况,这些方法非常容易挂掉,

//这时候就得用到robust statistics里面的

//robust cost(*cost也可以叫做loss, 统计学那边喜欢叫risk) function了,

//比较常用的有huber, cauchy等等。

cost_function = BundleAdjustmentCostFunction<CameraModel>::Create(point2D.XY());

//将优化量加入残差块

problem_->AddResidualBlock(cost_function, p_LossFunction, qvec_data,

tvec_data, point3D.XYZ().data(),

camera_params_data);

-

优化相机内参及畸变系数,pose subset parameterization(pose 信息部分参数优化)和3D landmark,当 只优化姿态信息时候,problem需要添加的代码如下:

//这里姿态又用欧拉角表示

map_poses[indexPose] = {angleAxis[0], angleAxis[1], angleAxis[2], t(0), t(1), t(2)};

double * parameter_block = &map_poses.at(indexPose)[0];

problem.AddParameterBlock(parameter_block, 6);

std::vector<int> vec_constant_extrinsic;

vec_constant_extrinsic.insert(vec_constant_extrinsic.end(), {3,4,5});

if (!vec_constant_extrinsic.empty())

{

// 主要用到ceres的SubsetParameterization函数

ceres::SubsetParameterization *subset_parameterization =

new ceres::SubsetParameterization(6, vec_constant_extrinsic);

problem.SetParameterization(parameter_block, subset_parameterization);

}

-

优化相机内参及畸变系数,pose subset parameterization(pose 信息部分参数优化)和3D landmark,当 只优化位置信息时候,problem需要添加的代码如下(同上面代码,只需修改一行):

//这里姿态又用欧拉角表示

map_poses[indexPose] = {angleAxis[0], angleAxis[1], angleAxis[2], t(0), t(1), t(2)};

double * parameter_block = &map_poses.at(indexPose)[0];

problem.AddParameterBlock(parameter_block, 6);

std::vector<int> vec_constant_extrinsic;

vec_constant_extrinsic.insert(vec_constant_extrinsic.end(), {1,2,3});

if (!vec_constant_extrinsic.empty())

{

ceres::SubsetParameterization *subset_parameterization =

new ceres::SubsetParameterization(6, vec_constant_extrinsic);

problem.SetParameterization(parameter_block, subset_parameterization);

}

-

优化相机内参及畸变系数,pose 是常量不优化 和3D landmark. 代价函数写法如下:

//相机模型

template <typename CameraModel>

class BundleAdjustmentConstantPoseCostFunction {

public:

BundleAdjustmentConstantPoseCostFunction(const Eigen::Vector4d& qvec,

const Eigen::Vector3d& tvec,

const Eigen::Vector2d& point2D)

: qw_(qvec(0)),

qx_(qvec(1)),

qy_(qvec(2)),

qz_(qvec(3)),

tx_(tvec(0)),

ty_(tvec(1)),

tz_(tvec(2)),

observed_x_(point2D(0)),

observed_y_(point2D(1)) {}

static ceres::CostFunction* Create(const Eigen::Vector4d& qvec,

const Eigen::Vector3d& tvec,

const Eigen::Vector2d& point2D) {

return (new ceres::AutoDiffCostFunction<

BundleAdjustmentConstantPoseCostFunction<CameraModel>, 2, 3,

CameraModel::kNumParams>(

new BundleAdjustmentConstantPoseCostFunction(qvec, tvec, point2D)));

}

template <typename T>

bool operator()(const T* const point3D, const T* const camera_params,

T* residuals) const {

const T qvec[4] = {T(qw_), T(qx_), T(qy_), T(qz_)};

// Rotate and translate.

T projection[3];

ceres::UnitQuaternionRotatePoint(qvec, point3D, projection);

projection[0] += T(tx_);

projection[1] += T(ty_);

projection[2] += T(tz_);

// Project to image plane.

projection[0] /= projection[2];

projection[1] /= projection[2];

// Distort and transform to pixel space.

CameraModel::WorldToImage(camera_params, projection[0], projection[1],

&residuals[0], &residuals[1]);

// Re-projection error.

residuals[0] -= T(observed_x_);

residuals[1] -= T(observed_y_);

return true;

}

private:

const double qw_;

const double qx_;

const double qy_;

const double qz_;

const double tx_;

const double ty_;

const double tz_;

const double observed_x_;

const double observed_y_;

};

ceres::Problem problem;

ceres::CostFunction* cost_function = nullptr;

ceres::LossFunction * p_LossFunction =

ceres_options_.bUse_loss_function_ ?

new ceres::HuberLoss(Square(4.0))

: nullptr; // 关于为何使用损失函数,因为现实中并不是所有观测过程中的噪声都服从

//gaussian noise的(或者可以说几乎没有),

//遇到有outlier的情况,这些方法非常容易挂掉,

//这时候就得用到robust statistics里面的

//robust cost(*cost也可以叫做loss, 统计学那边喜欢叫risk) function了,

//比较常用的有huber, cauchy等等。

cost_function = BundleAdjustmentConstantPoseCostFunction<CameraModel>::Create( \

image.Qvec(), image.Tvec(), point2D.XY());//观测值输入

//将优化量加入残差块

problem_->AddResidualBlock(cost_function, loss_function, \

point3D.XYZ().data(), camera_params_data);//被优化量加入残差-3D点和相机内参

参考资料

-

colmap openmvg 源代码,github 地址: https://github.com/openMVG/openMVG https://github.com/colmap/colmap -

单杰. 光束法平差简史与概要. 武汉大学学报·信息科学版, 2018, 43(12): 1797-1810.

从0到1学习SLAM,戳↓

交流群

欢迎加入公众号读者群一起和同行交流,目前有SLAM、三维视觉、传感器、自动驾驶、计算摄影、检测、分割、识别、医学影像、GAN、算法竞赛等微信群(以后会逐渐细分),请扫描下面微信号加群,备注:”昵称+学校/公司+研究方向“,例如:”张三 + 上海交大 + 视觉SLAM“。请按照格式备注,否则不予通过。添加成功后会根据研究方向邀请进入相关微信群。请勿在群内发送广告,否则会请出群,谢谢理解~

投稿、合作也欢迎联系:simiter@126.com

扫描关注视频号,看最新技术落地及开源方案视频秀 ↓

登录查看更多