Blog | A Roundup of Papers on SLU (Intent Detection, Slot Filling, Contextual LU, Structural LU) and NLG

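This article was originally published on the WeChat official account AI部落联盟 (AI_Tribe) and is republished by AI研习社 with permission. You can follow the AI部落联盟 WeChat account, the Zhihu column AI部落, and the AI研习社 blog column for more.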


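Quite a few people have asked me for these paper links via Zhihu or WeChat, so I am posting them all here at once. Once compiled, I will also post paper lists on DST, DPL, and the use of transfer learning, reinforcement learning, memory networks, and GANs in dialogue systems. Stay tuned if you are interested.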

SLU

1. SLU – Domain/Intent Classification

1.1 SVM and MaxEnt

1.2 Deep belief nets (DBN)

Deep belief nets for natural language call-routing, Sarikaya et al., 2011

1.3 Deep convex networks (DCN)

Towards deeper understanding: Deep convex networks for semantic utterance classification, Tur et al., 2012

1.4 Extension to kernel-DCN

Use of kernel deep convex networks and end-to-end learning for spoken language understanding, Deng et al., 2012

1.5 RNN and LSTMs

Recurrent Neural Network and LSTM Models for Lexical Utterance Classification, Ravuri et al., 2015

1.6 RNN and CNNs

Sequential Short-Text Classification with Recurrent and Convolutional Neural Networks, Lee et al., NAACL 2016

2. SLU – Slot Filling

2.1 RNN for Slot Tagging

Bi-LSTMs and input sliding windows of n-grams

2.1.1 Recurrent neural networks for language understanding, Yao et al., Interspeech 2013

2.1.2 Using recurrent neural networks for slot filling in spoken language understanding, Mesnil et al., 2015
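The sliding-window input used by these RNN slot taggers can be sketched as follows. Each word is fed to the network together with its neighbours, and the window's word embeddings are concatenated before they enter the RNN. This is a minimal sketch under my own assumptions: the window size is odd, a hypothetical `<PAD>` token fills positions beyond the sentence edges, and the embedding lookup and the RNN itself are omitted.

```python
def context_windows(tokens, win=3, pad="<PAD>"):
    """Return, for every token, the `win` tokens centred on it.

    `pad` is a hypothetical padding symbol used beyond the sentence edges.
    """
    if win < 1 or win % 2 == 0:
        raise ValueError("win must be a positive odd number")
    half = win // 2
    padded = [pad] * half + list(tokens) + [pad] * half
    # Slice i of `padded` starts `half` positions before token i, so token i is centred.
    return [padded[i:i + win] for i in range(len(tokens))]


if __name__ == "__main__":
    sentence = ["show", "flights", "to", "boston"]
    for word, window in zip(sentence, context_windows(sentence, win=3)):
        print(word, window)
```

Each window would then be mapped to embeddings and concatenated, so every RNN step sees `win × embedding_dim` input features per word.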

2.1.3 Encoder-decoder networks

Leveraging Sentence-level Information with Encoder LSTM for Semantic Slot Filling, Kurata et al., EMNLP 2016

Attention-based encoder-decoder

Exploring the use of attention-based recurrent neural networks for spoken language understanding, Simonnet et al., 2015

2.2 Multi-task learning

2.2.1 Domain Adaptation of Recurrent Neural Networks for Natural Language Understanding, Jaech et al., Interspeech 2016

Joint Segmentation and Slot Tagging

2.2.2 Neural Models for Sequence Chunking, Zhai et al., AAAI 2017

Joint Semantic Frame Parsing

2.2.3 Slot filling and intent prediction in the same output sequence

Multi-Domain Joint Semantic Frame Parsing using Bi-directional RNN-LSTM, Hakkani-Tur et al., Interspeech 2016
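One way to place slot filling and intent prediction in a single output sequence, roughly in the spirit of Hakkani-Tur et al., is to append an end-of-sentence token to the input and use the intent label as that token's tag. A single sequence tagger then predicts both. The sketch below only builds and splits such training targets. The `<EOS>` symbol, the ATIS-style tag names, and the function names are illustrative assumptions, not taken from the paper's code.

```python
def joint_targets(tokens, slot_tags, intent, eos="<EOS>"):
    """Append an end-of-sentence token whose tag is the utterance intent."""
    if len(tokens) != len(slot_tags):
        raise ValueError("need exactly one slot tag per token")
    return list(tokens) + [eos], list(slot_tags) + [intent]


def split_prediction(predicted_tags):
    """Undo joint_targets: slot tags for the real tokens, intent from the last position."""
    return predicted_tags[:-1], predicted_tags[-1]


if __name__ == "__main__":
    inputs, targets = joint_targets(
        ["flights", "to", "boston"],
        ["O", "O", "B-toloc.city_name"],
        "atis_flight",
    )
    print(inputs)   # ['flights', 'to', 'boston', '<EOS>']
    print(targets)  # ['O', 'O', 'B-toloc.city_name', 'atis_flight']
    print(split_prediction(targets))
```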

2.2.4 Intent prediction and slot filling are performed in two branches

Attention-Based Recurrent Neural Network Models for Joint Intent Detection and Slot Filling, Liu and Lane, Interspeech 2016
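In the two-branch setup, the model shares one encoder and attaches two output heads: a per-token slot classifier and an utterance-level intent classifier, trained on the sum of their losses. The NumPy sketch below shows only that joint objective. It swaps Liu and Lane's attention mechanism for plain mean pooling, and it treats the encoder states `H` and the weight matrices as given inputs rather than a trained model.

```python
import numpy as np


def log_softmax(x, axis=-1):
    """Numerically stable log-softmax along `axis`."""
    x = x - x.max(axis=axis, keepdims=True)
    return x - np.log(np.exp(x).sum(axis=axis, keepdims=True))


def joint_loss(H, W_slot, W_intent, slot_ids, intent_id):
    """Sum of slot and intent negative log-likelihoods.

    H:        (T, d) shared encoder states, one row per token
    W_slot:   (d, n_slots) slot-branch weights (one prediction per token)
    W_intent: (d, n_intents) intent-branch weights (one prediction per utterance)
    """
    slot_logp = log_softmax(H @ W_slot)                    # (T, n_slots)
    intent_logp = log_softmax(H.mean(axis=0) @ W_intent)   # (n_intents,), mean pooling
    slot_nll = -slot_logp[np.arange(len(slot_ids)), slot_ids].sum()
    intent_nll = -intent_logp[intent_id]
    return float(slot_nll + intent_nll)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    H = rng.normal(size=(4, 8))  # stand-in for 4 encoded tokens
    loss = joint_loss(H, rng.normal(size=(8, 5)), rng.normal(size=(8, 3)),
                      slot_ids=[0, 0, 2, 4], intent_id=1)
    print(f"joint loss: {loss:.3f}")
```

Because both heads backpropagate into the same `H`, each task acts as a training signal for the other. This sharing is the motivation for the joint models listed here.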

3. Contextual LU

3.1 Context Sensitive Spoken Language Understanding using Role Dependent LSTM layers, Hori et al., 2015

3.2 E2E MemNN for Contextual LU

End-to-End Memory Networks with Knowledge Carryover for Multi-Turn Spoken Language Understanding, Chen et al., 2016
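The knowledge-carryover step can be summarised as attention over the encoded dialogue history. The current utterance encoding u scores each stored history vector m_i. The softmax-normalised weights p_i give a summary h = Σ p_i m_i. Finally, h + u is projected into a knowledge encoding that conditions the slot tagger. Below is a simplified NumPy sketch. It assumes the encodings are already computed (the paper learns them with neural encoders) and uses a single matrix `W_kg` for the final projection.

```python
import numpy as np


def knowledge_carryover(u, M, W_kg):
    """Attend over history memories; return (knowledge encoding, attention weights).

    u:    (d,) encoding of the current utterance
    M:    (n, d) encodings of the n previous utterances (the memory)
    W_kg: (k, d) projection to the knowledge encoding
    """
    scores = M @ u                       # inner-product match u^T m_i
    p = np.exp(scores - scores.max())    # numerically stable softmax
    p /= p.sum()
    h = p @ M                            # attention-weighted sum of memories
    return W_kg @ (h + u), p


if __name__ == "__main__":
    M = np.array([[1.0, 0.0], [0.0, 1.0]])   # two toy history vectors
    u = np.array([2.0, 0.0])                 # current turn resembles the first one
    o, p = knowledge_carryover(u, M, np.eye(2))
    print("attention:", p)
    print("knowledge encoding:", o)
```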

3.3 Sequential Dialogue Encoder Network

Sequential Dialogue Context Modeling for Spoken Language Understanding, Bapna et al., SIGDIAL 2017

4. Structural LU

4.1 K-SAN: prior knowledge as a teacher; sentence structural knowledge stored as memory

Knowledge as a Teacher: Knowledge-Guided Structural Attention Networks, Chen et al., 2016

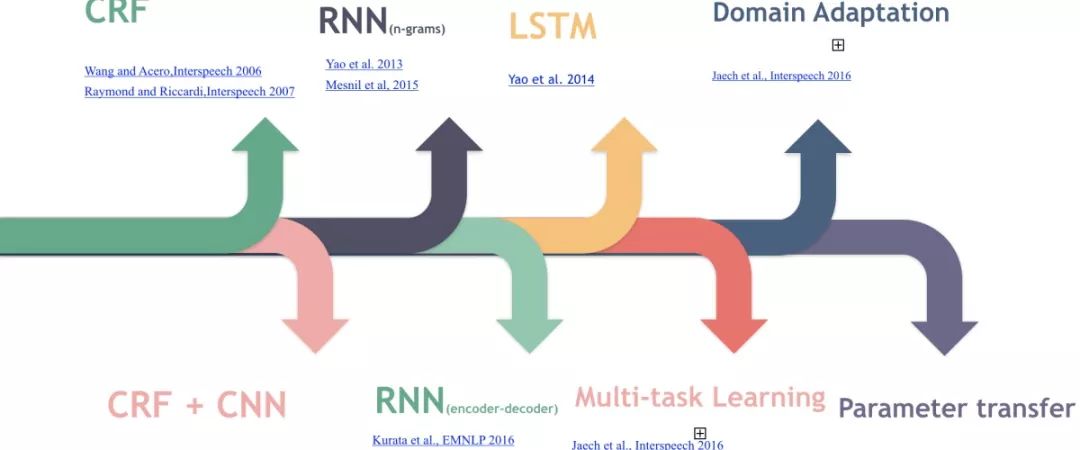

5. SL

CRF (Wang and Acero 2006; Raymond and Riccardi 2007):

Discriminative Models for Spoken Language Understanding, Wang and Acero, Interspeech 2006

Generative and discriminative algorithms for spoken language understanding, Raymond and Riccardi, Interspeech 2007

Convolutional neural network based triangular CRF for joint intent detection and slot filling, Puyang Xu and Ruhi Sarikaya
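At test time, a linear-chain CRF tagger picks the highest-scoring tag sequence by Viterbi decoding over per-token tag scores plus tag-to-tag transition scores. The sketch below covers decoding only. It assumes both score matrices are already given and omits feature extraction and CRF training.

```python
import numpy as np


def viterbi(emissions, transitions):
    """Best tag path for a linear-chain model.

    emissions:   (T, K) score of each of K tags at each of T positions
    transitions: (K, K) score of moving from tag i (row) to tag j (column)
    Returns (best tag indices, best total score).
    """
    T, K = emissions.shape
    score = emissions[0].copy()              # best score ending in each tag at t = 0
    backptr = np.zeros((T, K), dtype=int)
    for t in range(1, T):
        # cand[i, j]: best path ending in tag i at t-1, then tag j at t
        cand = score[:, None] + transitions + emissions[t][None, :]
        backptr[t] = cand.argmax(axis=0)
        score = cand.max(axis=0)
    best = [int(score.argmax())]
    for t in range(T - 1, 0, -1):            # follow back-pointers to recover the path
        best.append(int(backptr[t, best[-1]]))
    return best[::-1], float(score.max())


if __name__ == "__main__":
    emissions = np.array([[1.0, 0.0], [0.0, 2.0], [3.0, 0.0]])
    penalty = np.array([[0.0, -10.0], [0.0, 0.0]])  # discourage tag 0 -> tag 1
    print(viterbi(emissions, np.zeros((2, 2))))
    print(viterbi(emissions, penalty))
```

With zero transitions the path is just the per-token argmax. The transition matrix is what lets a CRF rule out inconsistent label sequences, such as an I- tag that does not follow a matching B- tag.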

RNN (Yao et al. 2013; Mesnil et al. 2013, 2015; Liu and Lane 2015):

Recurrent neural networks for language understanding, Yao et al., Interspeech 2013

Using recurrent neural networks for slot filling in spoken language understanding, Mesnil et al., 2015

Investigation of recurrent-neural-network architectures and learning methods for spoken language understanding, Mesnil et al., Interspeech 2013

Recurrent Neural Network Structured Output Prediction for Spoken Language Understanding, Liu and Lane, NIPS 2015

LSTM (Yao et al. 2014):

Spoken language understanding using long short-term memory neural networks, Yao et al., 2014

6. SL + TL

Instance-based transfer for SLU (Tur 2006):

Multitask learning for spoken language understanding, Gokhan Tur, IEEE, 2006

Model adaptation for SLU (Tür 2005):

Model adaptation for spoken language understanding, Gökhan Tür, ICASSP 2005, pages 41–44

Parameter transfer (Yazdani and Henderson):

A Model of Zero-Shot Learning of Spoken Language Understanding, Yazdani and Henderson

_____________________________________________________________________

NLG

Traditional

Template-Based NLG
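Template-based NLG maps each dialogue act to a hand-written sentence with slot placeholders. Below is a minimal Python sketch; the act names, slot names, and templates are hypothetical examples.

```python
# Hypothetical hand-written templates keyed by dialogue-act type.
TEMPLATES = {
    "inform": "{name} is a {food} restaurant in the {area}.",
    "request": "What {slot} would you like?",
    "confirm": "Did you say {value}?",
}


def realize(act, **slots):
    """Fill the template for `act`; raises KeyError if the act or a required slot is missing."""
    return TEMPLATES[act].format(**slots)


if __name__ == "__main__":
    print(realize("inform", name="Seven Days", food="Chinese", area="centre"))
    print(realize("request", slot="price range"))
```

Templates give full control over wording. The cost is that every new act or domain needs new hand-written templates, and the corpus-based and neural approaches listed below try to avoid that cost.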

Plan-Based NLG (Walker et al., 2002)

Class-Based LM NLG

Stochastic language generation for spoken dialogue systems, Oh and Rudnicky, NAACL 2000

Phrase-Based NLG

Phrase-based statistical language generation using graphical models and active learning, Mairesse et al., 2010

RNN-Based LM NLG

Stochastic Language Generation in Dialogue using Recurrent Neural Networks with Convolutional Sentence Reranking, Wen et al., SIGDIAL 2015

Semantically Conditioned LSTM

Semantically Conditioned LSTM-based Natural Language Generation for Spoken Dialogue Systems, Wen et al., EMNLP 2015
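The core of the SC-LSTM is a dialogue-act (DA) vector d_t, a 1-hot encoding of the act type and its slot-value pairs. A reading gate r_t = σ(W_wr w_t + α W_hr h_{t-1}) gradually switches d_t off as the corresponding information is generated: d_t = r_t ⊙ d_{t-1}. The sketch below shows only this DA-cell update for a single layer, with the weights passed in as assumed inputs. In the full model, d_t is also fed into the LSTM memory cell via tanh(W_dc d_t).

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def da_cell_step(d_prev, w_t, h_prev, W_wr, W_hr, alpha=0.5):
    """One DA-cell update: d_t = r_t * d_{t-1}, r_t = sigmoid(W_wr w_t + alpha * W_hr h_{t-1}).

    d_prev: (n_da,) DA vector; w_t: (n_in,) current input word vector;
    h_prev: (n_hid,) previous hidden state.
    """
    r_t = sigmoid(W_wr @ w_t + alpha * (W_hr @ h_prev))  # reading gate, values in (0, 1)
    return r_t * d_prev                                   # DA features can only decay


if __name__ == "__main__":
    d = np.array([1.0, 1.0, 0.0])
    # With all-zero weights the gate is 0.5 everywhere, so d halves at each step.
    for _ in range(3):
        d = da_cell_step(d, np.zeros(4), np.zeros(5), np.zeros((3, 4)), np.zeros((3, 5)))
    print(d)
```

Because r_t stays in (0, 1), a slot's DA feature can only shrink once it is mentioned. This is how the model keeps track of which slots remain to be realised.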

Structural NLG

Sequence-to-Sequence Generation for Spoken Dialogue via Deep Syntax Trees and Strings, Dušek and Jurčíček, ACL 2016

Contextual NLG

A Context-aware Natural Language Generator for Dialogue Systems, Dušek and Jurčíček, 2016

Controlled Text Generation

Toward Controlled Generation of Text, Hu et al., 2017

1. NLG-Traditional

Training a sentence planner for spoken dialogue using boosting, Marilyn A. Walker, Owen C. Rambow, and Monica Rogati

2. NLG-Corpus based

Stochastic language generation for spoken dialogue systems, Alice H. Oh and Alexander I. Rudnicky, 2000

Stochastic language generation in dialogue using factored language models, François Mairesse and Steve Young, 2014

3. NLG-Neural Network

Recurrent neural network based language model, Mikolov et al., Interspeech 2010

Extensions of recurrent neural network language model, Mikolov et al., ICASSP 2011

Stochastic language generation in dialogue using recurrent neural networks with convolutional sentence reranking, Wen et al., SIGDIAL 2015

Semantically conditioned LSTM-based natural language generation for spoken dialogue systems, Wen et al., EMNLP 2015

4. Transfer learning for NLG

Recurrent neural network based language model personalization by social network crowdsourcing, Wen et al., Interspeech 2013

Recurrent neural network language model adaptation with curriculum learning. Shi et al., 2015

Multi-domain neural network language generation for spoken dialogue systems. Wen et al., NAACL 2016
