mpc.pytorch: a model predictive control library

https://locuslab.github.io/mpc.pytorch/

Control is important!

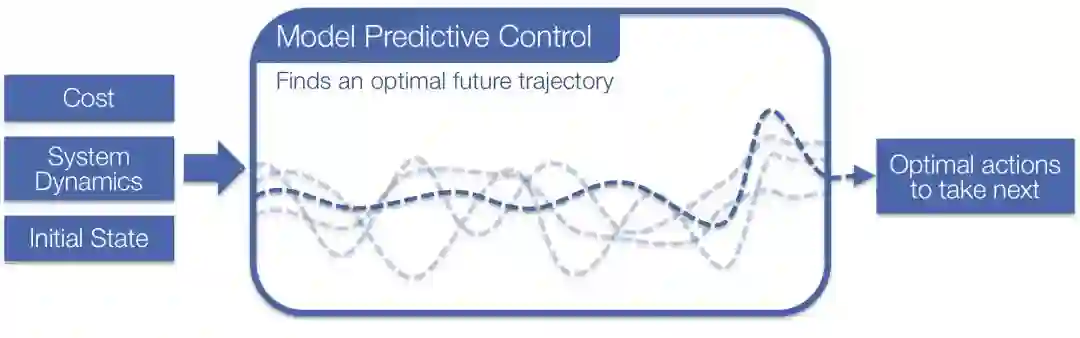

Optimal control is a widespread field that involves finding an optimal sequence of future actions to take in a system or environment. It is most useful in domains where you can analytically model your system and can easily define a cost to optimize over it. This project focuses on solving model predictive control (MPC) with the box-DDP heuristic. MPC is a powerhouse in many real-world domains, ranging from short-time-horizon robot control tasks to long-time-horizon control of chemical processing plants. More recently, the reinforcement learning community, rife with poor sample-complexity and instability issues in model-free learning, has been actively searching for useful model-based applications and priors.

Going deeper, model predictive control (MPC) is the strategy of controlling a system by repeatedly solving a model-based optimization problem in a receding horizon fashion. At each time step in the environment, MPC solves the non-convex optimization problem

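$$
\begin{aligned}
x^\star_{1:T}, u^\star_{1:T} = \operatorname*{argmin}_{x_{1:T} \in \mathcal{X},\; u_{1:T} \in \mathcal{U}} \quad & \sum_{t=1}^{T} C_t(x_t, u_t) \\
\text{subject to} \quad & x_{t+1} = f(x_t, u_t), \\
& x_1 = x_{\rm init},
\end{aligned}
$$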

where $x_t, u_t$ denote the state and control at time $t$, $\mathcal{X}$ and $\mathcal{U}$ denote constraints on valid states and controls, $C_t : \mathcal{X} \times \mathcal{U} \to \mathbb{R}$ is a (potentially time-varying) cost function, $f : \mathcal{X} \times \mathcal{U} \to \mathcal{X}$ is a (potentially non-linear) dynamics model, and $x_{\rm init}$ denotes the initial state of the system. After solving this problem, we execute the first returned control $u_1$ on the real system, step forward in time, and repeat the process. The MPC optimization problem can be solved efficiently with a number of methods, for example the finite-horizon iterative Linear Quadratic Regulator (iLQR) algorithm. We focus on the box-DDP heuristic, which adds control bounds to the problem.
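The receding-horizon loop described above can be sketched on a toy problem. The example below is a minimal illustration and not this library's solver: it assumes linear dynamics and an unconstrained quadratic cost, so each planning step reduces to a finite-horizon LQR solved by a backward Riccati recursion rather than iLQR or box-DDP. The names `lqr_gains` and `mpc_step`, the double-integrator system, and the cost weights are all hypothetical choices made for the example.

```python
import torch


def lqr_gains(A, B, Q, R, T):
    """Backward Riccati recursion for x' = A x + B u with stage cost x'Qx + u'Ru.

    Returns the feedback gains ordered from the first planning step to the last.
    """
    P = Q.clone()  # terminal cost-to-go
    gains = []
    for _ in range(T):
        # K = (R + B^T P B)^{-1} B^T P A
        K = torch.linalg.solve(R + B.T @ P @ B, B.T @ P @ A)
        P = Q + A.T @ P @ (A - B @ K)
        gains.append(K)
    return gains[::-1]


def mpc_step(x, A, B, Q, R, T):
    """Plan over a horizon of T steps from state x; return only the first control."""
    K_first = lqr_gains(A, B, Q, R, T)[0]
    return -K_first @ x


# Toy double integrator (position, velocity) with time step 0.1 (assumed values).
A = torch.tensor([[1.0, 0.1], [0.0, 1.0]])
B = torch.tensor([[0.0], [0.1]])
Q = torch.eye(2)
R = 0.1 * torch.eye(1)

x = torch.tensor([1.0, 0.0])
for t in range(100):
    u = mpc_step(x, A, B, Q, R, T=30)  # re-plan from the current state
    x = A @ x + B @ u                  # step the "real" system (here, the same model)

final_norm = x.norm().item()           # the state is driven toward the origin
```

Because this toy problem is time-invariant, re-planning returns the same gain at every step. The loop is written this way only to mirror the plan, execute, and repeat structure of MPC.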

Control in PyTorch has been painful before now

There has been an indisputable rise in control and model-based algorithms in the learning communities lately, and integrating these techniques with learning-based methods is important. PyTorch is a strong foundational Python library for implementing learning systems. Before our library, there was a significant barrier to integrating PyTorch learning systems with control methods. The appropriate data and tensors would have to be transferred to the CPU, converted to NumPy, and then passed into 1) one of the few Python control libraries, like python-control, 2) a hand-coded solver using CPLEX or Gurobi, or 3) your hand-rolled bindings to C/C++/MATLAB control libraries such as fast_mpc. All of these sound like fun!
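A small sketch of why that round trip is a barrier: once a tensor is detached and converted to NumPy, the autograd graph is cut, so nothing upstream can be trained through the solver. The multiplication by `0.5` below is only a stand-in for an external control solver, not a call into any real library.

```python
import torch

theta = torch.tensor([2.0, 3.0], requires_grad=True)
features = theta ** 2                      # some upstream, learnable computation

# Hand-off to an external NumPy-based solver: detach, move to CPU, convert.
features_np = features.detach().cpu().numpy()
solution_np = features_np * 0.5            # stand-in for the external solver
solution = torch.from_numpy(solution_np)   # convert the result back to a tensor

# The result no longer knows about theta, so it cannot be backpropagated through.
graph_is_cut = not solution.requires_grad

# Staying inside PyTorch keeps the graph, so gradients reach theta.
solution_torch = features * 0.5
solution_torch.sum().backward()
grad_theta = theta.grad                    # d/dtheta of sum(0.5 * theta^2) is theta
```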

This Library: A Differentiable PyTorch MPC Layer

We provide a PyTorch library for solving the non-convex control problem

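$$
\begin{aligned}
x^\star_{1:T}, u^\star_{1:T} = \operatorname*{argmin}_{x_{1:T} \in \mathcal{X},\; u_{1:T} \in \mathcal{U}} \quad & \sum_{t=1}^{T} C_t(x_t, u_t) \\
\text{subject to} \quad & x_{t+1} = f(x_t, u_t), \\
& x_1 = x_{\rm init},
\end{aligned}
$$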

Our code currently supports a quadratic cost function C (non-quadratic support coming soon!) and non-linear system transition dynamics f, which can be defined by hand if you understand your environment or by a neural network if you don’t.
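To make these ingredients concrete, here is a hedged sketch of a quadratic cost and of the two kinds of dynamics, written in plain PyTorch. Everything here is an assumption made for illustration: the cost convention `0.5 * tau^T C tau + c^T tau` with `tau = [x, u]`, the `forward(x, u)` signature, and the class names are not taken from this library's API.

```python
import torch
from torch import nn


def quadratic_cost(x, u, C, c):
    """Batched quadratic cost 0.5 * tau^T C tau + c^T tau with tau = [x, u]."""
    tau = torch.cat([x, u], dim=-1)
    return 0.5 * torch.einsum("bi,ij,bj->b", tau, C, tau) + tau @ c


class PendulumDynamics(nn.Module):
    """Hand-written dynamics: x = (angle, angular velocity), u = torque."""

    def __init__(self, dt=0.05, g=9.8, length=1.0):
        super().__init__()
        self.dt, self.g, self.length = dt, g, length

    def forward(self, x, u):
        th, dth = x[:, 0], x[:, 1]
        ddth = -(self.g / self.length) * torch.sin(th) + u[:, 0]
        # One explicit Euler step.
        return torch.stack([th + self.dt * dth, dth + self.dt * ddth], dim=-1)


class LearnedDynamics(nn.Module):
    """Neural-network dynamics with the same (x, u) -> x_next signature."""

    def __init__(self, n_state, n_ctrl, hidden=32):
        super().__init__()
        self.net = nn.Sequential(
            nn.Linear(n_state + n_ctrl, hidden), nn.Tanh(), nn.Linear(hidden, n_state)
        )

    def forward(self, x, u):
        # Predict a residual on top of the current state.
        return x + self.net(torch.cat([x, u], dim=-1))


x = torch.zeros(4, 2)
u = torch.ones(4, 1)
cost = quadratic_cost(x, u, torch.eye(3), torch.zeros(3))   # 0.5 per batch element
x_next_hand = PendulumDynamics()(x, torch.zeros(4, 1))      # rest state stays at rest
x_next_learned = LearnedDynamics(n_state=2, n_ctrl=1)(x, u)
```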

Our library is fast

We have baked in a lot of tricks to optimize the performance. Our CPU runtime is competitive with other solvers and our library shines brightly on the GPU as we have implemented it with efficient GPU-based PyTorch operations. This lets us solve many MPC problems simultaneously on the GPU with minimal overhead.
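As a rough illustration of what solving many problems at once looks like, the sketch below runs a Riccati recursion over a whole batch of independent linear-quadratic problems using batched matrix operations. It is a simplified stand-in under assumed problem data, not the library's implementation, and `batched_lqr_gains` is a hypothetical name.

```python
import torch


def batched_lqr_gains(A, B, Q, R, T):
    """First-step LQR gains for N independent problems solved together.

    Shapes: A (N, n, n), B (N, n, m), Q (N, n, n), R (N, m, m) -> K (N, m, n).
    """
    At, Bt = A.transpose(-1, -2), B.transpose(-1, -2)
    P = Q.clone()
    for _ in range(T):
        # One batched linear solve handles all N problems.
        K = torch.linalg.solve(R + Bt @ P @ B, Bt @ P @ A)
        P = Q + At @ P @ (A - B @ K)
    return K


device = "cuda" if torch.cuda.is_available() else "cpu"
N, n, m = 128, 4, 2
torch.manual_seed(0)

# Random problem data, chosen only for the example.
A = torch.eye(n, device=device).repeat(N, 1, 1) + 0.1 * torch.randn(N, n, n, device=device)
B = torch.randn(N, n, m, device=device)
Q = torch.eye(n, device=device).repeat(N, 1, 1)
R = torch.eye(m, device=device).repeat(N, 1, 1)

K = batched_lqr_gains(A, B, Q, R, T=20)   # one gain matrix per problem
```

The same code runs on the CPU or the GPU. Only the `device` of the input tensors changes.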

Internally we solve a sequence of quadratic programs

More details on this are in the box-DDP paper that we implement.
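For intuition about the kind of subproblem involved, a box-constrained quadratic program minimizes `0.5 * u^T H u + g^T u` subject to `lower <= u <= upper`. The sketch below solves one with projected gradient descent, a deliberately simpler stand-in and not the solver from the box-DDP paper. The function name and the problem data are hypothetical.

```python
import torch


def box_qp_projected_gradient(H, g, lower, upper, iters=500):
    """Minimize 0.5 * u^T H u + g^T u subject to lower <= u <= upper (H positive definite)."""
    step = 1.0 / torch.linalg.eigvalsh(H).max()   # 1 / Lipschitz constant of the gradient
    u = torch.maximum(torch.minimum(torch.zeros_like(g), upper), lower)
    for _ in range(iters):
        grad = H @ u + g
        # Gradient step followed by projection onto the box.
        u = torch.maximum(torch.minimum(u - step * grad, upper), lower)
    return u


H = torch.tensor([[2.0, 0.0], [0.0, 2.0]])
g = torch.tensor([-4.0, 1.0])          # unconstrained minimizer is (2.0, -0.5)
lower = torch.tensor([-1.0, -1.0])
upper = torch.tensor([1.0, 1.0])

u_star = box_qp_projected_gradient(H, g, lower, upper)
# The first coordinate is clipped to its upper bound: u_star is (1.0, -0.5).
```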

Differentiable MPC as a Layer

Our MPC layer is also differentiable! You can do learning directly through it. The backwards pass is nearly free. More details on this are in our NIPS 2018 paper Differentiable MPC for End-to-end Planning and Control.

Differentiable MPC and fixed points

Sometimes the controller does not run for long enough to reach a fixed point, or a fixed point doesn’t exist, which often happens when using neural networks to approximate the dynamics. When this happens, our solver cannot be used to differentiate through the controller, because it assumes a fixed point happens. Differentiating through the final iLQR iterate that’s not a fixed point will usually give the wrong gradients. Treating the iLQR procedure as a compute graph and differentiating through the unrolled operations is a reasonable alternative in this scenario that obtains surrogate gradients to the control problem, but this is not currently implemented as an option in this library.
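The unrolling alternative can be illustrated on a scalar toy problem. In the sketch below, a hypothetical iterative solver takes gradient steps on `J(u) = 0.5 * (u - theta)^2`, whose fixed point is `u = theta` with derivative 1 with respect to `theta`. Stopping after five iterations and backpropagating through the unrolled updates gives the derivative of what was actually computed, which differs from the fixed-point value. This is an assumption-laden toy, not iLQR and not part of this library.

```python
import torch


def unrolled_solver(theta, n_iters, step=0.1):
    """Gradient descent on J(u) = 0.5 * (u - theta)^2, kept inside the autograd graph."""
    u = torch.zeros_like(theta)
    for _ in range(n_iters):
        u = u - step * (u - theta)   # each update is recorded by autograd
    return u


theta = torch.tensor(2.0, requires_grad=True)

# Truncated solve: far from the fixed point u = theta.
u_short = unrolled_solver(theta, n_iters=5)
(grad_short,) = torch.autograd.grad(u_short, theta)   # equals 1 - 0.9**5

# Long solve: close to the fixed point, so the unrolled gradient approaches 1.
u_long = unrolled_solver(theta, n_iters=200)
(grad_long,) = torch.autograd.grad(u_long, theta)
```

The cost of this approach is that memory grows with the number of unrolled iterations, since every intermediate iterate is kept for the backward pass.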

To help catch fixed-point differentiation errors, our solver has the options exit_unconverged that forcefully exits the program if a fixed-point is not hit (to make sure users are aware of this issue) and detach_unconverged that more silently detaches unconverged examples from the batch so that they are not differentiated through.
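The effect of detaching unconverged examples can be sketched in a few lines of PyTorch. This is only an illustration of the behavior described above, not the library's implementation. The helper name, the convergence mask, and the stand-in solver output are all hypothetical.

```python
import torch


def detach_unconverged_examples(u, converged):
    """Keep gradients only for converged batch elements.

    u: (N, m) per-example solutions; converged: (N,) boolean mask.
    """
    mask = converged.unsqueeze(-1)
    return torch.where(mask, u, u.detach())


theta = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)
u = (theta ** 2).unsqueeze(-1)                    # stand-in for solver output, shape (3, 1)
converged = torch.tensor([True, False, True])     # pretend the second example did not converge

detach_unconverged_examples(u, converged).sum().backward()
grad_theta = theta.grad   # (2, 0, 6): no gradient flows through the unconverged example
```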

Setup and Dependencies

Python/NumPy/PyTorch

You can set this project up manually by cloning the git repo or you can install it via pip with:

pip install mpc

Dynamics Jacobian Computation Modes
