Stanford CS224n (2019) Deep Learning for NLP: Course Videos and Materials

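Stanford University's new 2019 run of CS224n (CS224n: Natural Language Processing with Deep Learning, Stanford / Winter 2019) began in January, but the lecture videos were not made public at first. A few days ago the course finally uploaded the first 5 lectures to YouTube as the playlist "CS224n: Natural Language Processing with Deep Learning | Winter 2019".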

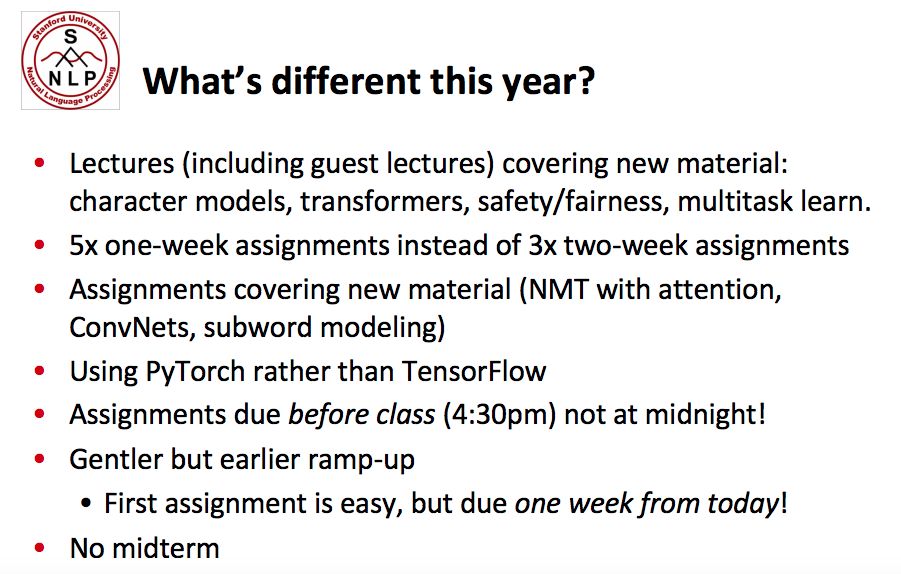

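This is an NLP course every NLPer should study. It is taught by the renowned Christopher Manning, is aimed at Stanford students, and has been offered at Stanford for many years. The 2019 edition brings many updates: besides some new material, the biggest change is probably that the course code has moved from TensorFlow to PyTorch.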

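Over the past few years, as deep learning and artificial intelligence have become widely popularized, NLP, often called the jewel in the crown of AI, has gained broad recognition, and many students have entered the field, some switching in from other areas. At the start of the first lecture video, Manning helps students find seats (apparently many were still standing) and jokes that during his first ten years of teaching natural language processing at Stanford, average enrollment was only about 45 students.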

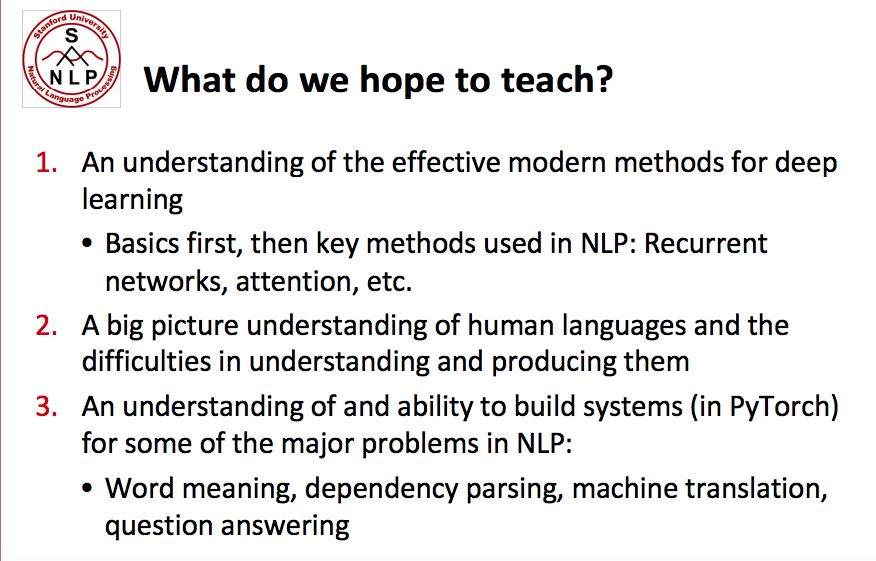

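The main goals of the course are for students to: learn the foundations of modern deep learning, especially the parts most relevant to NLP; gain a big-picture understanding of human language and of why understanding and producing it is difficult; and be able to understand and implement, in PyTorch, solutions to some of the major problems and tasks in NLP, such as word meaning, dependency parsing, machine translation, and question answering.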
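To give a flavor of the first task on that list, word meaning: the course opens with word vectors, where each word is represented as a dense vector and related words end up with vectors pointing in similar directions, which is commonly measured with cosine similarity. The sketch below is a hypothetical, standard-library-only illustration; the `TOY_VECTORS` values and the helper names `cosine_similarity` and `most_similar` are invented for this example and are not taken from the course materials. Real word vectors are learned from large corpora and have far more dimensions.

```python
import math

# Hypothetical 3-d "word vectors", invented for illustration only;
# real embeddings are learned from a corpus and have hundreds of dimensions.
TOY_VECTORS = {
    "king":  [0.8, 0.6, 0.1],
    "queen": [0.7, 0.7, 0.1],
    "apple": [0.1, 0.2, 0.9],
}


def cosine_similarity(u, v):
    """Cosine of the angle between vectors u and v (ranges from -1 to 1)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def most_similar(word, vectors):
    """Return the other word whose vector is most cosine-similar to `word`'s."""
    target = vectors[word]
    candidates = [w for w in vectors if w != word]
    return max(candidates, key=lambda w: cosine_similarity(target, vectors[w]))


print(most_similar("king", TOY_VECTORS))  # queen
```

Cosine similarity compares only the direction of two vectors and ignores their length, which is why it is a common default for comparing word embeddings.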

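As for the course videos, only the first 5 lectures have been officially released so far. I have downloaded a copy and uploaded it to Baidu Netdisk; if you are interested, follow the AINLP account and reply "cs224n" to get it. This copy will be updated until the full set of lectures is available, so stay tuned.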

Below are the links to the slides and other reading materials, which can be downloaded directly from the official course site:

http://web.stanford.edu/class/cs224n/index.html

| DATE | DESCRIPTION | COURSE MATERIALS | EVENTS | DEADLINES |
|---|---|---|---|---|
| Tue Jan 8 | Introduction and Word Vectors [slides] [notes] Gensim word vectors example: [zip] [preview] | Suggested Readings | Assignment 1 out [zip] [preview] | |
| Thu Jan 10 | Word Vectors 2 and Word Senses [slides] [notes] | Suggested Readings; Additional Readings | | |
| Fri Jan 11 | Python review session [slides] | 1:30 - 2:50pm Skilling Auditorium [map] | | |
| Tue Jan 15 | Word Window Classification, Neural Networks, and Matrix Calculus [slides] [matrix calculus notes] [notes (lectures 3 and 4)] | Suggested Readings; Additional Readings | Assignment 2 out [zip] [handout] | Assignment 1 due |
| Thu Jan 17 | Backpropagation and Computation Graphs [slides] [notes (lectures 3 and 4)] | Suggested Readings | | |
| Tue Jan 22 | Linguistic Structure: Dependency Parsing [slides] [scrawled-on slides] [notes] | Suggested Readings | Assignment 3 out [zip] [handout] | Assignment 2 due |
| Thu Jan 24 | The probability of a sentence? Recurrent Neural Networks and Language Models [slides] [notes (lectures 6 and 7)] | Suggested Readings | | |
| Tue Jan 29 | Vanishing Gradients and Fancy RNNs [slides] [notes (lectures 6 and 7)] | Suggested Readings | Assignment 4 out [zip] [handout] [Azure Guide] [Practical Guide to VMs] | Assignment 3 due |
| Thu Jan 31 | Machine Translation, Seq2Seq and Attention [slides] [notes] | Suggested Readings | | |
| Tue Feb 5 | Practical Tips for Final Projects [slides] [notes] | Suggested Readings | | |
| Thu Feb 7 | Question Answering and the Default Final Project [slides] | | Project Proposal out [instructions]; Default Final Project out [handout] [github repo] | Assignment 4 due |
| Tue Feb 12 | ConvNets for NLP [slides] | Suggested Readings | | |
| Thu Feb 14 | Information from parts of words: Subword Models [slides] | | Assignment 5 out [zip (requires Stanford login)] [handout] | Project Proposal due |
| Tue Feb 19 | Modeling contexts of use: Contextual Representations and Pretraining [slides] | Suggested Readings | | |
| Thu Feb 21 | Transformers and Self-Attention For Generative Models (guest lecture by Ashish Vaswani and Anna Huang) [slides] | Suggested Readings | | |
| Fri Feb 22 | | | Project Milestone out [instructions] | Assignment 5 due |
| Tue Feb 26 | Natural Language Generation [slides] | | | |
| Thu Feb 28 | Reference in Language and Coreference Resolution [slides] | | | |
| Tue Mar 5 | Multitask Learning: A general model for NLP? (guest lecture by Richard Socher) [slides] | | | Project Milestone due |
| Thu Mar 7 | Constituency Parsing and Tree Recursive Neural Networks [slides] | Suggested Readings | | |
| Tue Mar 12 | Safety, Bias, and Fairness (guest lecture by Margaret Mitchell) [slides] | | | |
| Thu Mar 14 | Future of NLP + Deep Learning [slides] | | | |
| Sun Mar 17 | | | | Final Project Report due [instructions] |
| Wed Mar 20 | Final project poster session [details] | 5:15 - 8:30pm McCaw Hall at the Alumni Center [map] | | Project Poster/Video due [instructions] |
