Scalable, Fast, and Efficient BERT Deployment with TensorFlow Extended

Source: TensorFlow

- BERT model: https://arxiv.org/abs/1810.04805
- Colab notebook: https://colab.sandbox.google.com/github/tensorflow/workshops/blob/master/blog/TFX_Pipeline_for_Bert_Preprocessing.ipynb
- TensorFlow Serving: https://tensorflow.google.cn/tfx/guide/serving
- Scalable model deployment: https://tensorflow.google.cn/tfx/serving/serving_kubernetes
- TensorFlow Transform: https://tensorflow.google.cn/tfx/guide/tft
- RaggedTensors: https://tensorflow.google.cn/guide/ragged_tensor
- TensorFlow World 2019 (playlist): https://v.youku.com/v_show/id_XNDQyMDUyNzE4OA

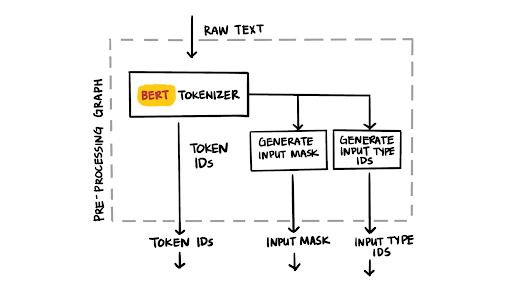

BERT Tokenizer

https://github.com/tensorflow/text/blob/master/tensorflow_text/python/ops/bert_tokenizer.py#L121

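    import tensorflow as tf
    import tensorflow_text as text

    # Look up the vocabulary file bundled with the loaded BERT layer.
    vocab_file_path = load_bert_layer().resolved_object.vocab_file.asset_path
    bert_tokenizer = text.BertTokenizer(vocab_lookup_table=vocab_file_path,
                                        token_out_type=tf.int64,
                                        lower_case=do_lower_case)
    ...
    # Here `text` is the raw input text tensor, not the tensorflow_text module.
    input_word_ids = tokenize_text(text)
    # The mask is 1 for real tokens and 0 for padding (token ID 0).
    input_mask = tf.cast(input_word_ids > 0, tf.int64)
    input_mask = tf.reshape(input_mask, [-1, MAX_SEQ_LEN])

    # Single-segment input, so every segment (type) ID is 0.
    zeros_dims = tf.stack(tf.shape(input_mask))
    input_type_ids = tf.fill(zeros_dims, 0)
    input_type_ids = tf.cast(input_type_ids, tf.int64)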
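
To make the three tensors above concrete, here is a minimal pure-Python sketch of the same idea: pad each token-ID sequence to a fixed length, then derive the mask and the segment IDs from it. It is an illustration, not the notebook's code. The function name `build_bert_inputs`, the value `MAX_SEQ_LEN = 8`, the truncation of overlong sequences, and the sample token IDs are all assumptions made for this example.

```python
from typing import Dict, List

MAX_SEQ_LEN = 8  # hypothetical value; the source does not state it
PAD_ID = 0       # assumption: ID 0 is padding, as the `> 0` mask above implies


def build_bert_inputs(token_ids: List[List[int]],
                      max_seq_len: int = MAX_SEQ_LEN) -> Dict[str, List[List[int]]]:
    """Pad or truncate each sequence, then derive the mask and segment IDs."""
    input_word_ids = []
    for ids in token_ids:
        # Truncating overlong input is an assumption of this sketch.
        clipped = ids[:max_seq_len]
        input_word_ids.append(clipped + [PAD_ID] * (max_seq_len - len(clipped)))

    # 1 marks a real token, 0 marks padding (mirrors `input_word_ids > 0`).
    input_mask = [[int(i > PAD_ID) for i in row] for row in input_word_ids]

    # Single-sentence input: every position belongs to segment 0.
    input_type_ids = [[0] * max_seq_len for _ in input_word_ids]

    return {
        "input_word_ids": input_word_ids,
        "input_mask": input_mask,
        "input_type_ids": input_type_ids,
    }


# Made-up token IDs for two sentences of different lengths.
features = build_bert_inputs([[101, 7592, 2088, 102], [101, 2023, 102]])
for name, rows in features.items():
    print(name, rows)
```

All three outputs share the shape `[batch, MAX_SEQ_LEN]`, which is why the original snippet can build `input_type_ids` from the shape of `input_mask`.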

- Colab: https://colab.sandbox.google.com/github/tensorflow/workshops/blob/master/blog/TFX_Pipeline_for_Bert_Preprocessing.ipynb
- Concur Labs demo page: https://bert.concurlabs.com/
- TFX user guide: https://tensorflow.google.cn/tfx/guide
- Building Machine Learning Pipelines: Automating Model Life Cycles with TensorFlow: http://www.buildingmlpipelines.com
- TFX discussion group: https://groups.google.com/a/tensorflow.org/forum/#!forum/tfx
