Computer Vision

Updated 2022.2.19

Industry Reports / Curated Materials

- "White Paper on the Development of China's Industrial Machine Vision Industry" (《中国工业机器视觉产业发展白皮书》), 2020.11, CCID Research Institute (赛迪研究院)

  - PPT: https://cetest02.cn-bj.ufileos.com/100001_2012145143/%E4%B8%AD%E5%9B%BD%E5%B7%A5%E4%B8%9A%E6%9C%BA%E5%99%A8%E8%A7%86%E8%A7%89%E4%BA%A7%E4%B8%9A%E5%8F%91%E5%B1%95%E7%99%BD%E7%9A%AE%E4%B9%A6.pdf

  - PDF: https://www.ambchina.com/data/upload/image/20211124/%E4%B8%AD%E5%9B%BD%E5%B7%A5%E4%B8%9A%E6%9C%BA%E5%99%A8%E8%A7%86%E8%A7%89%E4%BA%A7%E4%B8%9A%E5%8F%91%E5%B1%95%E7%99%BD%E7%9A%AE%E4%B9%A6%EF%BC%882020%EF%BC%89.pdf

- "Briefing on AI Applications and Industry Analysis in Medical Imaging" (《人工智能医学影像应用与产业分析简报》), 2021.6, Pinery Medical (派瑞医疗), https://pinery.io/assets/documents/2021%E6%B4%BE%E7%91%9E%E5%8C%BB%E7%96%97%E7%A7%91%E6%8A%80-%E4%BA%BA%E5%B7%A5%E6%99%BA%E8%83%BD%E5%8C%BB%E5%AD%A6%E5%BD%B1%E5%83%8F%E5%BA%94%E7%94%A8%E4%B8%8E%E4%BA%A7%E4%B8%9A%E5%88%86%E6%9E%90%E7%AE%80%E6%8A%A5.pdf

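- "Leaders in Core Machine Vision Components: High Industry Growth Resonating with Rising Market Share" (《机器视觉核心部件龙头:行业高增长与市占率提升共振》), 2021.7, Zheshang Securities (浙商证券), https://pdf.dfcfw.com/pdf/H3_AP202107141503712911_1.PDF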

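- "2020 China Computer Vision Talent Survey Report" (《2020年度计算机视觉人才调研报告》), 2020, Deloitte (德勤), https://www2.deloitte.com/content/dam/Deloitte/cn/Documents/innovation/deloitte-cn-iddc-2020-china-computer-vision-talent-survey-report-zh-210220.pdf

- "OpenMMLab: An Open-Source Algorithm System for Computer Vision" (《OpenMMLab 计算机视觉开源算法体系》), 2021, Global Open-source Technology Conference (全球开源技术峰会), https://gotc.oschina.net/uploads/files/09%20%E9%99%88%E6%81%BAOpenMMLab%20%E6%BC%94%E8%AE%B2.pdf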

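- "Trends in Computer Vision over the Next 5-10 Years" (《未来5-10年计算机视觉发展趋势》), 2020, CCF Technical Committee on Computer Vision (CCF计算机视觉委员会), https://mp.weixin.qq.com/s/ZCULMForCTQTub-INlTprA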

- "Twenty Years of Image Segmentation: The 10 Most Influential Papers" (“图像分割二十年,盘点影响力最大的10篇论文”), 2022, https://www.zhuanzhi.ai/vip/badb6122fb88ee972f5f63cc45eb47d6

- "Twenty Years of CVPR: The 10 Most Influential Papers" (“CVPR 二十年,影响力最大的 10 篇论文!”), 2022, https://www.zhuanzhi.ai/vip/d049a004e3d4d139d7cf10e0100b3d34

Getting Started

- "Computer Vision: Teaching Cold Machines to Understand This Colorful World" (计算机视觉:让冰冷的机器看懂这个多彩的世界) by

- "Deep Learning and Visual Computing" (深度学习与视觉计算) by Wang Liang (王亮), Institute of Automation, Chinese Academy of Sciences

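- "How to Do Good Research in Computer Vision?" (如何做好计算机视觉的研究?) by Dr. Gang Hua (华刚)

  - Dr. Hua is a senior researcher at Microsoft Research Asia. He will serve as Program Chair of the Conference on Computer Vision and Pattern Recognition (CVPR 2019), and as Area Chair of CVPR 2017 and ACM MM 2017.

  - [http://www.msra.cn/zh-cn/news/features/do-research-in-computer-vision-20161205]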

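- "Computer Vision" (计算机视觉), a Microsoft Research Asia article series

  - An accessible introduction to the many everyday applications of computer vision.

  - [http://www.msra.cn/zh-cn/research/computer-vision]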

- How Convolutional Neural Networks Perform Image Recognition (卷积神经网络如何进行图像识别)

- The Principle Behind Similar Image Search (相似图片搜索的原理) by Ruan Yifeng (阮一峰)

- How to Detect Image Edges? (如何识别图像边缘?) by Ruan Yifeng (阮一峰)

- Image Object Detection: Principles and Implementation, parts 1-6 (图像目标检测(Object Detection)原理与实现)

- Moving Object Tracking series, parts 1-17 (运动目标跟踪系列): [http://blog.csdn.net/App_12062011/article/category/6269524/1]

- The Little AI That Describes Pictures: A Light Introduction to Image Captioning, parts 1 and 2

- An Introduction to Video Analysis Subfields: Video Captioning (video-to-text description) (Video Analysis 相关领域介绍之Video Captioning(视频to文字描述))

- From Tesla to Computer Vision: Image Semantic Segmentation (从特斯拉到计算机视觉之「图像语义分割」)

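- Article series from the WeChat public account 视觉求索 ("Visual Quest")

  - "A Brief Discussion of Artificial Intelligence: Status, Tasks, Architecture, and Unification" | Back to the Source (浅谈人工智能:现状、任务、构架与统一 | 正本清源) [http://mp.weixin.qq.com/s/-wSYLu-XvOrsST8_KEUa-Q]

  - "If Life Were Always Like the First Meeting" | Academic Life (人生若只如初见 | 学术人生) [https://mp.weixin.qq.com/s/kFA7bI_FFjZQkBNDvcn01g]

  - "A First Look at the Three Origins of Computer Vision, with Remarks on Artificial Intelligence" | Back to the Source (初探计算机视觉的三个源头、兼谈人工智能|正本清源) [https://mp.weixin.qq.com/s/2ytV5Bt50yhYOFYXYQe6ZQ]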
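Ruan Yifeng's similar-image-search article explains perceptual hashing. Below is a minimal Python sketch of the average-hash variant. It assumes the image has already been shrunk to a small grayscale grid such as 8x8; the resizing and grayscale-conversion steps are omitted. The function names are illustrative and are not taken from the article.

```python
from typing import List


def average_hash(gray: List[List[float]]) -> int:
    """Average hash of an already-downscaled grayscale image (e.g. 8x8 -> 64 bits)."""
    pixels = [p for row in gray for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        # Each bit records whether this pixel is at least as bright as the mean.
        bits = (bits << 1) | (1 if p >= mean else 0)
    return bits


def hamming_distance(h1: int, h2: int) -> int:
    """Number of differing bits; a smaller distance suggests more similar images."""
    return bin(h1 ^ h2).count("1")


if __name__ == "__main__":
    img_a = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]  # smooth gradient
    img_b = [[v + 3 for v in row] for row in img_a]                   # slightly brighter copy
    img_c = img_a[::-1]                                               # upside-down copy
    print(hamming_distance(average_hash(img_a), average_hash(img_b)))  # → 0
    print(hamming_distance(average_hash(img_a), average_hash(img_c)))  # → 64
```

Uniform brightness changes leave the hash unchanged because each pixel is compared with the image's own mean. Flipping this gradient upside down inverts every bit. The distance threshold that separates "similar" from "different" is a tuning choice.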

Surveys

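- Annotated Computer Vision Bibliography: Table of Contents. Keith Price has maintained this index since 1994. It covers works on every topic and subtopic in computer vision, including papers and textbooks, and lists keywords for each topic. The site is updated frequently (most recently on August 28, 2017). It collects the important journal papers, conference papers, and books in each area, and keeps all of its links working.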

- What Sparked Video Research in 1877? The Overlooked Role of the Siemens Artificial Eye by Mark Schubin 2017 http://ieeexplore.ieee.org/document/7857854/

- Giving machines humanlike eyes. by Posch, C., Benosman, R., Etienne-Cummings, R. 2015 http://ieeexplore.ieee.org/document/7335800/

- Seeing is not enough by Tom Geller, Oberlin, OH https://dl.acm.org/citation.cfm?id=2001276

- Visual Tracking: An Experimental Survey https://dl.acm.org/citation.cfm?id=2693387

- A survey on object recognition and segmentation techniques http://ieeexplore.ieee.org/document/7724975/

- A Review of Image Recognition with Deep Convolutional Neural Network https://link.springer.com/chapter/10.1007/978-3-319-63309-1_7

- Recent Advance in Content-based Image Retrieval: A Literature Survey. Wengang Zhou, Houqiang Li, and Qi Tian 2017 https://arxiv.org/pdf/1706.06064.pdf

- Automatic Description Generation from Images: A Survey of Models, Datasets, and Evaluation Measures 2016 https://www.jair.org/media/4900/live-4900-9139-jair.pdf

- A Survey of Social Relationship Understanding Based on Image and Video Information (基于图像和视频信息的社交关系理解研究综述) 2021 https://cjc.ict.ac.cn/online/onlinepaper/wz-20216780932.pdf

Advanced Papers

Image Classification

- Microsoft Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun, Deep Residual Learning for Image Recognition [http://arxiv.org/pdf/1512.03385v1.pdf] [http://image-net.org/challenges/talks/ilsvrc2015_deep_residual_learning_kaiminghe.pdf]

- Microsoft Kaiming He, Xiangyu Zhang, Shaoqing Ren, Jian Sun, Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification, [http://arxiv.org/pdf/1502.01852]

- Batch Normalization Sergey Ioffe, Christian Szegedy, Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift [http://arxiv.org/pdf/1502.03167]

- GoogLeNet Christian Szegedy, Wei Liu, Yangqing Jia, Pierre Sermanet, Scott Reed, Dragomir Anguelov, Dumitru Erhan, Vincent Vanhoucke, Andrew Rabinovich, CVPR, 2015. [http://arxiv.org/pdf/1409.4842]

- VGG-Net Karen Simonyan and Andrew Zisserman, Very Deep Convolutional Networks for Large-Scale Visual Recognition, ICLR, 2015. [http://www.robots.ox.ac.uk/vgg/research/verydeep/] [http://arxiv.org/pdf/1409.1556]

- AlexNet Alex Krizhevsky, Ilya Sutskever, Geoffrey E. Hinton, ImageNet Classification with Deep Convolutional Neural Networks, NIPS, 2012. [http://papers.nips.cc/book/advances-in-neural-information-processing-systems-25-2012]
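The batch normalization paper listed above normalizes each activation with mini-batch statistics. It then applies a learned scale γ and shift β. Below is a minimal pure-Python sketch of the training-time forward pass for a single feature. It assumes ε = 1e-5 and omits the running statistics used at inference time.

```python
import math
from typing import List


def batch_norm_forward(x: List[float], gamma: float = 1.0, beta: float = 0.0,
                       eps: float = 1e-5) -> List[float]:
    """Training-mode batch normalization for one feature over a mini-batch of values."""
    n = len(x)
    mean = sum(x) / n
    var = sum((v - mean) ** 2 for v in x) / n                # biased variance of the mini-batch
    x_hat = [(v - mean) / math.sqrt(var + eps) for v in x]  # zero mean, roughly unit variance
    return [gamma * v + beta for v in x_hat]                # learned scale and shift


if __name__ == "__main__":
    print(batch_norm_forward([1.0, 2.0, 3.0, 4.0]))
    print(batch_norm_forward([1.0, 2.0, 3.0, 4.0], gamma=2.0, beta=3.0))
```

At inference time, the paper replaces the mini-batch statistics with population estimates (in practice, running averages). This sketch does not track them.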

Object Detection

Advanced Articles

- Deep Neural Networks for Object Detection, NIPS 2013:

- R-CNN Rich feature hierarchies for accurate object detection and semantic segmentation:

- Fast R-CNN :

- Faster R-CNN Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks:

- Scalable Object Detection using Deep Neural Networks

- Scalable, High-Quality Object Detection

- SPP-Net Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition

- DeepID-Net DeepID-Net: Deformable Deep Convolutional Neural Networks for Object Detection

- Object Detectors Emerge in Deep Scene CNNs

- segDeepM: Exploiting Segmentation and Context in Deep Neural Networks for Object Detection

- Object Detection Networks on Convolutional Feature Maps

- Improving Object Detection with Deep Convolutional Networks via Bayesian Optimization and Structured Prediction

- DeepBox: Learning Objectness with Convolutional Networks

- Object detection via a multi-region & semantic segmentation-aware CNN model

- You Only Look Once: Unified, Real-Time Object Detection

- YOLOv2 YOLO9000: Better, Faster, Stronger

- AttentionNet: Aggregating Weak Directions for Accurate Object Detection

- DenseBox: Unifying Landmark Localization with End to End Object Detection

- SSD: Single Shot MultiBox Detector

- DSSD: Deconvolutional Single Shot Detector

- G-CNN: an Iterative Grid Based Object Detector

- HyperNet: Towards Accurate Region Proposal Generation and Joint Object Detection

- A MultiPath Network for Object Detection

- R-FCN: Object Detection via Region-based Fully Convolutional Networks

- A Unified Multi-scale Deep Convolutional Neural Network for Fast Object Detection

- PVANET: Deep but Lightweight Neural Networks for Real-time Object Detection

- Feature Pyramid Networks for Object Detection

- Learning Chained Deep Features and Classifiers for Cascade in Object Detection

- DSOD: Learning Deeply Supervised Object Detectors from Scratch

- Focal Loss for Dense Object Detection, ICCV 2017 (Best Student Paper Award), Facebook AI Research
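Many of the detectors listed above, such as the R-CNN family, YOLO, and SSD, produce many overlapping candidate boxes. They prune these with non-maximum suppression (NMS). Below is a minimal sketch of greedy NMS based on intersection-over-union (IoU). The (x1, y1, x2, y2) box format and the 0.5 threshold are assumptions, and individual papers differ in thresholds and details such as per-class suppression.

```python
from typing import List, Sequence, Tuple

# A box is (x1, y1, x2, y2) with x2 > x1 and y2 > y1.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Greedy non-maximum suppression; returns indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept box by more than the threshold.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


if __name__ == "__main__":
    boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    scores = [0.9, 0.8, 0.7]
    print(nms(boxes, scores))  # → [0, 2]
```

The second box overlaps the first with IoU of about 0.68, so it is suppressed. The distant third box survives.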

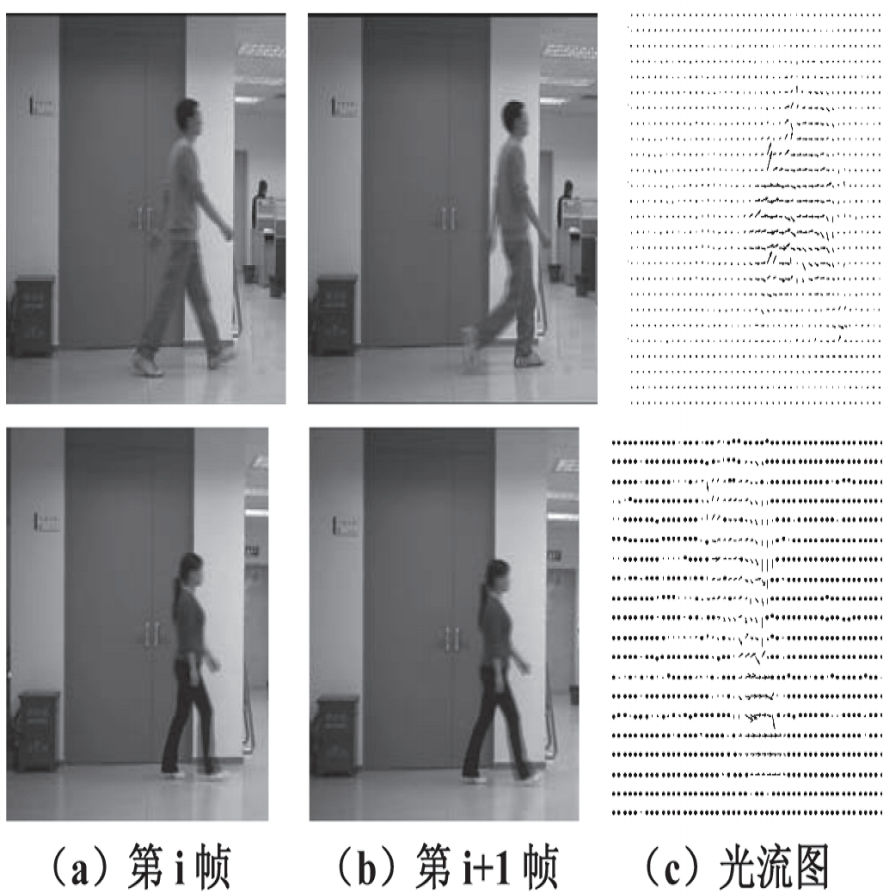

Video Classification

- Nicolas Ballas, Li Yao, Chris Pal, Aaron Courville, "Delving Deeper into Convolutional Networks for Learning Video Representations", ICLR 2016. [http://arxiv.org/pdf/1511.06432v4.pdf]

- Michael Mathieu, Camille Couprie, Yann LeCun, "Deep Multi Scale Video Prediction Beyond Mean Square Error", ICLR 2016. Paper [http://arxiv.org/pdf/1511.05440v6.pdf]

- Donahue, Jeffrey, et al. Long-term recurrent convolutional networks for visual recognition and description CVPR 2015 https://arxiv.org/abs/1411.4389

- Karpathy, Andrej, et al. Large-scale Video Classification with Convolutional Neural Networks. CVPR 2014 http://cs.stanford.edu/people/karpathy/deepvideo/

- Yue-Hei Ng, Joe, et al. Beyond short snippets: Deep networks for video classification. CVPR 2015 https://arxiv.org/abs/1503.08909

- Tran, Du, et al. Learning Spatiotemporal Features with 3D Convolutional Networks. ICCV 2015 https://arxiv.org/abs/1412.0767

Object Tracking

- Seunghoon Hong, Tackgeun You, Suha Kwak, Bohyung Han, Online Tracking by Learning Discriminative Saliency Map with Convolutional Neural Network, arXiv:1502.06796. [http://arxiv.org/pdf/1502.06796]

- Hanxi Li, Yi Li and Fatih Porikli, DeepTrack: Learning Discriminative Feature Representations by Convolutional Neural Networks for Visual Tracking, BMVC, 2014. [http://www.bmva.org/bmvc/2014/files/paper028.pdf]

- N Wang, DY Yeung, Learning a Deep Compact Image Representation for Visual Tracking, NIPS, 2013. [http://winsty.net/papers/dlt.pdf]

- Chao Ma, Jia-Bin Huang, Xiaokang Yang and Ming-Hsuan Yang, Hierarchical Convolutional Features for Visual Tracking, ICCV 2015 Paper [http://www.cv-foundation.org/openaccess/content_iccv_2015/papers/Ma_Hierarchical_Convolutional_Features_ICCV_2015_paper.pdf] [https://github.com/jbhuang0604/CF2]

- Lijun Wang, Wanli Ouyang, Xiaogang Wang, and Huchuan Lu, Visual Tracking with fully Convolutional Networks, ICCV 2015 [http://202.118.75.4/lu/Paper/ICCV2015/iccv15_lijun.pdf] [https://github.com/scott89/FCNT]

- Hyeonseob Nam and Bohyung Han, Learning Multi-Domain Convolutional Neural Networks for Visual Tracking. [http://arxiv.org/pdf/1510.07945.pdf] [https://github.com/HyeonseobNam/MDNet] [http://cvlab.postech.ac.kr/research/mdnet/]

Segmentation

- Alexander Kolesnikov, Christoph Lampert, Seed, Expand and Constrain: Three Principles for Weakly-Supervised Image Segmentation, ECCV, 2016. [http://pub.ist.ac.at/akolesnikov/files/ECCV2016/main.pdf] [https://github.com/kolesman/SEC]

- Guosheng Lin, Chunhua Shen, Ian Reid, Anton van den Hengel, Efficient piecewise training of deep structured models for semantic segmentation, arXiv:1504.01013. [http://arxiv.org/pdf/1504.01013]

- Guosheng Lin, Chunhua Shen, Ian Reid, Anton van den Hengel, Deeply Learning the Messages in Message Passing Inference, arXiv:1506.02108. [http://arxiv.org/pdf/1506.02108]

- Deep Parsing Network. Ziwei Liu, Xiaoxiao Li, Ping Luo, Chen Change Loy, Xiaoou Tang, Semantic Image Segmentation via Deep Parsing Network, arXiv:1509.02634 / ICCV 2015 [http://arxiv.org/pdf/1509.02634.pdf]

- CentraleSuperBoundaries, Iasonas Kokkinos, Surpassing Humans in Boundary Detection using Deep Learning INRIA [http://arxiv.org/pdf/1511.07386]

- BoxSup. Jifeng Dai, Kaiming He, Jian Sun, BoxSup: Exploiting Bounding Boxes to Supervise Convolutional Networks for Semantic Segmentation [http://arxiv.org/pdf/1503.01640]

- Hyeonwoo Noh, Seunghoon Hong, Bohyung Han, Learning Deconvolution Network for Semantic Segmentation, arXiv:1505.04366. [http://arxiv.org/pdf/1505.04366]

- Seunghoon Hong, Hyeonwoo Noh, Bohyung Han, Decoupled Deep Neural Network for Semi-supervised Semantic Segmentation, arXiv:1506.04924. [http://arxiv.org/pdf/1506.04924]

- Seunghoon Hong, Junhyuk Oh, Bohyung Han, and Honglak Lee, Learning Transferrable Knowledge for Semantic Segmentation with Deep Convolutional Neural Network. [http://arxiv.org/pdf/1512.07928.pdf] Project Page [http://cvlab.postech.ac.kr/research/transfernet/]

- Shuai Zheng, Sadeep Jayasumana, Bernardino Romera-Paredes, Vibhav Vineet, Zhizhong Su, Dalong Du, Chang Huang, Philip H. S. Torr, Conditional Random Fields as Recurrent Neural Networks. [http://arxiv.org/pdf/1502.03240]

- Liang-Chieh Chen, George Papandreou, Kevin Murphy, Alan L. Yuille, Weakly- and semi-supervised learning of a DCNN for semantic image segmentation, arXiv:1502.02734. [http://arxiv.org/pdf/1502.02734]

- Mohammadreza Mostajabi, Payman Yadollahpour, Gregory Shakhnarovich, Feedforward Semantic Segmentation With Zoom-Out Features, CVPR, 2015 [http://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Mostajabi_Feedforward_Semantic_Segmentation_2015_CVPR_paper.pdf]

- Holger Caesar, Jasper Uijlings, Vittorio Ferrari, Joint Calibration for Semantic Segmentation. [http://arxiv.org/pdf/1507.01581]

- Jonathan Long, Evan Shelhamer, Trevor Darrell, Fully Convolutional Networks for Semantic Segmentation, CVPR, 2015. [http://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Long_Fully_Convolutional_Networks_2015_CVPR_paper.pdf]

- Bharath Hariharan, Pablo Arbelaez, Ross Girshick, Jitendra Malik, Hypercolumns for Object Segmentation and Fine-Grained Localization, CVPR, 2015. [http://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Hariharan_Hypercolumns_for_Object_2015_CVPR_paper.pdf]

- Abhishek Sharma, Oncel Tuzel, David W. Jacobs, Deep Hierarchical Parsing for Semantic Segmentation, CVPR, 2015. [http://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Sharma_Deep_Hierarchical_Parsing_2015_CVPR_paper.pdf]

- Clement Farabet, Camille Couprie, Laurent Najman, Yann LeCun, Scene Parsing with Multiscale Feature Learning, Purity Trees, and Optimal Covers, ICML, 2012. [http://yann.lecun.com/exdb/publis/pdf/farabet-icml-12.pdf]

- Clement Farabet, Camille Couprie, Laurent Najman, Yann LeCun, Learning Hierarchical Features for Scene Labeling, PAMI, 2013. [http://yann.lecun.com/exdb/publis/pdf/farabet-pami-13.pdf]

- Fisher Yu, Vladlen Koltun, "Multi-Scale Context Aggregation by Dilated Convolutions", ICLR 2016, [http://arxiv.org/pdf/1511.07122v2.pdf]

- Niloufar Pourian, S. Karthikeyan, and B.S. Manjunath, "Weakly supervised graph based semantic segmentation by learning communities of image-parts", ICCV, 2015, [http://www.cv-foundation.org/openaccess/content_iccv_2015/papers/Pourian_Weakly_Supervised_Graph_ICCV_2015_paper.pdf]
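Yu and Koltun's dilated-convolution paper above relies on one property: stacking dilated convolutions grows the receptive field exponentially without pooling or loss of resolution. Below is a minimal 1-D pure-Python sketch of a dilated convolution and of receptive-field arithmetic for a stack of stride-1 layers. The function names are illustrative, and real implementations operate on padded 2-D feature maps.

```python
from typing import List


def dilated_conv1d(x: List[float], w: List[float], dilation: int) -> List[float]:
    """'Valid' 1-D dilated convolution, written as cross-correlation like most DL libraries."""
    span = (len(w) - 1) * dilation + 1  # effective extent of the dilated kernel
    return [sum(w[k] * x[i + k * dilation] for k in range(len(w)))
            for i in range(len(x) - span + 1)]


def receptive_field(kernel: int, dilations: List[int]) -> int:
    """Receptive field of stacked stride-1 convolutions with the given dilation rates."""
    return 1 + sum((kernel - 1) * d for d in dilations)


if __name__ == "__main__":
    print(dilated_conv1d([0, 1, 2, 3, 4, 5, 6], [1, 1, 1], dilation=2))  # → [6, 9, 12]
    print(receptive_field(3, [1, 1, 1]))  # → 7: linear growth without dilation
    print(receptive_field(3, [1, 2, 4]))  # → 15: doubling dilations grow it exponentially
```

With 3-wide kernels and dilations 1, 2, ..., 2^i, the receptive field is 2^{i+2} - 1 per side. This matches the exponential growth described in the paper.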

Object Recognition

- DeCAF: A Deep Convolutional Activation Feature for Generic Visual Recognition. Jeff Donahue, Yangqing Jia, Oriol Vinyals, Judy Hoffman, Ning Zhang, Eric Tzeng, Trevor Darrell [http://arxiv.org/abs/1310.1531]

- CNN Features off-the-shelf: an Astounding Baseline for Recognition CVPR 2014 [http://arxiv.org/abs/1403.6382]

- HD-CNN: Hierarchical Deep Convolutional Neural Network for Image Classification intro: ICCV 2015 [https://arxiv.org/abs/1410.0736]

- Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. ImageNet top-5 error: 4.94% [http://arxiv.org/abs/1502.01852]

- Humans and deep networks largely agree on which kinds of variation make object recognition harder [http://arxiv.org/abs/1604.06486]

- FusionNet: 3D Object Classification Using Multiple Data Representations [https://arxiv.org/abs/1607.05695]

- Deep FisherNet for Object Classification [http://arxiv.org/abs/1608.00182]

- Factorized Bilinear Models for Image Recognition [https://arxiv.org/abs/1611.05709]

- Hyperspectral CNN Classification with Limited Training Samples [https://arxiv.org/abs/1611.09007]

- The More You Know: Using Knowledge Graphs for Image Classification [https://arxiv.org/abs/1612.04844]

- MaxMin Convolutional Neural Networks for Image Classification [http://webia.lip6.fr/thomen/papers/BlotICIP2016.pdf]

- Cost-Effective Active Learning for Deep Image Classification. TCSVT 2016. [https://arxiv.org/abs/1701.03551]

- Deep Collaborative Learning for Visual Recognition [https://www.arxiv.org/abs/1703.01229]

- Convolutional Low-Resolution Fine-Grained Classification [https://arxiv.org/abs/1703.05393]

- Deep Mixture of Diverse Experts for Large-Scale Visual Recognition [https://arxiv.org/abs/1706.07901]

- Sunrise or Sunset: Selective Comparison Learning for Subtle Attribute Recognition [https://arxiv.org/abs/1707.06335]

- Why Do Deep Neural Networks Still Not Recognize These Images?: A Qualitative Analysis on Failure Cases of ImageNet Classification [https://arxiv.org/abs/1709.03439]

- B-CNN: Branch Convolutional Neural Network for Hierarchical Classification [https://arxiv.org/abs/1709.09890]

- Multiple Object Recognition with Visual Attention [https://arxiv.org/abs/1412.7755]

- Multiple Instance Learning Convolutional Neural Networks for Object Recognition [https://arxiv.org/abs/1610.03155]

- Deep Learning Face Representation from Predicting 10,000 Classes. intro: CVPR 2014 [http://mmlab.ie.cuhk.edu.hk/pdf/YiSun_CVPR14.pdf]

- Deep Learning Face Representation by Joint Identification-Verification [http://arxiv.org/abs/1406.4773]

- Deeply learned face representations are sparse, selective, and robust [http://arxiv.org/abs/1412.1265]

- FaceNet: A Unified Embedding for Face Recognition and Clustering [http://arxiv.org/abs/1503.03832]

- Bilinear CNN Models for Fine-grained Visual Recognition http://vis-www.cs.umass.edu/bcnn/

- DeepFood: Deep Learning-Based Food Image Recognition for Computer-Aided Dietary Assessment http://arxiv.org/abs/1606.05675

- Multi-attribute Learning for Pedestrian Attribute Recognition in Surveillance Scenarios http://or.nsfc.gov.cn/bitstream/00001903-5/417802/1/1000014103914.pdf
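FaceNet, listed above, trains an embedding f with a triplet loss, L = max(‖f(a) − f(p)‖² − ‖f(a) − f(n)‖² + α, 0). The loss pushes an anchor a closer to a positive p (same identity) than to a negative n (different identity) by at least a margin α. Below is a minimal pure-Python sketch on toy 2-D embeddings. The margin value 0.2 is an assumption here. FaceNet also L2-normalizes embeddings and mines hard triplets, which this sketch omits.

```python
from typing import Sequence


def squared_l2(u: Sequence[float], v: Sequence[float]) -> float:
    """Squared Euclidean distance between two embeddings."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def triplet_loss(anchor: Sequence[float], positive: Sequence[float],
                 negative: Sequence[float], margin: float = 0.2) -> float:
    """Hinge on the gap between anchor-positive and anchor-negative squared distances."""
    return max(squared_l2(anchor, positive) - squared_l2(anchor, negative) + margin, 0.0)


if __name__ == "__main__":
    a, p = (0.0, 0.0), (0.1, 0.0)
    print(triplet_loss(a, p, (1.0, 0.0)))  # easy negative, already far away: loss is 0.0
    print(triplet_loss(a, p, (0.2, 0.0)))  # hard negative inside the margin: positive loss
```

Only triplets that violate the margin contribute a gradient. This is why the choice of which triplets to train on matters in practice.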

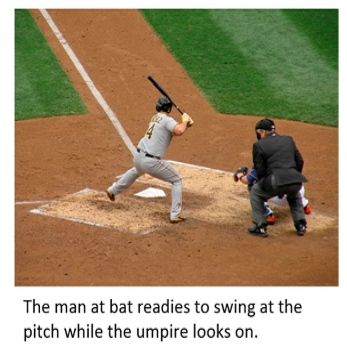

Image Captioning

- m-RNN model: Explain Images with Multimodal Recurrent Neural Networks, 2014 https://arxiv.org/pdf/1410.1090.pdf

- NIC model: Show and Tell: A Neural Image Caption Generator, 2014

- MS Captivator From captions to visual concepts and back 2014

- Show, Attend and Tell: Neural Image Caption Generation with Visual Attention 2015

- What Value Do Explicit High Level Concepts Have in Vision to Language Problems? 2016 https://arxiv.org/pdf/1506.01144.pdf

- Guiding Long-Short Term Memory for Image Caption Generation 2015 https://arxiv.org/pdf/1509.04942.pdf

- Watch What You Just Said: Image Captioning with Text-Conditional Attention 2016 https://arxiv.org/pdf/1606.04621.pdf https://github.com/LuoweiZhou/e2e-gLSTM-sc

- Self-critical Sequence Training for Image Captioning, CVPR 2017 https://arxiv.org/pdf/1612.00563.pdf

- Deep Reinforcement Learning-based Image Captioning with Embedding Reward, CVPR 2017 https://arxiv.org/abs/1704.03899

- Knowing When to Look: Adaptive Attention via a Visual Sentinel for Image Captioning, CVPR 2017 https://arxiv.org/pdf/1612.01887.pdf https://github.com/jiasenlu/AdaptiveAttention

- Ryan Kiros, Ruslan Salakhutdinov, Richard S. Zemel, Unifying Visual-Semantic Embeddings with Multimodal Neural Language Models, arXiv:1411.2539. https://arxiv.org/abs/1411.2539

- Berkeley Jeff Donahue, Lisa Anne Hendricks, Sergio Guadarrama, Marcus Rohrbach, Subhashini Venugopalan, Kate Saenko, Trevor Darrell, Long-term Recurrent Convolutional Networks for Visual Recognition and Description https://arxiv.org/abs/1411.4389

- UML / UT Subhashini Venugopalan, Huijuan Xu, Jeff Donahue, Marcus Rohrbach, Raymond Mooney, Kate Saenko, Translating Videos to Natural Language Using Deep Recurrent Neural Networks, NAACL-HLT, 2015. https://arxiv.org/abs/1412.4729

- CMU / Microsoft Xinlei Chen, C. Lawrence Zitnick, Learning a Recurrent Visual Representation for Image Caption Generation https://arxiv.org/abs/1411.5654

- Xinlei Chen, C. Lawrence Zitnick, Mind’s Eye: A Recurrent Visual Representation for Image Caption Generation, CVPR 2015 https://www.cs.cmu.edu/~xinleic/papers/cvpr15_rnn.pdf

- Facebook Remi Lebret, Pedro O. Pinheiro, Ronan Collobert, Phrase-based Image Captioning, arXiv:1502.03671 / ICML 2015 https://arxiv.org/abs/1502.03671

- UCLA / Baidu Junhua Mao, Wei Xu, Yi Yang, Jiang Wang, Zhiheng Huang, Alan L. Yuille, Learning like a Child: Fast Novel Visual Concept Learning from Sentence Descriptions of Images https://arxiv.org/abs/1504.06692

- MS + Berkeley Jacob Devlin, Saurabh Gupta, Ross Girshick, Margaret Mitchell, C. Lawrence Zitnick, Exploring Nearest Neighbor Approaches for Image Captioning https://arxiv.org/abs/1505.04467

- Jacob Devlin, Hao Cheng, Hao Fang, Saurabh Gupta, Li Deng, Xiaodong He, Geoffrey Zweig, Margaret Mitchell, Language Models for Image Captioning: The Quirks and What Works https://arxiv.org/abs/1505.01809

- Adelaide Qi Wu, Chunhua Shen, Anton van den Hengel, Lingqiao Liu, Anthony Dick, Image Captioning with an Intermediate Attributes Layer https://arxiv.org/abs/1506.01144v1

- Tilburg Grzegorz Chrupala, Akos Kadar, Afra Alishahi, Learning language through pictures https://arxiv.org/abs/1506.03694

- Univ. Montreal Kyunghyun Cho, Aaron Courville, Yoshua Bengio, Describing Multimedia Content using Attention-based Encoder-Decoder Networks https://arxiv.org/abs/1507.01053

- Cornell Jack Hessel, Nicolas Savva, Michael J. Wilber, Image Representations and New Domains in Neural Image Captioning https://arxiv.org/abs/1508.02091

- MS + City Univ. of HongKong Ting Yao, Tao Mei, and Chong-Wah Ngo, "Learning Query and Image Similarities with Ranking Canonical Correlation Analysis", ICCV, 2015 https://www.cv-foundation.org/openaccess/content_iccv_2015/papers/Yao_Learning_Query_and_ICCV_2015_paper.pdf

- Mao J, Xu W, Yang Y, et al. Deep Captioning with Multimodal Recurrent Neural Networks (m-RNN) 2015. https://arxiv.org/abs/1412.6632

- Pan Y, Yao T, Li H, et al. Video Captioning with Transferred Semantic Attributes 2016. https://arxiv.org/abs/1611.07675

- Johnson J, Karpathy A, Li F F. DenseCap: Fully Convolutional Localization Networks for Dense Captioning https://arxiv.org/abs/1511.07571

- I. Sutskever, O. Vinyals, and Q. V. Le. Sequence to sequence learning with neural networks. NIPS 2014. https://arxiv.org/abs/1409.3215

- Karpathy A, Li F F. Deep Visual-Semantic Alignments for Generating Image Descriptions TPAMI 2015 https://arxiv.org/abs/1412.2306

- A. Karpathy, G. Toderici, S. Shetty, T. Leung, R. Sukthankar, and L. Fei-Fei. Large-scale video classification with convolutional neural networks. CVPR, 2014. http://cs.stanford.edu/people/karpathy/deepvideo/

- Yao T, Pan Y, Li Y, et al. Boosting Image Captioning with Attributes 2016. https://arxiv.org/abs/1611.01646

- Venugopalan S, Rohrbach M, Donahue J, et al. Sequence to Sequence -- Video to Text. 2015. https://arxiv.org/abs/1505.00487

Video Captioning

- Jeff Donahue, Lisa Anne Hendricks, Sergio Guadarrama, Marcus Rohrbach, Subhashini Venugopalan, Kate Saenko, Trevor Darrell, Long-term Recurrent Convolutional Networks for Visual Recognition and Description, CVPR, 2015. [http://jeffdonahue.com/lrcn/] [http://arxiv.org/pdf/1411.4389.pdf]

- Subhashini Venugopalan, Huijuan Xu, Jeff Donahue, Marcus Rohrbach, Raymond Mooney, Kate Saenko, Translating Videos to Natural Language Using Deep Recurrent Neural Networks, arXiv:1412.4729. UT / UML / Berkeley [http://arxiv.org/pdf/1412.4729]

- Yingwei Pan, Tao Mei, Ting Yao, Houqiang Li, Yong Rui, Joint Modeling Embedding and Translation to Bridge Video and Language, arXiv:1505.01861. Microsoft [http://arxiv.org/pdf/1505.01861]

- Subhashini Venugopalan, Marcus Rohrbach, Jeff Donahue, Raymond Mooney, Trevor Darrell, Kate Saenko, Sequence to Sequence--Video to Text, arXiv:1505.00487. UT / Berkeley / UML [http://arxiv.org/pdf/1505.00487]

- Li Yao, Atousa Torabi, Kyunghyun Cho, Nicolas Ballas, Christopher Pal, Hugo Larochelle, Aaron Courville, Describing Videos by Exploiting Temporal Structure, arXiv:1502.08029 Univ. Montreal / Univ. Sherbrooke [http://arxiv.org/pdf/1502.08029.pdf]

- Anna Rohrbach, Marcus Rohrbach, Bernt Schiele, The Long-Short Story of Movie Description, arXiv:1506.01698 MPI / Berkeley [http://arxiv.org/pdf/1506.01698.pdf]

- Yukun Zhu, Ryan Kiros, Richard Zemel, Ruslan Salakhutdinov, Raquel Urtasun, Antonio Torralba, Sanja Fidler, Aligning Books and Movies: Towards Story-like Visual Explanations by Watching Movies and Reading Books, arXiv:1506.06724 Univ. Toronto / MIT [http://arxiv.org/pdf/1506.06724.pdf]

- Kyunghyun Cho, Aaron Courville, Yoshua Bengio, Describing Multimedia Content using Attention-based Encoder-Decoder Networks, arXiv:1507.01053 Univ. Montreal [http://arxiv.org/pdf/1507.01053.pdf]

- Dotan Kaufman, Gil Levi, Tal Hassner, Lior Wolf, Temporal Tessellation for Video Annotation and Summarization, arXiv:1612.06950. TAU / USC [https://arxiv.org/pdf/1612.06950.pdf]

- Chiori Hori, Takaaki Hori, Teng-Yok Lee, Kazuhiro Sumi, John R. Hershey, Tim K. Marks Attention-Based Multimodal Fusion for Video Description https://arxiv.org/abs/1701.03126

- Describing Videos using Multi-modal Fusion https://dl.acm.org/citation.cfm?id=2984065

- Andrew Shin, Katsunori Ohnishi, Tatsuya Harada, Beyond caption to narrative: Video captioning with multiple sentences http://ieeexplore.ieee.org/abstract/document/7532983/

- Jianfeng Dong, Xirong Li, Cees G. M. Snoek Word2VisualVec: Image and Video to Sentence Matching by Visual Feature Prediction https://pdfs.semanticscholar.org/de22/8875bc33e9db85123469ef80fc0071a92386.pdf

- Multimodal Video Description https://dl.acm.org/citation.cfm?id=2984066

- Xiaodan Liang, Zhiting Hu, Hao Zhang, Chuang Gan, Eric P. Xing Recurrent Topic-Transition GAN for Visual Paragraph Generation https://arxiv.org/abs/1703.07022

- Weakly Supervised Dense Video Captioning (CVPR 2017)

- Multi-Task Video Captioning with Video and Entailment Generation (ACL 2017)

Visual Question Answering

- Kushal Kafle, and Christopher Kanan. An Analysis of Visual Question Answering Algorithms. arXiv:1703.09684, 2017. [https://arxiv.org/abs/1703.09684]

- Hyeonseob Nam, Jung-Woo Ha, Jeonghee Kim, Dual Attention Networks for Multimodal Reasoning and Matching, arXiv:1611.00471, 2016. [https://arxiv.org/abs/1611.00471]

- Jin-Hwa Kim, Kyoung Woon On, Jeonghee Kim, Jung-Woo Ha, Byoung-Tak Zhang, Hadamard Product for Low-rank Bilinear Pooling, arXiv:1610.04325, 2016. [https://arxiv.org/abs/1610.04325]

- Akira Fukui, Dong Huk Park, Daylen Yang, Anna Rohrbach, Trevor Darrell, Marcus Rohrbach, Multimodal Compact Bilinear Pooling for Visual Question Answering and Visual Grounding, arXiv:1606.01847, 2016. [https://arxiv.org/abs/1606.01847] [code: https://github.com/akirafukui/vqa-mcb]

- Kuniaki Saito, Andrew Shin, Yoshitaka Ushiku, Tatsuya Harada, DualNet: Domain-Invariant Network for Visual Question Answering. arXiv:1606.06108v1, 2016. [https://arxiv.org/pdf/1606.06108.pdf]

- Arijit Ray, Gordon Christie, Mohit Bansal, Dhruv Batra, Devi Parikh, Question Relevance in VQA: Identifying Non-Visual And False-Premise Questions, arXiv:1606.06622, 2016. [https://arxiv.org/pdf/1606.06622v1.pdf]

- Hyeonwoo Noh, Bohyung Han, Training Recurrent Answering Units with Joint Loss Minimization for VQA, arXiv:1606.03647, 2016. [http://arxiv.org/abs/1606.03647v1]

- Jiasen Lu, Jianwei Yang, Dhruv Batra, Devi Parikh, Hierarchical Question-Image Co-Attention for Visual Question Answering, arXiv:1606.00061, 2016. [https://arxiv.org/pdf/1606.00061v2.pdf] [code: https://github.com/jiasenlu/HieCoAttenVQA]

- Jin-Hwa Kim, Sang-Woo Lee, Dong-Hyun Kwak, Min-Oh Heo, Jeonghee Kim, Jung-Woo Ha, Byoung-Tak Zhang, Multimodal Residual Learning for Visual QA, arXiv:1606.01455, 2016. [https://arxiv.org/pdf/1606.01455v1.pdf]

- Peng Wang, Qi Wu, Chunhua Shen, Anton van den Hengel, Anthony Dick, FVQA: Fact-based Visual Question Answering, arXiv:1606.05433, 2016. [https://arxiv.org/pdf/1606.05433.pdf]

- Ilija Ilievski, Shuicheng Yan, Jiashi Feng, A Focused Dynamic Attention Model for Visual Question Answering, arXiv:1604.01485. [https://arxiv.org/pdf/1604.01485v1.pdf]

- Yuke Zhu, Oliver Groth, Michael Bernstein, Li Fei-Fei, Visual7W: Grounded Question Answering in Images, CVPR 2016. [http://arxiv.org/abs/1511.03416]

- Hyeonwoo Noh, Paul Hongsuck Seo, and Bohyung Han, Image Question Answering using Convolutional Neural Network with Dynamic Parameter Prediction, CVPR, 2016.[http://arxiv.org/pdf/1511.05756.pdf]

- Jacob Andreas, Marcus Rohrbach, Trevor Darrell, Dan Klein, Learning to Compose Neural Networks for Question Answering, NAACL 2016. [http://arxiv.org/pdf/1601.01705.pdf]

- Jacob Andreas, Marcus Rohrbach, Trevor Darrell, Dan Klein, Deep compositional question answering with neural module networks, CVPR 2016. [https://arxiv.org/abs/1511.02799]

- Zichao Yang, Xiaodong He, Jianfeng Gao, Li Deng, Alex Smola, Stacked Attention Networks for Image Question Answering, CVPR 2016. [http://arxiv.org/abs/1511.02274] [code: https://github.com/JamesChuanggg/san-torch]

- Kevin J. Shih, Saurabh Singh, Derek Hoiem, Where To Look: Focus Regions for Visual Question Answering, CVPR, 2016. [http://arxiv.org/pdf/1511.07394v2.pdf]

- Kan Chen, Jiang Wang, Liang-Chieh Chen, Haoyuan Gao, Wei Xu, Ram Nevatia, ABC-CNN: An Attention Based Convolutional Neural Network for Visual Question Answering, arXiv:1511.05960v1, Nov 2015. [http://arxiv.org/pdf/1511.05960v1.pdf]

- Huijuan Xu, Kate Saenko, Ask, Attend and Answer: Exploring Question-Guided Spatial Attention for Visual Question Answering, arXiv:1511.05234v1, Nov 2015. [http://arxiv.org/abs/1511.05234]

- Kushal Kafle and Christopher Kanan, Answer-Type Prediction for Visual Question Answering, CVPR 2016. [http://www.cv-foundation.org/openaccess/content_cvpr_2016/html/Kafle_Answer-Type_Prediction_for_CVPR_2016_paper.html]

- Stanislaw Antol, Aishwarya Agrawal, Jiasen Lu, Margaret Mitchell, Dhruv Batra, C. Lawrence Zitnick, Devi Parikh, VQA: Visual Question Answering, ICCV, 2015. [http://arxiv.org/pdf/1505.00468] [code: https://github.com/JamesChuanggg/VQA-tensorflow]

- Bolei Zhou, Yuandong Tian, Sainbayar Sukhbaatar, Arthur Szlam, Rob Fergus, Simple Baseline for Visual Question Answering, arXiv:1512.02167v2, Dec 2015. [http://arxiv.org/abs/1512.02167]

- Haoyuan Gao, Junhua Mao, Jie Zhou, Zhiheng Huang, Lei Wang, Wei Xu, Are You Talking to a Machine? Dataset and Methods for Multilingual Image Question Answering, NIPS 2015. [http://arxiv.org/pdf/1505.05612.pdf]

- Mateusz Malinowski, Marcus Rohrbach, Mario Fritz, Ask Your Neurons: A Neural-based Approach to Answering Questions about Images, ICCV 2015. [http://arxiv.org/pdf/1505.01121v3.pdf]

- Mengye Ren, Ryan Kiros, Richard Zemel, Exploring Models and Data for Image Question Answering, ICML 2015. [http://arxiv.org/pdf/1505.02074.pdf]

- Mateusz Malinowski, Mario Fritz, Towards a Visual Turing Challenge, NIPS Workshop 2015. [http://arxiv.org/abs/1410.8027]

- Mateusz Malinowski, Mario Fritz, A Multi-World Approach to Question Answering about Real-World Scenes based on Uncertain Input, NIPS 2014. [http://arxiv.org/pdf/1410.0210v4.pdf]

- Hedi Ben-younes, Remi Cadene, Matthieu Cord, Nicolas Thome, MUTAN: Multimodal Tucker Fusion for Visual Question Answering [https://arxiv.org/pdf/1705.06676.pdf] [code: https://github.com/Cadene/vqa.pytorch]
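Several of the VQA papers above (multimodal compact bilinear pooling, low-rank bilinear pooling, MUTAN) fuse a question vector and an image vector with some form of bilinear pooling. Below is a minimal sketch of the low-rank idea behind "Hadamard Product for Low-rank Bilinear Pooling". Both inputs are projected into a shared space, passed through a nonlinearity, multiplied element-wise, and projected to answer scores. The tanh nonlinearity, the omitted bias terms, and the tiny hand-written weights are assumptions for illustration; real models learn U, V, and P. Matrices here are stored as (out_dim, in_dim), so `matvec(U, q)` plays the role of Uᵀq in the paper's notation.

```python
import math
from typing import List

Matrix = List[List[float]]  # row-major, shape (out_dim, in_dim)


def matvec(m: Matrix, v: List[float]) -> List[float]:
    """Matrix-vector product m @ v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def hadamard_fusion(q: List[float], img: List[float],
                    U: Matrix, V: Matrix, P: Matrix) -> List[float]:
    """Low-rank bilinear fusion: P (tanh(U q) * tanh(V img)), with biases omitted."""
    hq = [math.tanh(z) for z in matvec(U, q)]    # question projected to the joint space
    hv = [math.tanh(z) for z in matvec(V, img)]  # image feature projected to the joint space
    joint = [a * b for a, b in zip(hq, hv)]      # Hadamard (element-wise) product
    return matvec(P, joint)                      # one score per candidate answer


if __name__ == "__main__":
    U = [[1.0, 0.0], [0.0, 1.0]]
    V = [[0.0, 1.0], [1.0, 0.0]]
    P = [[1.0, 1.0], [1.0, -1.0]]
    print(hadamard_fusion([1.0, 0.0], [0.0, 1.0], U, V, P))
```

The element-wise product lets every joint dimension respond to both modalities at once. It avoids materializing the full outer product that plain bilinear pooling would need.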

Edge Detection

- Saining Xie, Zhuowen Tu, Holistically-Nested Edge Detection [http://arxiv.org/pdf/1504.06375] [https://github.com/s9xie/hed]

- Gedas Bertasius, Jianbo Shi, Lorenzo Torresani, DeepEdge: A Multi-Scale Bifurcated Deep Network for Top-Down Contour Detection, CVPR, 2015. [http://arxiv.org/pdf/1412.1123]

- Wei Shen, Xinggang Wang, Yan Wang, Xiang Bai, Zhijiang Zhang, DeepContour: A Deep Convolutional Feature Learned by Positive-Sharing Loss for Contour Detection, CVPR, 2015. [http://mc.eistar.net/UpLoadFiles/Papers/DeepContour_cvpr15.pdf]
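The edge detectors listed above learn edges end to end. For contrast, a classical Sobel gradient-magnitude detector fits in a few lines of pure Python. This is a different, hand-crafted technique and is not taken from any of these papers. The sketch assumes a grayscale image given as a list of rows and leaves border pixels at zero.

```python
import math
from typing import List

# Sobel kernels for horizontal (x) and vertical (y) intensity changes.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img: List[List[float]]) -> List[List[float]]:
    """Gradient magnitude on interior pixels; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            window = [[img[r + i - 1][c + j - 1] for j in range(3)] for i in range(3)]
            gx = sum(SOBEL_X[i][j] * window[i][j] for i in range(3) for j in range(3))
            gy = sum(SOBEL_Y[i][j] * window[i][j] for i in range(3) for j in range(3))
            out[r][c] = math.hypot(gx, gy)
    return out


if __name__ == "__main__":
    # A vertical step edge between columns 1 and 2.
    img = [[0, 0, 10, 10, 10] for _ in range(5)]
    print(sobel_magnitude(img)[2])  # → [0.0, 40.0, 40.0, 0.0, 0.0]
```

Strong responses appear only next to the step. Learned detectors such as HED aim to suppress texture edges that a fixed filter like this cannot distinguish from object boundaries.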

Human Pose Estimation

- Zhe Cao, Tomas Simon, Shih-En Wei, and Yaser Sheikh, Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields, CVPR, 2017.

- Leonid Pishchulin, Eldar Insafutdinov, Siyu Tang, Bjoern Andres, Mykhaylo Andriluka, Peter Gehler, and Bernt Schiele, Deepcut: Joint subset partition and labeling for multi person pose estimation, CVPR, 2016.

- Shih-En Wei, Varun Ramakrishna, Takeo Kanade, and Yaser Sheikh, Convolutional pose machines, CVPR, 2016.

- Alejandro Newell, Kaiyu Yang, and Jia Deng, Stacked hourglass networks for human pose estimation, ECCV, 2016.

- Tomas Pfister, James Charles, and Andrew Zisserman, Flowing convnets for human pose estimation in videos, ICCV, 2015.

- Jonathan J. Tompson, Arjun Jain, Yann LeCun, Christoph Bregler, Joint training of a convolutional network and a graphical model for human pose estimation, NIPS, 2014.

Image Generation

- Aäron van den Oord, Nal Kalchbrenner, Oriol Vinyals, Lasse Espeholt, Alex Graves, Koray Kavukcuoglu, "Conditional Image Generation with PixelCNN Decoders", NIPS, 2016. [https://arxiv.org/pdf/1606.05328v2.pdf] [https://github.com/kundan2510/pixelCNN]

- Alexey Dosovitskiy, Jost Tobias Springenberg, Thomas Brox, "Learning to Generate Chairs with Convolutional Neural Networks", CVPR, 2015. [http://www.cv-foundation.org/openaccess/content_cvpr_2015/papers/Dosovitskiy_Learning_to_Generate_2015_CVPR_paper.pdf]

- Karol Gregor, Ivo Danihelka, Alex Graves, Danilo Jimenez Rezende, Daan Wierstra, "DRAW: A Recurrent Neural Network For Image Generation", ICML, 2015. [https://arxiv.org/pdf/1502.04623v2.pdf]

- Ian J. Goodfellow, Jean Pouget-Abadie, Mehdi Mirza, Bing Xu, David Warde-Farley, Sherjil Ozair, Aaron Courville, Yoshua Bengio, Generative Adversarial Networks, NIPS, 2014. [http://arxiv.org/abs/1406.2661]

- Emily Denton, Soumith Chintala, Arthur Szlam, Rob Fergus, Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks, NIPS, 2015. [http://arxiv.org/abs/1506.05751]

- Lucas Theis, Aäron van den Oord, Matthias Bethge, "A note on the evaluation of generative models", ICLR 2016. [http://arxiv.org/abs/1511.01844]

- Zhenwen Dai, Andreas Damianou, Javier Gonzalez, Neil Lawrence, "Variationally Auto-Encoded Deep Gaussian Processes", ICLR 2016. [http://arxiv.org/pdf/1511.06455v2.pdf]

- Elman Mansimov, Emilio Parisotto, Jimmy Ba, Ruslan Salakhutdinov, "Generating Images from Captions with Attention", ICLR 2016, [http://arxiv.org/pdf/1511.02793v2.pdf]

- Jost Tobias Springenberg, "Unsupervised and Semi-supervised Learning with Categorical Generative Adversarial Networks", ICLR 2016, [http://arxiv.org/pdf/1511.06390v1.pdf]

- Harrison Edwards, Amos Storkey, "Censoring Representations with an Adversary", ICLR 2016, [http://arxiv.org/pdf/1511.05897v3.pdf]

- Takeru Miyato, Shin-ichi Maeda, Masanori Koyama, Ken Nakae, Shin Ishii, "Distributional Smoothing with Virtual Adversarial Training", ICLR 2016, [http://arxiv.org/pdf/1507.00677v8.pdf]

- Jun-Yan Zhu, Philipp Krahenbuhl, Eli Shechtman, and Alexei A. Efros, "Generative Visual Manipulation on the Natural Image Manifold", ECCV 2016. [https://arxiv.org/pdf/1609.03552v2.pdf] [https://github.com/junyanz/iGAN] [https://youtu.be/9c4z6YsBGQ0]

- Alec Radford, Luke Metz, Soumith Chintala, "Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks", ICLR 2016. [http://arxiv.org/pdf/1511.06434.pdf]

Courses

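- Stanford Vision Lab homepage: http://vision.stanford.edu/. Fei-Fei Li's group offers three courses, CS131, CS231A and CS231n, which are arguably the best computer vision courses available.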

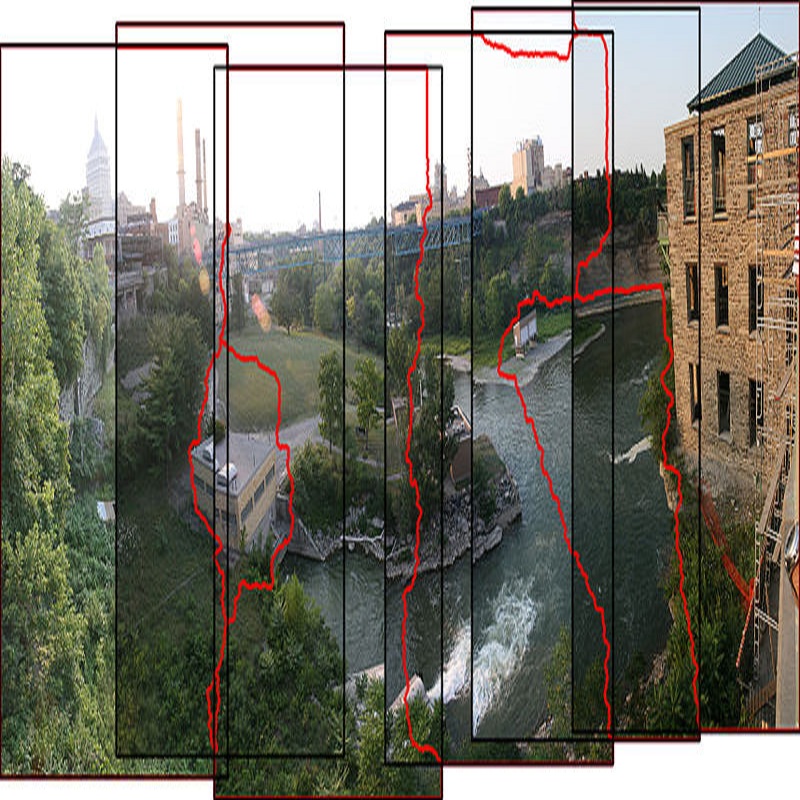

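- CS131 Computer Vision: Foundations and Applications: the fundamentals, mainly covering traditional edge detection, feature point description, camera calibration, panorama stitching, and related topics. [http://vision.stanford.edu/teaching/cs131_fall1415/schedule.html]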

- CS231A Computer Vision: from 3D reconstruction to recognition: [http://cvgl.stanford.edu/teaching/cs231a_winter1415/schedule.html]

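- CS231n 2017: Convolutional Neural Networks for Visual Recognition: mainly covers the detailed architecture of convolutional neural networks, the principles and optimization of each component, and a range of applications. [http://vision.stanford.edu/teaching/cs231n/] Mirror in China: [http://www.bilibili.com/video/av13260183/]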

- Stanford CS231n 2016 : Convolutional Neural Networks for Visual Recognition

- homepage: [http://cs231n.stanford.edu/]

- homepage: [http://vision.stanford.edu/teaching/cs231n/index.html]

- syllabus: [http://vision.stanford.edu/teaching/cs231n/syllabus.html]

- course notes: [http://cs231n.github.io/]

- youtube: [https://www.youtube.com/watch?v=NfnWJUyUJYU&feature=youtu.be]

- mirror: [http://pan.baidu.com/s/1pKsTivp]

- mirror: [http://pan.baidu.com/s/1c2wR8dy]

- NetEase (Chinese subtitles): http://study.163.com/course/introduction/1003223001.htm

- assignment 1: [http://cs231n.github.io/assignments2016/assignment1/]

- assignment 2: [http://cs231n.github.io/assignments2016/assignment2/]

- assignment 3: [http://cs231n.github.io/assignments2016/assignment3/]

- 1st Summer School on Deep Learning for Computer Vision, Barcelona (July 4-8, 2016)

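- A summer school course on deep learning for computer vision, covering the fundamentals as well as many applications of deep learning in computer vision, such as classification, detection, and captioning.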

- homepage(slides+videos): [http://imatge-upc.github.io/telecombcn-2016-dlcv/]

- homepage: [https://imatge.upc.edu/web/teaching/deep-learning-computer-vision]

- youtube: [https://www.youtube.com/user/imatgeupc/videos?shelf_id=0&sort=dd&view=0]

- 2nd Summer School on Deep Learning for Computer Vision, Barcelona (June 21-27, 2017): https://telecombcn-dl.github.io/2017-dlcv/

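- Computer Vision course lecture notes

- Machine Vision Group, National Laboratory of Pattern Recognition, Institute of Automation, Chinese Academy of Sciences

- Lecturers: 胡占义 (Zhanyi Hu), 董秋雷 (Qiulei Dong), 申抒含 (Shuhan Shen)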

- http://vision.ia.ac.cn/zh/teaching/

Tutorials

- Intro to Deep Learning for Computer Vision 2016 http://chaosmail.github.io/deeplearning/2016/10/22/intro-to-deep-learning-for-computer-vision/

- CVPR 2014 Tutorial on Deep Learning in Computer Vision https://sites.google.com/site/deeplearningcvpr2014/

- CVPR 2015 Applied Deep Learning for Computer Vision with Torch https://github.com/soumith/cvpr2015

- Deep Learning for Computer Vision – Introduction to Convolution Neural Networks http://www.analyticsvidhya.com/blog/2016/04/deep-learning-computer-vision-introduction-convolution-neural-networks/

- A Beginner's Guide To Understanding Convolutional Neural Networks [https://adeshpande3.github.io/adeshpande3.github.io/A-Beginner's-Guide-To-Understanding-Convolutional-Neural-Networks/]

- CVPR'17 Tutorial: Deep Learning for Objects and Scenes, by Kaiming He, Ross Girshick http://deeplearning.csail.mit.edu/

- CVPR tutorial : Large-Scale Visual Recognition http://www.europe.naverlabs.com/Research/Computer-Vision/Highlights/CVPR-tutorial-Large-Scale-Visual-Recognition

- CVPR’16 Tutorial on Image Tag Assignment, Refinement and Retrieval http://www.lambertoballan.net/2016/06/cvpr16-tutorial-image-tag-assignment-refinement-and-retrieval/

- Tutorial on Answering Questions about Images with Deep Learning. The tutorial was presented at the '2nd Summer School on Integrating Vision and Language: Deep Learning' in Malta, 2016. [https://arxiv.org/abs/1610.01076]

- "Semantic Segmentation for Scene Understanding: Algorithms and Implementations" tutorial [https://www.youtube.com/watch?v=pQ318oCGJGY]

- A tutorial on training recurrent neural networks, covering BPTT, RTRL, EKF and the "echo state network" approach [http://minds.jacobs-university.de/sites/default/files/uploads/papers/ESNTutorialRev.pdf] [http://deeplearning.cs.cmu.edu/notes/shaoweiwang.pdf]

- Towards Good Practices for Recognition & Detection by Hikvision Research Institute. Supervised Data Augmentation (SDA) [http://image-net.org/challenges/talks/2016/Hikvision_at_ImageNet_2016.pdf]

- Generative Adversarial Networks by Ian Goodfellow, NIPS 2016 tutorial [https://arxiv.org/abs/1701.00160] [http://www.iangoodfellow.com/slides/2016-12-04-NIPS.pdf]


Books

- Two classic textbooks, Computer Vision: A Modern Approach and Computer Vision: Algorithms and Applications. Read the first one before moving on to the second.

- Computer Vision: A Modern Approach by David A. Forsyth, Jean Ponce. English: http://cmuems.com/excap/readings/forsyth-ponce-computer-vision-a-modern-approach.pdf Chinese: https://pan.baidu.com/s/1min99eK

- Computer Vision: Algorithms and Applications by Richard Szeliski. English: http://szeliski.org/Book/drafts/SzeliskiBook_20100903_draft.pdf Chinese: https://pan.baidu.com/s/1mhYGtio

- Computer Vision: Models, Learning, and Inference by Simon J.D. Prince. The book's homepage also provides companion slides, code, tutorials, demos, and other resources. http://www.computervisionmodels.com/

- Challenges of Artificial Intelligence: From Machine Learning and Computer Vision to Emotional Intelligence by Matti Pietikäinen, Olli Silven (2021, 241-page PDF). [https://www.zhuanzhi.ai/vip/50e9f1f79b73461fb4931d271028369e]

Related Journals and Conferences

International Conferences

- CVPR, Computer Vision and Pattern Recognition. CVPR 2017: http://cvpr2017.thecvf.com/

- ICCV, International Conference on Computer Vision. ICCV 2017: http://iccv2017.thecvf.com/

- ECCV, European Conference on Computer Vision

- SIGGRAPH, Special Interest Group on Computer Graphics and Interactive Techniques. SIGGRAPH 2017: http://s2017.siggraph.org/

- ACM MM, ACM International Conference on Multimedia. ACM MM 2017: http://www.acmmm.org/2017/

- ICIP, International Conference on Image Processing. ICIP 2017: http://2017.ieeeicip.org/

Journals

- ACM Transactions on Graphics

- IEEE Communications Surveys and Tutorials

- IEEE Signal Processing Magazine

- IEEE Transactions on Evolutionary Computation

- IEEE Transactions on Geoscience and Remote Sensing (Zone 2)

- IEEE Transactions on Pattern Analysis and Machine Intelligence

- Neurocomputing (Zone 2)

- Pattern Recognition Letters (Zone 2)

- Proceedings of the IEEE

- Signal, Image and Video Processing (Zone 4)

- IEEE Journal on Selected Areas in Communications (Zone 2)

- IEEE Transactions on Image Processing (Zone 2)

- Journal of Visual Communication and Image Representation (Zone 3)

- Machine Vision and Applications (Zone 3)

- Pattern Recognition (Zone 2)

- Signal Processing: Image Communication (Zone 3)

- Computer Vision and Image Understanding (Zone 3)


- IET Image Processing (Zone 4)

- Artificial Intelligence (Zone 2)

- Machine Learning (Zone 3)

- Medical Image Analysis (Zone 2)

Domain Experts

North America

http://www.ics.uci.edu/~yyang8/extra/cv_giants.html

Other schools in no particular order:

- UCB (Malik, Darrell, Efros)

- UMD (Davis, Chellappa, Jacobs, Aloimonos, Doermann)

- UIUC (Forsyth, Hoiem, Ahuja, Lazebnik)

- UCSD (Kriegman)

- UT-Austin (Aggarwal, Grauman)

- Stanford (Fei-Fei Li, Savarese)

- USC (Nevatia, Medioni)

- Brown (Felzenszwalb, Hays, Sudderth)

- NYU (Rob Fergus)

- UC-Irvine (Fowlkes)

- UNC (Tamara Berg, Alex Berg, Jan-Michael Frahm)

- Columbia (Belhumeur, Shree Nayar, Shih-Fu Chang)

- Washington (Seitz, Farhadi)

- UMass, Amherst (Learned-Miller, Maji)

- Cornell Tech (Belongie)

- Virginia Tech (Batra, Parikh)

- Princeton (Xiao)

- Caltech (Perona)

http://www.it610.com/article/1770772.htm

Organizations and Research Institutes

- The Computer Vision Foundation → http://www.cv-foundation.org/ (a non-profit organization that fosters and supports research in all aspects of computer vision)

- Stanford Vision Lab → http://vision.stanford.edu/ It focuses on two intimately connected branches of vision research: computer vision and human vision.

- UC Berkeley Computer Vision Group → https://www2.eecs.berkeley.edu/Research/Projects/CS/vision/

- Face Recognition Homepage → http://www.face-rec.org/

- USC Computer Vision → http://iris.usc.edu/USC-Computer-Vision.html The Computer Vision Laboratory at the University of Southern California.

- iBUG, Intelligent Behaviour Understanding Group → https://ibug.doc.ic.ac.uk/ The core expertise of the iBUG group is the machine analysis of human behaviour in space and time, including face analysis, body gesture analysis, visual, audio, and multimodal analysis of human behaviour, and biometrics analysis.

Datasets

Detection

- PASCAL VOC 2009 dataset Classification/Detection Competitions, Segmentation Competition, Person Layout Taster Competition datasets

- LabelMe dataset LabelMe is a web-based image annotation tool that allows researchers to label images and share the annotations with the rest of the community. If you use the database, we only ask that you contribute to it, from time to time, by using the labeling tool.

- BioID Face Detection Database: 1521 images with human faces, recorded under natural conditions, i.e. varying illumination and complex background. The eye positions have been set manually.

- CMU/VASC & PIE Face dataset

- Yale Face dataset

- Caltech Cars, Motorcycles, Airplanes, Faces, Leaves, Backgrounds

- Caltech 101 Pictures of objects belonging to 101 categories

- Caltech 256 Pictures of objects belonging to 256 categories

- Daimler Pedestrian Detection Benchmark 15,560 pedestrian and non-pedestrian samples (image cut-outs) and 6744 additional full images not containing pedestrians for bootstrapping. The test set contains more than 21,790 images with 56,492 pedestrian labels (fully visible or partially occluded), captured from a vehicle in urban traffic.

- MIT Pedestrian dataset: CBCL Pedestrian Database

- CVC Pedestrian Datasets

- MIT Face dataset: CBCL Face Database

- MIT Car dataset: CBCL Car Database

- MIT Street dataset: CBCL Street Database

- INRIA Person Data Set A large set of marked up images of standing or walking people

- INRIA car dataset A set of car and non-car images taken in a parking lot nearby INRIA

- INRIA horse dataset A set of horse and non-horse images

- H3D Dataset 3D skeletons and segmented regions for 1000 people in images

- HRI RoadTraffic dataset A large-scale vehicle detection dataset

- BelgaLogos 10000 images of natural scenes, with 37 different logos, and 2695 logos instances, annotated with a bounding box.

- FlickrBelgaLogos 10000 images of natural scenes grabbed on Flickr, with 2695 logos instances cut and pasted from the BelgaLogos dataset.

- FlickrLogos-32 The dataset FlickrLogos-32 contains photos depicting logos and is meant for the evaluation of multi-class logo detection/recognition as well as logo retrieval methods on real-world images. It consists of 8240 images downloaded from Flickr.

- TME Motorway Dataset 30000+ frames with vehicle rear annotation and classification (cars and trucks) on motorway/highway sequences. Annotation semi-automatically generated using laser-scanner data. Distance estimation and consistent target ID over time available.

- PHOS (Color Image Database for illumination invariant feature selection) Phos is a color image database of 15 scenes captured under different illumination conditions. More particularly, every scene of the database contains 15 different images: 9 images captured under various strengths of uniform illumination, and 6 images under different degrees of non-uniform illumination. The images contain objects of different shape, color and texture and can be used for illumination invariant feature detection and selection.

- CaliforniaND: An Annotated Dataset For Near-Duplicate Detection In Personal Photo Collections California-ND contains 701 photos taken directly from a real user's personal photo collection, including many challenging non-identical near-duplicate cases, without the use of artificial image transformations. The dataset is annotated by 10 different subjects, including the photographer, regarding near duplicates.

- USPTO Algorithm Challenge, Detecting Figures and Part Labels in Patents Contains drawing pages from US patents with manually labeled figure and part labels.

- Abnormal Objects Dataset Contains 6 object categories similar to object categories in Pascal VOC that are suitable for studying the abnormalities stemming from objects.

- Human detection and tracking using RGB-D camera Collected in a clothing store. Captured with Kinect (640*480, about 30fps)

- Multi-Task Facial Landmark (MTFL) dataset This dataset contains 12,995 face images collected from the Internet. The images are annotated with (1) five facial landmarks, (2) attributes of gender, smiling, wearing glasses, and head pose.

- WIDER FACE: A Face Detection Benchmark WIDER FACE dataset is a face detection benchmark dataset with images selected from the publicly available WIDER dataset. It contains 32,203 images and 393,703 face annotations.

- PIROPO Database: People in Indoor ROoms with Perspective and Omnidirectional cameras Multiple sequences recorded in two different indoor rooms, using both omnidirectional and perspective cameras, containing people in a variety of situations (people walking, standing, and sitting). Both annotated and non-annotated sequences are provided, where ground truth is point-based. In total, more than 100,000 annotated frames are available.

Classification

- PASCAL VOC 2009 dataset Classification/Detection Competitions, Segmentation Competition, Person Layout Taster Competition datasets

- Caltech Cars, Motorcycles, Airplanes, Faces, Leaves, Backgrounds

- Caltech 101 Pictures of objects belonging to 101 categories

- Caltech 256 Pictures of objects belonging to 256 categories

- ETHZ Shape Classes A dataset for testing object class detection algorithms. It contains 255 test images and features five diverse shape-based classes (apple logos, bottles, giraffes, mugs, and swans).

- Flower classification data sets 17 Flower Category Dataset

- Animals with attributes A dataset for Attribute Based Classification. It consists of 30475 images of 50 animal classes with six pre-extracted feature representations for each image.

- Stanford Dogs Dataset Dataset of 20,580 images of 120 dog breeds with bounding-box annotation, for fine-grained image categorization.

- Video classification USAA dataset The USAA dataset includes 8 different semantic class videos which are home videos of social occasions featuring activities of groups of people. It contains around 100 videos each for training and testing. Each video is labeled by 69 attributes. The 69 attributes can be broken down into five broad classes: actions, objects, scenes, sounds, and camera movement.

- McGill Real-World Face Video Database This database contains 18000 video frames of 640x480 resolution from 60 video sequences, each of which recorded from a different subject (31 female and 29 male).

- e-Lab Video Data Set Video data sets to train machines to recognise objects in our environment. e-VDS35 has 35 classes and a total of 2050 videos of roughly 10 seconds each.

Recognition

- FGnet: Face and Gesture Recognition Working Group

- FERET Face Database

- PUT face 9971 images of 100 people

- Labeled Faces in the Wild A database of face photographs designed for studying the problem of unconstrained face recognition

- Urban scene recognition Traffic Lights Recognition, Lara's public benchmarks.

- PubFig: Public Figures Face Database The PubFig database is a large, real-world face dataset consisting of 58,797 images of 200 people collected from the internet. Unlike most other existing face datasets, these images are taken in completely uncontrolled situations with non-cooperative subjects.

- YouTube Faces The data set contains 3,425 videos of 1,595 different people. The shortest clip duration is 48 frames, the longest clip is 6,070 frames, and the average length of a video clip is 181.3 frames.

- MSRC-12: Kinect gesture data set The Microsoft Research Cambridge-12 Kinect gesture data set consists of sequences of human movements, represented as body-part locations, and the associated gesture to be recognized by the system.

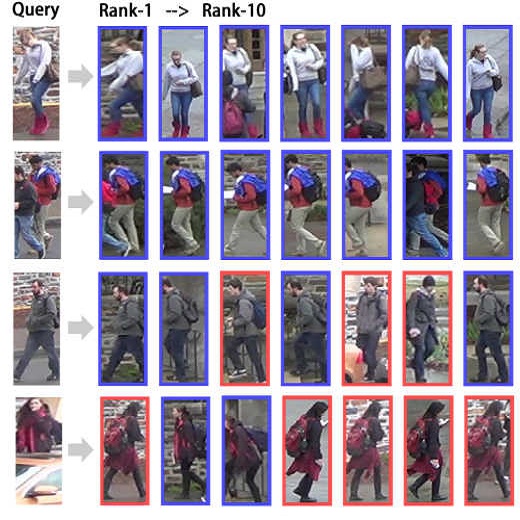

- QMUL underGround Re-IDentification (GRID) Dataset This dataset contains 250 pedestrian image pairs + 775 additional images captured in a busy underground station for the research on person re-identification.

- Person identification in TV series Face tracks, features and shot boundaries from the authors' CVPR 2013 paper. It is obtained from 6 episodes of Buffy the Vampire Slayer and 6 episodes of Big Bang Theory.

- ChokePoint Dataset ChokePoint is a video dataset designed for experiments in person identification/verification under real-world surveillance conditions. The dataset consists of 25 subjects (19 male and 6 female) in portal 1 and 29 subjects (23 male and 6 female) in portal 2.

- Hieroglyph Dataset Ancient Egyptian Hieroglyph Dataset.

- Rijksmuseum Challenge Dataset: Visual Recognition for Art Dataset Over 110,000 photographic reproductions of the artworks exhibited in the Rijksmuseum (Amsterdam, the Netherlands). Offers four automatic visual recognition challenges consisting of predicting the artist, type, material and creation year. Includes a set of baseline features, and offers a baseline based on state-of-the-art image features encoded with the Fisher vector.

- The OU-ISIR Gait Database, Treadmill Dataset Treadmill gait datasets composed of 34 subjects with 9 speed variations, 68 subjects with 68 subjects, and 185 subjects with various degrees of gait fluctuations.

- The OU-ISIR Gait Database, Large Population Dataset Large population gait datasets composed of 4,016 subjects.

- Pedestrian Attribute Recognition At Far Distance Large-scale PEdesTrian Attribute (PETA) dataset, covering more than 60 attributes (e.g. gender, age range, hair style, casual/formal) on 19000 images.

- FaceScrub Face Dataset The FaceScrub dataset is a real-world face dataset comprising 107,818 face images of 530 male and female celebrities detected in images retrieved from the Internet. The images are taken under real-world situations (uncontrolled conditions). Name and gender annotations of the faces are included.

- Depth-Based Person Identification Depth-Based Person Identification from Top View Dataset.

Tracking

- Dataset-AMP: Luka Čehovin Zajc, Alan Lukežič, Aleš Leonardis, Matej Kristan. "Beyond Standard Benchmarks: Parameterizing Performance Evaluation in Visual Object Tracking." ICCV (2017). [paper]

- Dataset-Nfs: Hamed Kiani Galoogahi, Ashton Fagg, Chen Huang, Deva Ramanan and Simon Lucey. "Need for Speed: A Benchmark for Higher Frame Rate Object Tracking." ICCV (2017). [paper] [supp] [project]

- Dataset-DTB70: Siyi Li, Dit-Yan Yeung. "Visual Object Tracking for Unmanned Aerial Vehicles: A Benchmark and New Motion Models." AAAI (2017). [paper] [project] [dataset]

- Dataset-UAV123: Matthias Mueller, Neil Smith and Bernard Ghanem. "A Benchmark and Simulator for UAV Tracking." ECCV (2016). [paper] [project] [dataset]

- Dataset-TColor-128: Pengpeng Liang, Erik Blasch, Haibin Ling. "Encoding color information for visual tracking: Algorithms and benchmark." TIP (2015). [paper] [project] [dataset]

- Dataset-NUS-PRO: Annan Li, Min Lin, Yi Wu, Ming-Hsuan Yang, and Shuicheng Yan. "NUS-PRO: A New Visual Tracking Challenge." PAMI (2015). [paper] [project] [Data_360 (code: bf28)] [Data_baidu] [View_360 (code: 515a)] [View_baidu]

- Dataset-PTB: Shuran Song and Jianxiong Xiao. "Tracking Revisited using RGBD Camera: Unified Benchmark and Baselines." ICCV (2013). [paper] [project] [5 validation] [95 evaluation]

- Dataset-ALOV300+: Arnold W. M. Smeulders, Dung M. Chu, Rita Cucchiara, Simone Calderara, Afshin Dehghan, Mubarak Shah. "Visual Tracking: An Experimental Survey." PAMI (2014). [paper] [project] Mirror Link: ALOV300, Mirror Link: ALOV300

- OTB2013: Wu, Yi, Jongwoo Lim, and Ming-Hsuan Yang. "Online Object Tracking: A Benchmark." CVPR (2013). [paper]

- OTB2015: Wu, Yi, Jongwoo Lim, and Ming-Hsuan Yang. "Object Tracking Benchmark." TPAMI (2015). [paper] [project]

- Dataset-VOT: [project]

[VOT13_paper_ICCV] The Visual Object Tracking VOT2013 challenge results

[VOT14_paper_ECCV] The Visual Object Tracking VOT2014 challenge results

[VOT15_paper_ICCV] The Visual Object Tracking VOT2015 challenge results

[VOT16_paper_ECCV] The Visual Object Tracking VOT2016 challenge results

[VOT17_paper_ICCV] The Visual Object Tracking VOT2017 challenge results

Segmentation

- Image Segmentation with A Bounding Box Prior dataset Ground truth database of 50 images with: Data, Segmentation, Labelling - Lasso, Labelling - Rectangle

- PASCAL VOC 2009 dataset Classification/Detection Competitions, Segmentation Competition, Person Layout Taster Competition datasets

- Motion Segmentation and OBJCUT data Cows for object segmentation, Five video sequences for motion segmentation

- Geometric Context Dataset: pixel labels for seven geometric classes for 300 images

- Crowd Segmentation Dataset This dataset contains videos of crowds and other high density moving objects. The videos are collected mainly from the BBC Motion Gallery and Getty Images website. The videos are shared only for the research purposes. Please consult the terms and conditions of use of these videos from the respective websites.

- CMU-Cornell iCoseg Dataset Contains hand-labelled pixel annotations for 38 groups of images, each group containing a common foreground. Approximately 17 images per group, 643 images total.

- Segmentation evaluation database 200 gray level images along with ground truth segmentations

- The Berkeley Segmentation Dataset and Benchmark Image segmentation and boundary detection. Grayscale and color segmentations for 300 images; the images are divided into a training set of 200 images and a test set of 100 images.

- Weizmann horses 328 side-view color images of horses that were manually segmented. The images were randomly collected from the WWW.

- Saliency-based video segmentation with sequentially updated priors 10 videos as inputs, and segmented image sequences as ground-truth

- Daimler Urban Segmentation Dataset The dataset consists of video sequences recorded in urban traffic. The dataset consists of 5000 rectified stereo image pairs. 500 frames come with pixel-level semantic class annotations into 5 classes: ground, building, vehicle, pedestrian, sky. Dense disparity maps are provided as a reference.

- DAVIS: Densely Annotated VIdeo Segmentation A Benchmark Dataset and Evaluation Methodology for Video Object Segmentation.

Foreground/Background

- Wallflower Dataset For evaluating background modelling algorithms

- Foreground/Background Microsoft Cambridge Dataset Foreground/Background segmentation and Stereo dataset from Microsoft Cambridge

- Stuttgart Artificial Background Subtraction Dataset The SABS (Stuttgart Artificial Background Subtraction) dataset is an artificial dataset for pixel-wise evaluation of background models.

- Image Alpha Matting Dataset

- LASIESTA: Labeled and Annotated Sequences for Integral Evaluation of SegmenTation Algorithms LASIESTA is composed of many real indoor and outdoor sequences organized in different categories, each covering a specific challenge in moving object detection strategies.

Saliency Detection (source)

- AIM 120 Images / 20 Observers (Neil D. B. Bruce and John K. Tsotsos 2005).

- LeMeur 27 Images / 40 Observers (O. Le Meur, P. Le Callet, D. Barba and D. Thoreau 2006).

- Kootstra 100 Images / 31 Observers (Kootstra, G., Nederveen, A. and de Boer, B. 2008).

- DOVES 101 Images / 29 Observers (van der Linde, I., Rajashekar, U., Bovik, A.C., Cormack, L.K. 2009).

- Ehinger 912 Images / 14 Observers (Krista A. Ehinger, Barbara Hidalgo-Sotelo, Antonio Torralba and Aude Oliva 2009).

- NUSEF 758 Images / 75 Observers (R. Subramanian, H. Katti, N. Sebe, M. Kankanhalli and T-S. Chua 2010).

- JianLi 235 Images / 19 Observers (Jian Li, Martin D. Levine, Xiangjing An and Hangen He 2011).

- Extended Complex Scene Saliency Dataset (ECSSD) ECSSD contains 1000 natural images with complex foreground or background. For each image, the ground truth mask of salient object(s) is provided.

Video Surveillance

- CAVIAR For the CAVIAR project a number of video clips were recorded acting out the different scenarios of interest. These include people walking alone, meeting with others, window shopping, entering and exiting shops, fighting and passing out and last, but not least, leaving a package in a public place.

- ViSOR ViSOR contains a large set of multimedia data and the corresponding annotations.

- CUHK Crowd Dataset 474 video clips from 215 crowded scenes, with ground truth on group detection and video classes.

- Times Square Intersection (TISI) Dataset A busy outdoor dataset for research on visual surveillance.

- Educational Resource Centre (ERCe) Dataset An indoor dataset collected from a university campus for physical event understanding of long video streams.

- PIROPO Database: People in Indoor ROoms with Perspective and Omnidirectional cameras Multiple sequences recorded in two different indoor rooms, using both omnidirectional and perspective cameras, containing people in a variety of situations (people walking, standing, and sitting). Both annotated and non-annotated sequences are provided, where ground truth is point-based. In total, more than 100,000 annotated frames are available.

Multiview

- 3D Photography Dataset Multiview stereo data sets: a set of images

- Multi-view Visual Geometry group's data set Dinosaur, Model House, Corridor, Aerial views, Valbonne Church, Raglan Castle, Kapel sequence

- Oxford reconstruction data set (building reconstruction) Oxford colleges

- Multi-View Stereo dataset (Vision Middlebury) Temple, Dino

- Multi-View Stereo for Community Photo Collections Venus de Milo, Duomo in Pisa, Notre Dame de Paris

- IS-3D Data Dataset provided by Center for Machine Perception

- CVLab dataset CVLab dense multi-view stereo image database

- 3D Objects on Turntable Objects viewed from 144 calibrated viewpoints under 3 different lighting conditions

- Object Recognition in Probabilistic 3D Scenes Images from 19 sites collected from a helicopter flying around Providence, RI. USA. The imagery contains approximately a full circle around each site.

- Multiple cameras fall dataset 24 scenarios recorded with 8 IP video cameras. The first 22 scenarios contain a fall and confounding events; the last 2 contain only confounding events.

- CMP Extreme View Dataset 15 wide baseline stereo image pairs with large viewpoint change, with ground-truth homographies provided.

- KTH Multiview Football Dataset II This dataset consists of 8000+ images of professional footballers during a match of the Allsvenskan league. It consists of two parts: one with ground truth pose in 2D and one with ground truth pose in both 2D and 3D.

- Disney Research light field datasets This dataset includes: camera calibration information, raw input images we have captured, radially undistorted, rectified, and cropped images, depth maps resulting from our reconstruction and propagation algorithm, depth maps computed at each available view by the reconstruction algorithm without the propagation applied.

- CMU Panoptic Studio Dataset Multiple people social interaction dataset captured by 500+ synchronized video cameras, with 3D full body skeletons and calibration data.

- 4D Light Field Dataset 24 synthetic scenes. Available data per scene: 9x9 input images (512x512x3) , ground truth (disparity and depth), camera parameters, disparity ranges, evaluation masks.

Action

- UCF Sports Action Dataset This dataset consists of a set of actions collected from various sports which are typically featured on broadcast television channels such as the BBC and ESPN. The video sequences were obtained from a wide range of stock footage websites including BBC Motion gallery, and GettyImages.

- UCF Aerial Action Dataset This dataset features video sequences that were obtained using a R/C-controlled blimp equipped with an HD camera mounted on a gimbal. The collection represents a diverse pool of actions featured at different heights and aerial viewpoints. Multiple instances of each action were recorded at different flying altitudes which ranged from 400-450 feet and were performed by different actors.

- UCF YouTube Action Dataset It contains 11 action categories collected from YouTube.

- Weizmann action recognition Walk, Run, Jump, Gallop sideways, Bend, One-hand wave, Two-hands wave, Jump in place, Jumping Jack, Skip.

- UCF50 UCF50 is an action recognition dataset with 50 action categories, consisting of realistic videos taken from YouTube.

- ASLAN The Action Similarity Labeling (ASLAN) Challenge.

- MSR Action Recognition Datasets The dataset was captured by a Kinect device. There are 12 dynamic American Sign Language (ASL) gestures, and 10 people. Each person performs each gesture 2-3 times.

- KTH Recognition of human actions Contains six types of human actions (walking, jogging, running, boxing, hand waving and hand clapping) performed several times by 25 subjects in four different scenarios: outdoors, outdoors with scale variation, outdoors with different clothes and indoors.

- Hollywood-2 Human Actions and Scenes dataset The Hollywood-2 dataset contains 12 classes of human actions and 10 classes of scenes distributed over 3669 video clips, with approximately 20.1 hours of video in total.

- Collective Activity Dataset This dataset contains five collective activities (crossing, walking, waiting, talking, and queueing) and 44 short video sequences, some of which were recorded with a consumer hand-held digital camera from varying viewpoints.

- Olympic Sports Dataset The Olympic Sports Dataset contains YouTube videos of athletes practicing different sports.

- SDHA 2010 Surveillance-type videos

- VIRAT Video Dataset The dataset is designed to be more realistic, natural, and challenging for video surveillance domains than existing action recognition datasets in terms of its resolution, background clutter, diversity in scenes, and human activity/event categories.

- HMDB: A Large Video Database for Human Motion Recognition Collected from various sources, mostly movies, with a small proportion from public databases, YouTube, and Google videos. The dataset contains 6849 clips divided into 51 action categories, each containing a minimum of 101 clips.

- Stanford 40 Actions Dataset Dataset of 9,532 images of humans performing 40 different actions, annotated with bounding-boxes.

- 50Salads dataset Fully annotated dataset of RGB-D video and data from accelerometers attached to kitchen objects, capturing 25 people who each prepare two mixed salads (4.5 h of annotated data). Annotated activities correspond to steps in the recipe and include the phase (pre-, core-, or post-) and the ingredient acted upon.

- Penn Sports Action The dataset contains 2326 video sequences of 15 different sport actions and human body joint annotations for all sequences.

- CVRR-HANDS 3D A Kinect dataset for hand detection in naturalistic driving settings, as well as a challenging dataset for recognizing 19 dynamic hand gestures in human-machine interfaces.

- TUM Kitchen Data Set Observations of several subjects setting a table in different ways. Contains videos, motion capture data, RFID tag readings,...

- TUM Breakfast Actions Dataset This dataset comprises 10 actions related to breakfast preparation, performed by 52 different individuals in 18 different kitchens.

- MPII Cooking Activities Dataset Cooking Activities dataset.

- GTEA Gaze+ Dataset This dataset consists of seven meal-preparation activities, each performed by 10 subjects. Subjects perform the activities based on the given cooking recipes.

- UTD-MHAD: Multimodal Human Action Recognition Dataset The dataset consists of four temporally synchronized data modalities: RGB videos, depth videos, skeleton positions, and inertial signals (3-axis acceleration and 3-axis angular velocity), captured with a Kinect RGB-D camera and a wearable inertial sensor for a comprehensive set of 27 human actions.
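The UTD-MHAD entry describes modalities recorded by two different devices. As an illustration of how such streams can be paired frame by frame, here is a minimal nearest-timestamp alignment sketch. The 30 Hz video and 50 Hz inertial rates are assumptions chosen for the example, not values from the entry, and `align_nearest` is a hypothetical helper.

```python
import bisect


def align_nearest(frame_times: list[float], sample_times: list[float]) -> list[int]:
    """For each video frame time, return the index of the nearest sensor sample.

    Both lists are timestamps in seconds, sorted ascending; sample_times must be
    non-empty. On an exact tie the earlier sample wins.
    """
    matches = []
    for t in frame_times:
        i = bisect.bisect_left(sample_times, t)  # first sample with time >= t
        if i == 0:
            matches.append(0)
        elif i == len(sample_times):
            matches.append(len(sample_times) - 1)
        else:
            before, after = sample_times[i - 1], sample_times[i]
            matches.append(i if after - t < t - before else i - 1)
    return matches


# Hypothetical rates for illustration: 30 Hz video, 50 Hz inertial sensor.
frames = [k / 30 for k in range(6)]
imu = [k / 50 for k in range(10)]
print(align_nearest(frames, imu))  # [0, 2, 3, 5, 7, 8]
```

A real pipeline would read the actual timestamps or sampling rates from the dataset files rather than the synthetic ones used here.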

Human pose/Expression

- AFEW (Acted Facial Expressions In The Wild)/SFEW (Static Facial Expressions In The Wild) A dynamic temporal facial expression corpus extracted from movies, with conditions close to real-world environments.

- Expression in-the-Wild (ExpW) Dataset Contains 91,793 faces manually labeled with expressions. Each of the face images was manually annotated as one of the seven basic expression categories: "angry", "disgust", "fear", "happy", "sad", "surprise", or "neutral".

- ETHZ CALVIN Dataset CALVIN research group datasets

- HandNet (annotated depth images of articulating hands) This dataset includes 214,971 annotated depth images of hand poses captured with a RealSense RGB-D sensor. Annotations: per-pixel classes, 6D fingertip pose, and heatmap. Images: train 202,198, test 10,000, validation 2,773. Recorded at GIP Lab, Technion.

- 3D Human Pose Estimation Depth videos + ground truth human poses from 2 viewpoints to improve 3D human pose estimation.
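The HandNet split sizes listed above can be checked against the stated total with a few lines of arithmetic. This is only a consistency check on the numbers in that entry; `split_fractions` is a hypothetical helper.

```python
# Split sizes copied from the HandNet entry; the helper name is hypothetical.
HANDNET_TOTAL = 214971
HANDNET_SPLITS = {"train": 202198, "test": 10000, "validation": 2773}


def split_fractions(splits: dict[str, int]) -> dict[str, float]:
    """Return each split's share of the summed image count."""
    total = sum(splits.values())
    return {name: n / total for name, n in splits.items()}


# The three splits add up exactly to the stated total.
assert sum(HANDNET_SPLITS.values()) == HANDNET_TOTAL

for name, frac in split_fractions(HANDNET_SPLITS).items():
    print(f"{name}: {frac:.1%}")
# train: 94.1%
# test: 4.7%
# validation: 1.3%
```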

Medical

- VIP Laparoscopic / Endoscopic Dataset Collection of endoscopic and laparoscopic (mono/stereo) videos and images

- Mouse Embryo Tracking Database DB Contains 100 examples with the uncompressed frames up to the 10th frame after the appearance of the 8th cell, a text file with the trajectories of all cells from appearance to division, and a movie file showing the cell trajectories.

- FIRE Fundus Image Registration Dataset 134 retinal image pairs and ground truth for registration.

Misc

- Zurich Buildings Database The ZuBuD image database contains over 1005 images of buildings in the city of Zurich.

- Color Name Data Sets

- Mall dataset The mall dataset was collected from a publicly accessible webcam for crowd counting and activity profiling research.

- QMUL Junction Dataset A busy traffic dataset for research on activity analysis and behaviour understanding.

- Miracl-VC1 Miracl-VC1 is a lip-reading dataset that includes both depth and color images. Fifteen speakers, positioned within the frustum of a Microsoft Kinect sensor, each utter a set of ten words and ten phrases ten times.

- NYU Symmetry Database The mirror symmetry database contains 176 single-symmetry and 63 multiple-symmetry images (.png files) with accompanying ground-truth annotations (.mat files).

- RGB-W: When Vision Meets Wireless RGB data paired with the wireless signals emitted by individuals' cell phones, a combination referred to as RGB-W.
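The Miracl-VC1 recording protocol in the Misc list (15 speakers, each repeating 10 words and 10 phrases 10 times) implies 15 x (10 + 10) x 10 = 3000 utterances. A small enumeration makes the count explicit. The `(speaker, kind, item, repetition)` key layout is hypothetical and not the dataset's actual file naming.

```python
from itertools import product

SPEAKERS, WORDS, PHRASES, REPETITIONS = 15, 10, 10, 10

# Hypothetical key layout (not the dataset's file naming): each utterance is
# identified by (speaker, kind, item, repetition), all counters 1-based.
items = [("word", i) for i in range(1, WORDS + 1)] + [
    ("phrase", i) for i in range(1, PHRASES + 1)
]
keys = [
    (speaker, kind, item, rep)
    for speaker, (kind, item), rep in product(
        range(1, SPEAKERS + 1), items, range(1, REPETITIONS + 1)
    )
]

print(len(keys))  # 3000
print(keys[0])    # (1, 'word', 1, 1)
print(sum(1 for k in keys if k[1] == "phrase"))  # 1500
```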

Challenge

- Microsoft COCO Image Captioning Challenge https://competitions.codalab.org/competitions/3221

- ImageNet Large Scale Visual Recognition Challenge http://www.image-net.org/

- COCO 2017 Detection Challenge http://cocodataset.org/#detections-challenge2017

- Visual Domain Adaptation (VisDA2017) Segmentation Challenge https://competitions.codalab.org/competitions/17054

- The PASCAL Visual Object Classes Homepage http://host.robots.ox.ac.uk/pascal/VOC/

- YouTube-8M Large-Scale Video Understanding https://research.google.com/youtube8m/workshop.html

- Joint COCO and Places Challenge https://places-coco2017.github.io/

- Places Challenge 2017: Deep Scene Understanding, held jointly with the COCO Challenge at ICCV'17 http://placeschallenge.csail.mit.edu/

- COCO Challenges. http://cocodataset.org/#home

- CBCL StreetScenes Challenge Framework http://cbcl.mit.edu/software-datasets/streetscenes/

- CoronARe: A Coronary Artery Reconstruction Challenge https://challenge.kitware.com/#phase/58d2925ecad3a532cfa20e37

- NUS-PRO: A New Visual Tracking Challenge http://www.visionbib.com/bibliography/journal/pam.html#PAMI(38)

- i-LIDS: Bag and vehicle detection challenge http://www.elec.qmul.ac.uk/staffinfo/andrea/avss2007_d.html

- The Action Similarity Labeling Challenge http://www.visionbib.com/bibliography/journal/pam.html#PAMI(34)

- The PASCAL Visual Object Classes Challenge 2012 http://www.visionbib.com/bibliography/journal-listeu.html#TT1663

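Startups

- Megvii (旷视科技): Enabling machines to understand the world [https://megvii.com/]

- CloudWalk (云从科技): Face recognition technology that traces back to the "father of computer vision" [http://www.cloudwalk.cn/]

- DeepGlint (格灵深瞳): Enabling computers to understand the world [http://www.deepglint.com/]

- Beijing Moshanghua Technology Co., Ltd. (北京陌上花科技有限公司): AI computer vision engine [http://www.dressplus.cn/]

- YITU Technology (依图科技): Building the future of computer vision together with you [http://www.yitutech.com/]

- Malong Technologies (码隆科技): The most fashionable AI [https://www.malong.com/]

- Linkface (脸云科技): World-leading face recognition technology services [https://www.linkface.cn/]

- Qfeeltech (速感科技): Helping robots understand the world, and using robots to change the world [http://www.qfeeltech.com/]

- TuSimple (图森): The frontrunner in commercializing autonomous driving in China [http://www.tusimple.com/]

- SenseTime (商汤科技): Teaching computers to understand the world [https://www.sensetime.com/]

- Tupu Technology (图普科技): Focused on image recognition [https://us.tuputech.com/?from=gz]

- HiScene (亮风台): Focused on augmented reality, leading human-computer interaction [https://www.hiscene.com/]

- SeetaTech (中科视拓): Knowing people, recognizing faces, discerning all things; empowering shared development through open source [http://www.seetatech.com/]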

WeChat Official Accounts

- 视觉求索, WeChat ID: thevisionseeker

- 深度学习大讲堂, WeChat ID: deeplearningclass

- VALSE, WeChat ID: valse_wechat
