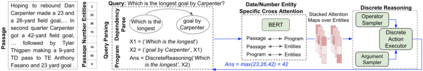

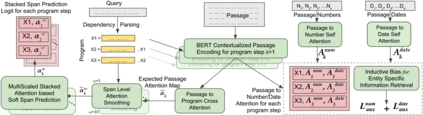

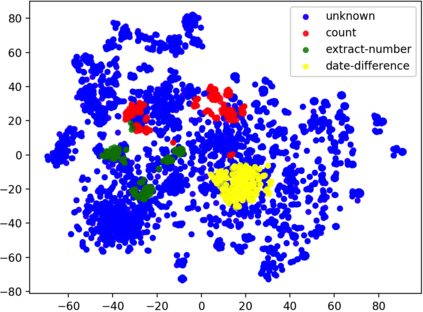

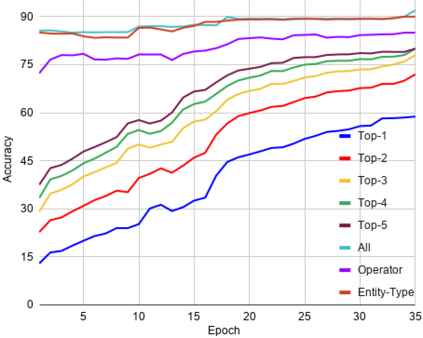

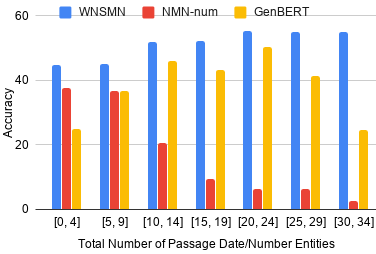

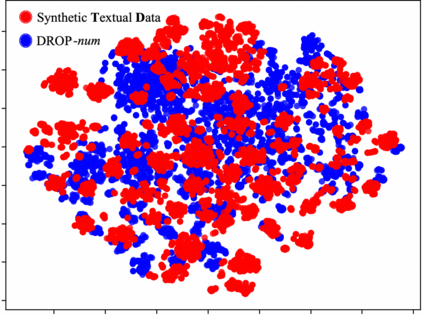

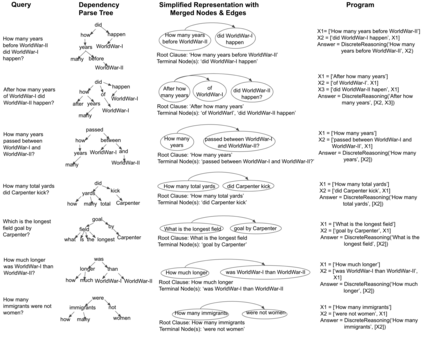

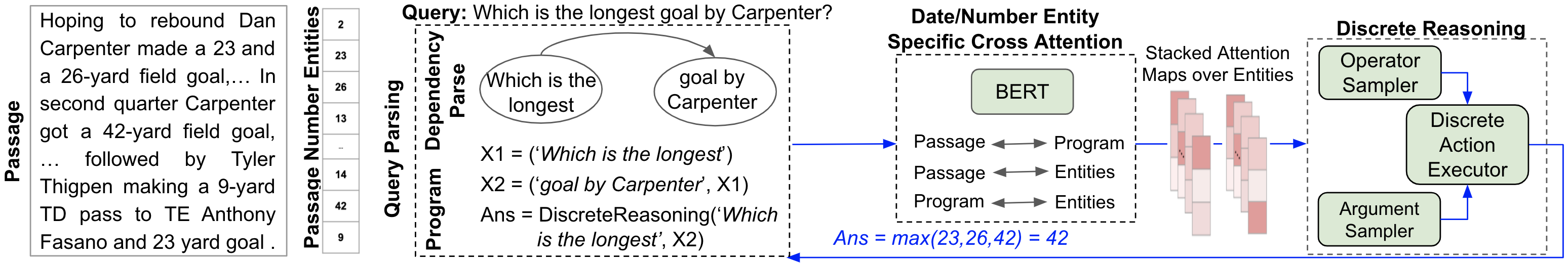

Neural Module Networks (NMNs) have been quite successful at incorporating explicit reasoning as learnable modules in various question answering tasks, including the most generic form of numerical reasoning over text in Machine Reading Comprehension (MRC). However, to achieve this, contemporary NMNs need strong supervision for executing the query as a specialized program over reasoning modules, and they fail to generalize to more open-ended settings without such supervision. Hence, we propose the Weakly-Supervised Neuro-Symbolic Module Network (WNSMN), trained with answers as the sole supervision for numerical-reasoning-based MRC. It learns to execute a noisy heuristic program, obtained from the dependency parse of the query, as discrete actions over both neural and symbolic reasoning modules, and is trained end-to-end in a reinforcement learning framework with a discrete reward from answer matching. On the numerical-answer subset of DROP, WNSMN outperforms NMN by 32% and the reasoning-free language model GenBERT by 8% in exact match accuracy when trained under comparable weakly supervised settings. This showcases the effectiveness and generalizability of modular networks that can handle explicit discrete reasoning over noisy programs in an end-to-end manner.
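The training signal described above, discrete actions over symbolic modules rewarded only by answer matching, can be illustrated with a deliberately tiny sketch. It is not WNSMN: the operation set, the `train` function, and the single shared logit per operation are assumptions made for illustration, the policy does not condition on the query or passage, and plain REINFORCE without a baseline stands in for whatever policy-gradient variant the authors use.

```python
import math
import random

# Hypothetical symbolic "modules": each maps a list of numbers to one number.
OPERATIONS = {
    "sum": lambda xs: sum(xs),
    "difference": lambda xs: max(xs) - min(xs),
    "max": lambda xs: max(xs),
    "min": lambda xs: min(xs),
    "count": lambda xs: len(xs),
}
OP_NAMES = list(OPERATIONS)


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def train(examples, steps=2000, lr=0.5, seed=0):
    """REINFORCE over a single categorical choice of operation.

    examples: list of (numbers, gold_answer) pairs. The gold answer is the
    only supervision; no gold program or operation label is ever given.
    """
    rng = random.Random(seed)
    logits = [0.0] * len(OP_NAMES)  # toy policy: one logit per operation
    for _ in range(steps):
        numbers, gold = rng.choice(examples)
        probs = softmax(logits)
        # Sample a discrete action (which symbolic operation to execute).
        action = rng.choices(range(len(OP_NAMES)), weights=probs)[0]
        prediction = OPERATIONS[OP_NAMES[action]](numbers)
        # Discrete reward from exact answer matching.
        reward = 1.0 if prediction == gold else 0.0
        # d log pi(action) / d logit_i = 1[i == action] - probs[i]
        for i in range(len(logits)):
            grad = (1.0 if i == action else 0.0) - probs[i]
            logits[i] += lr * reward * grad
    return logits
```

Calling `train([([7, 3], 4), ([10, 4], 6), ([21, 14], 7)])` gives the policy examples whose answers only the `difference` operation reproduces, so that operation ends up with the highest logit even though no example says which operation to use. A real system would additionally need to pick the numeric arguments from the passage and condition the choice on the query, which this sketch leaves out.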
