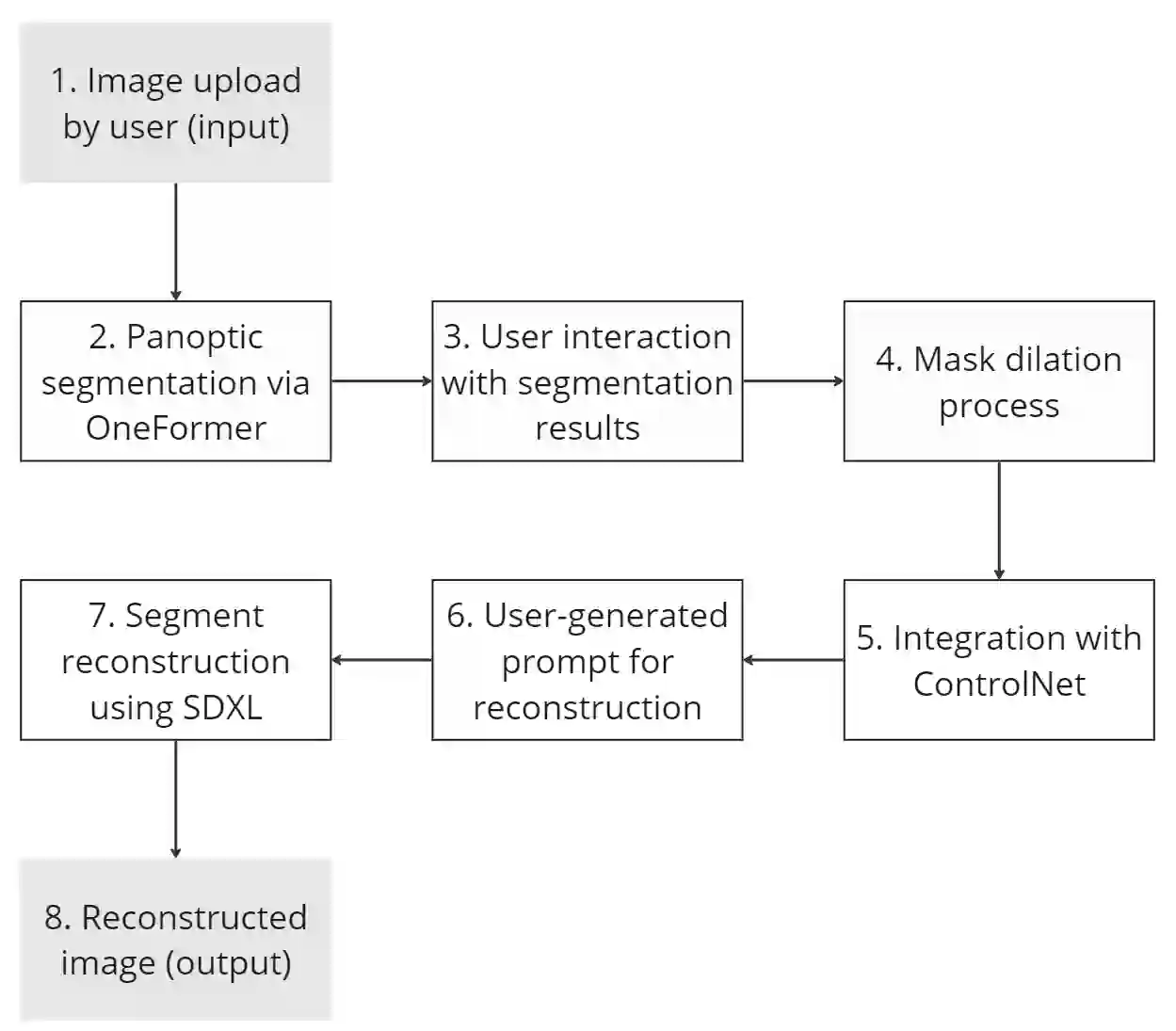

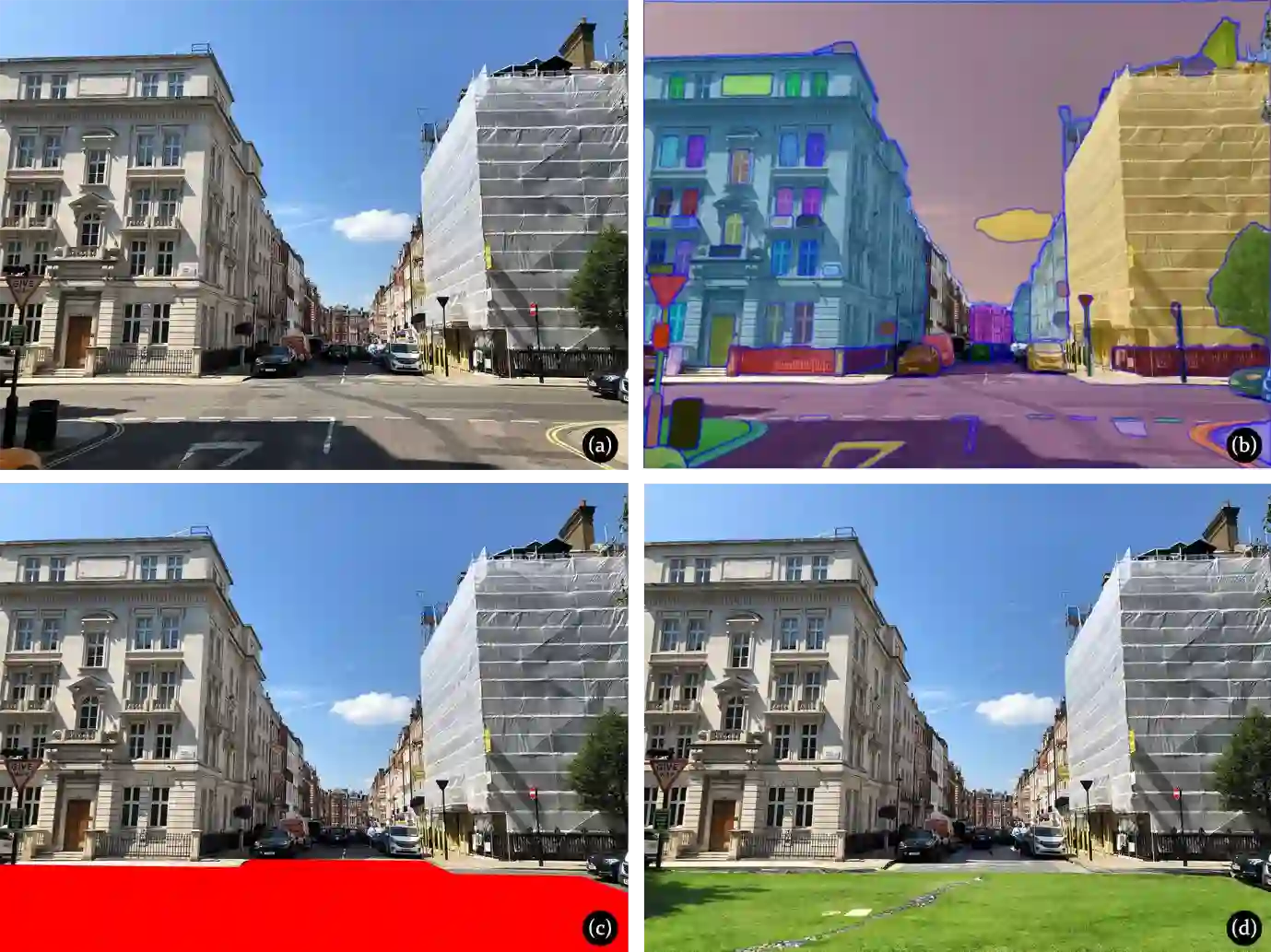

In contemporary design practice, the integration of computer vision and generative artificial intelligence (genAI) marks a shift towards more interactive and inclusive processes. These technologies open new possibilities for image analysis and generation that are particularly relevant to urban landscape reconstruction. This paper presents a novel workflow, implemented in a prototype application, UrbanGenAI, that combines advanced image segmentation with diffusion models to support a comprehensive approach to urban design. Our methodology uses the OneFormer model for detailed image segmentation and the Stable Diffusion XL (SDXL) diffusion model, implemented through ControlNet, for generating images from textual descriptions. Validation results indicate that the prototype performs well in both object detection and text-to-image generation, as evidenced by high Intersection over Union (IoU) and CLIP scores across iterative evaluations for various categories of urban landscape features. Preliminary testing included utilising UrbanGenAI as an educational tool to enhance the learning experience in design pedagogy, and as a participatory instrument to facilitate community-driven urban planning. Early results suggest that UrbanGenAI not only advances the technical frontiers of urban landscape reconstruction but also offers pedagogical and participatory planning benefits. Ongoing development aims to further validate its effectiveness across broader contexts and to integrate additional features such as real-time feedback mechanisms and 3D modelling capabilities.

Keywords: generative AI; panoptic image segmentation; diffusion models; urban landscape design; design pedagogy; co-design
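For readers unfamiliar with the segmentation metric named above, the following is a minimal sketch of how Intersection over Union can be computed for a single class from two binary masks. It is an illustration only, not the evaluation code used for UrbanGenAI: the function name `mask_iou`, the nested-list mask representation, and the convention for two empty masks are all assumptions made here.

```python
def mask_iou(pred, truth):
    """IoU between two equally shaped binary masks given as nested lists of 0/1.

    Hypothetical helper for illustration; not taken from the paper.
    """
    intersection = 0
    union = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            # A pixel is in the intersection if both masks mark it,
            # and in the union if at least one mask marks it.
            intersection += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Assumed convention: two empty masks count as a perfect match.
    return intersection / union if union else 1.0


# Toy 2x3 masks: 2 pixels overlap, 4 pixels are covered by either mask.
predicted = [[1, 1, 0],
             [0, 1, 0]]
reference = [[1, 0, 0],
             [0, 1, 1]]
print(mask_iou(predicted, reference))  # 0.5
```

In a per-category evaluation such as the one described, a score like this would typically be computed for each class mask separately and then reported per category or averaged.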
