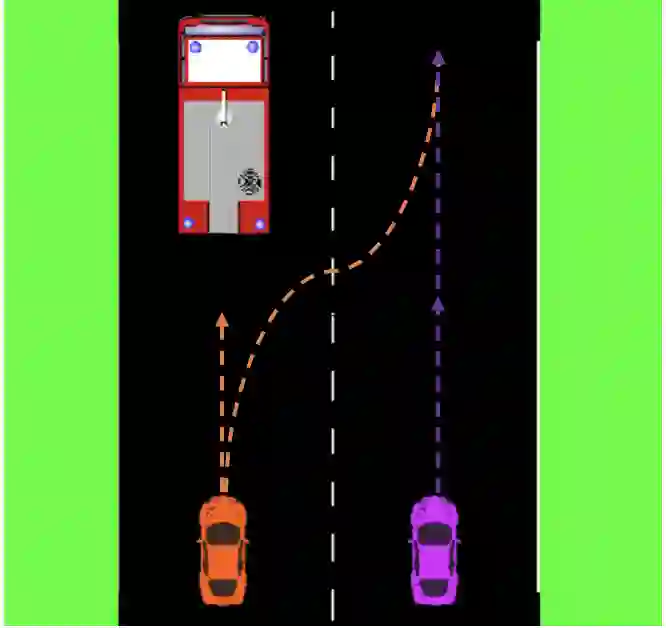

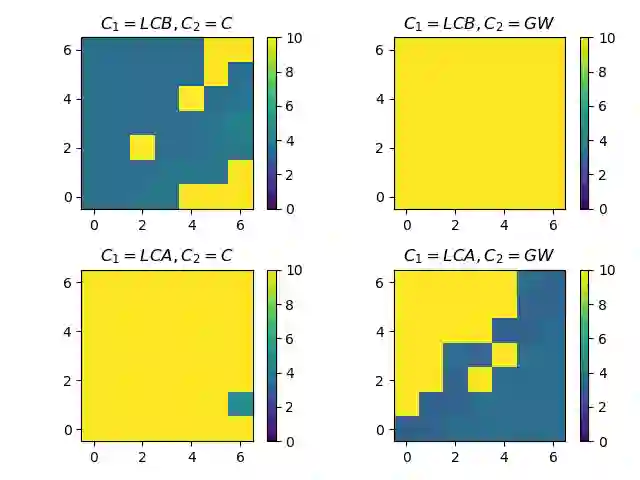

Recent work on decision making and planning for autonomous driving has made use of game-theoretic methods to model interaction between agents. We demonstrate that methods based on the Stackelberg game formulation of this problem are susceptible to an issue that we refer to as conflict. Our results show that when conflict occurs, it causes sub-optimal and potentially dangerous behaviour. In response, we develop a theoretical framework for analysing the extent to which such methods are impacted by conflict, and apply this framework to several existing approaches to modelling interaction between agents. Moreover, we propose Augmented Altruism, a novel approach to modelling interaction between players in a Stackelberg game, and show that it is less prone to conflict than previous techniques. Finally, we investigate the behavioural assumptions that underpin our approach by performing experiments with human participants. The results show that our model explains human decision-making better than existing game-theoretic models of interactive driving.
