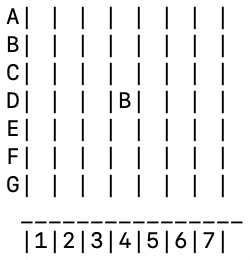

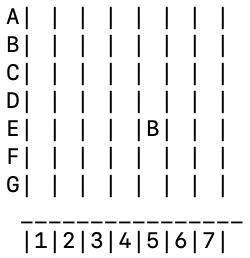

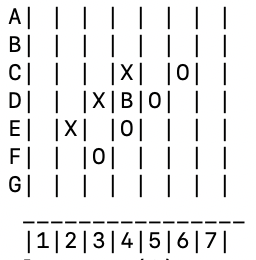

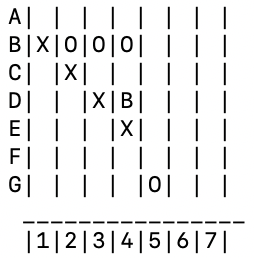

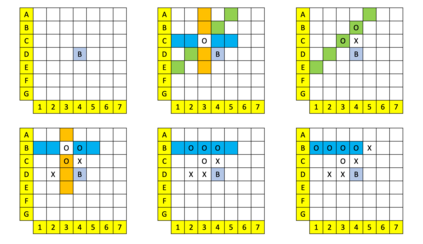

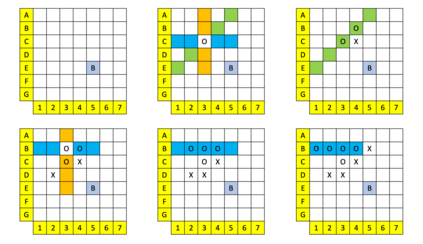

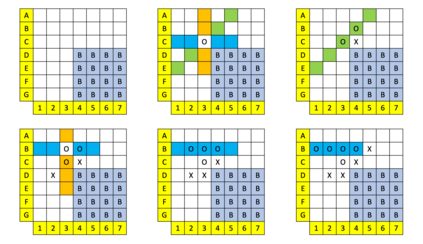

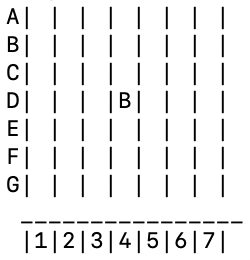

Traditional reinforcement learning (RL) environments typically are the same for both the training and testing phases. Hence, current RL methods are largely not generalizable to a test environment which is conceptually similar but different from what the method has been trained on, which we term the novel test environment. As an effort to push RL research towards algorithms which can generalize to novel test environments, we introduce the Brick Tic-Tac-Toe (BTTT) test bed, where the brick position in the test environment is different from that in the training environment. Using a round-robin tournament on the BTTT environment, we show that traditional RL state-search approaches such as Monte Carlo Tree Search (MCTS) and Minimax are more generalizable to novel test environments than AlphaZero is. This is surprising because AlphaZero has been shown to achieve superhuman performance in environments such as Go, Chess and Shogi, which may lead one to think that it performs well in novel test environments. Our results show that BTTT, though simple, is rich enough to explore the generalizability of AlphaZero. We find that merely increasing MCTS lookahead iterations was insufficient for AlphaZero to generalize to some novel test environments. Rather, increasing the variety of training environments helps to progressively improve generalizability across all possible starting brick configurations.

翻译:传统的强化学习( RL) 环境通常在培训和测试阶段都是一样的。 因此, 目前的 RL 方法基本上无法在概念上相似但与所培训方法不同的测试环境, 我们称之为新测试环境。 作为努力将RL 研究推向算法, 可以向创新测试环境推广, 我们引入 Brick Tic- Tac- Toe (BTTT) 测试床, 测试环境中的砖位与培训环境中的砖位不同。 在 BTT 环境中, 我们使用圆环环赛, 显示传统的 RL 状态研究方法, 如 Monte Carlo Tree Search (MCTS) 和 Minimax 等, 在概念上与该方法相似, 我们称之为新测试环境。 令人惊讶的是, AlphaZero 已证明在像 Go、 Chesss 和 Shogi ( BTTTTT) 这样的环境下, 可能会让人觉得它在新的测试环境中表现良好。 我们的结果表明, BTTTT 十分简单, 足以探索 AlpheZerororo的通用配置。 我们发现, 只是的测试环境在普通环境上只是增加了它在普通的测试范围中可能。