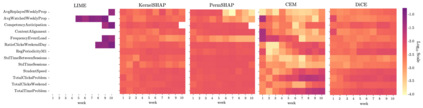

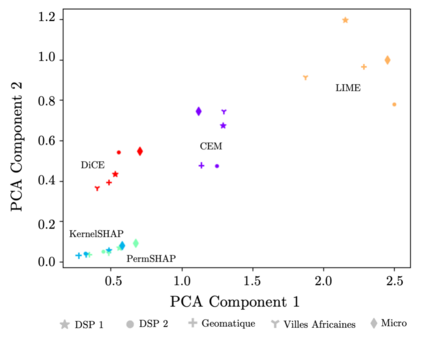

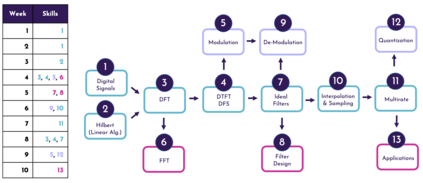

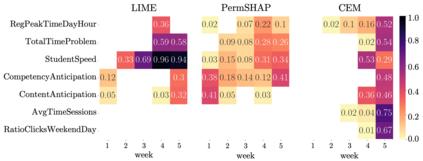

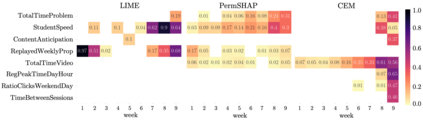

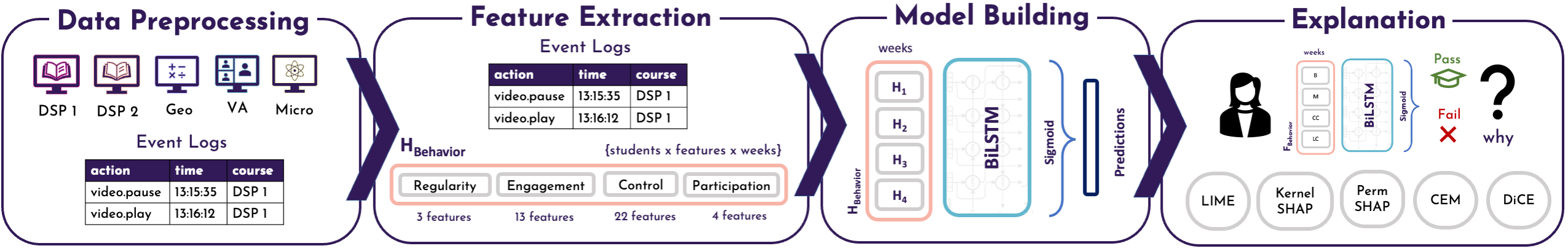

Neural networks are ubiquitous in applied machine learning for education. Their pervasive success in predictive performance comes with a severe weakness: their decisions lack explainability, which is especially problematic in human-centric fields. We implement five state-of-the-art methodologies for explaining black-box machine learning models (LIME, PermutationSHAP, KernelSHAP, DiCE, CEM) and examine the strengths of each approach on the downstream task of student performance prediction for five massive open online courses. Our experiments demonstrate that the families of explainers do not agree with each other on feature importance for the same Bidirectional LSTM models with the same representative set of students. We use Principal Component Analysis, Jensen-Shannon distance, and Spearman's rank-order correlation to quantitatively cross-examine explanations across methods and courses. Furthermore, we validate explainer performance across curriculum-based prerequisite relationships. Our results lead to the concerning conclusion that the choice of explainer is an important decision and is in fact paramount to the interpretation of the predictive results, even more so than the course the model is trained on. Source code and models are released at http://github.com/epfl-ml4ed/evaluating-explainers.

翻译:应用机器教学的神经网络无处不在,在应用机器教学的教育中,其预测性表现普遍的成功与严重缺陷相伴而生,其决定缺乏解释性,特别是在以人为中心的领域。我们采用五种最先进的方法解释黑箱机器学习模式(LIME、变异SHAP、KernelSHAP、DICE、CEM),并审查在五个大规模开放式在线课程的学生成绩预测下游任务中每种方法的优点。我们的实验表明,解释者家属在同一个有代表性的学生组成的双向LSTM模型的特征重要性上并不相互一致。我们使用主构件分析、Jensen-Shannon距离和Spearman的级级顺序相关性来对不同方法和课程的解释进行定量交叉审查。此外,我们验证基于课程的先决条件关系中的解释性表现。我们的结论是,选择解释者是一项重要决定,事实上对预测结果的解释至关重要,甚至比模型上的课程更为重要。我们使用的源码和模型是在http://flgimaslambrelactionsal4上发布。