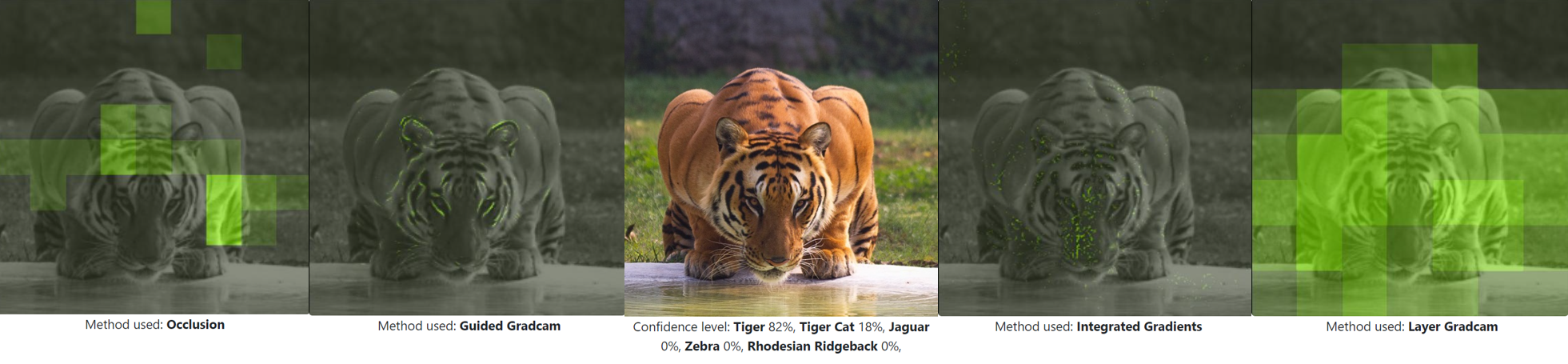

The currently dominant artificial intelligence and machine learning technology, neural networks, builds on inductive statistical learning. Today's neural networks are information processing systems devoid of understanding and reasoning capabilities; consequently, they cannot explain the decisions they promote in a humanly valid form. In this work, we revisit fundamental theories from the philosophy of science and use them as an analytical lens, with the goal of revealing what can be expected, and more importantly what cannot be expected, from methods that aim to explain decisions promoted by a neural network. Through a case study, we investigate the performance of a selection of explainability methods over two mundane domains, animals and headgear. Our study lays bare that the usefulness of these methods relies on human domain knowledge and on our ability to understand, generalise and reason. The explainability methods can be useful when the goal is to gain further insight into a trained neural network's strengths and weaknesses. If our aim is instead to use these methods to promote actionable decisions or to build trust in ML models, they need to be less ambiguous than they are today. We conclude from our study that benchmarking explainability methods is a central quest towards trustworthy artificial intelligence and machine learning.

翻译:当今的神经网络是信息处理系统,没有理解和推理能力,因此,它们无法以人性有效的形式解释促进决策。在这项工作中,我们重新审视并使用科学理论的基本哲学作为分析透镜,目的是揭示、预期什么,更重要的是,从旨在解释神经网络所推动的决定的方法中可以预见到哪些,更重要的是,不是预期到什么。通过进行案例研究,我们调查了在两个平坦的领域,即动物和头盔上如何选择解释方法的性能。通过我们的研究,我们发现这些方法的有用性取决于人类领域知识以及我们理解、概括和理性的能力。当我们的目标是进一步深入了解经过训练的神经网络的长处和弱点时,解释方法是有用的。如果我们的目的是利用这些解释方法来促进可操作的决定或建立对ML模型的信任,它们需要比今天更加模糊。我们从研究中得出的结论是,基准化的方法是走向可信赖的机器学习。