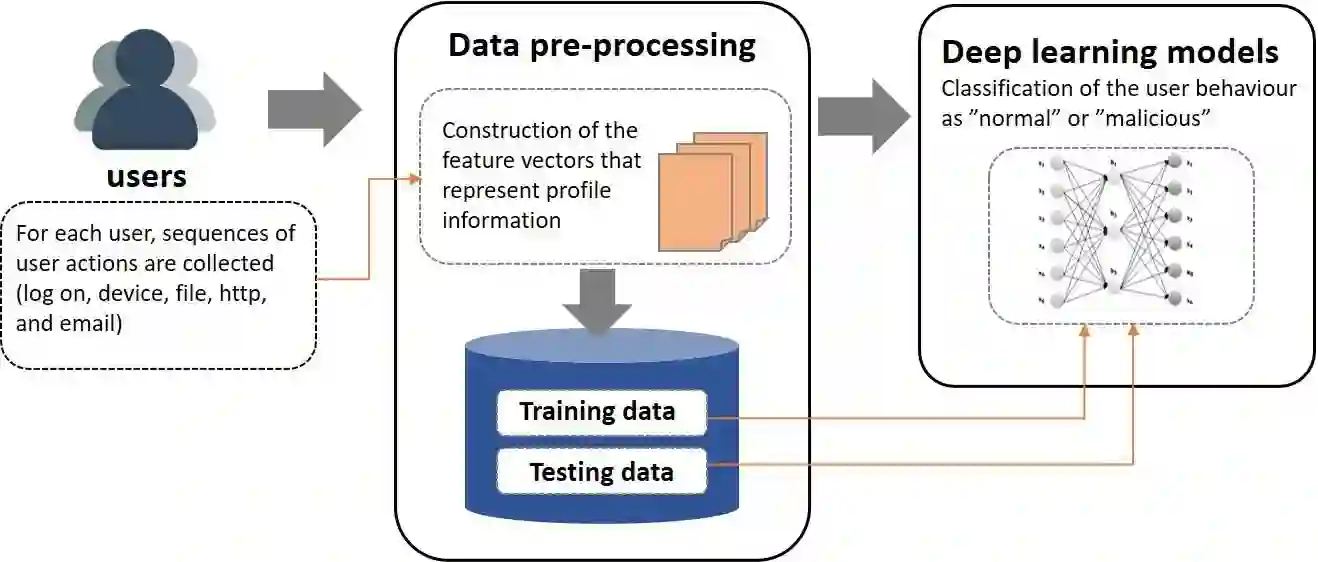

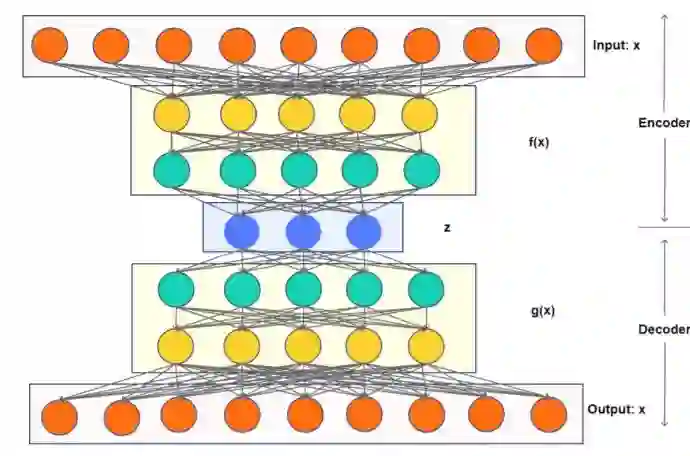

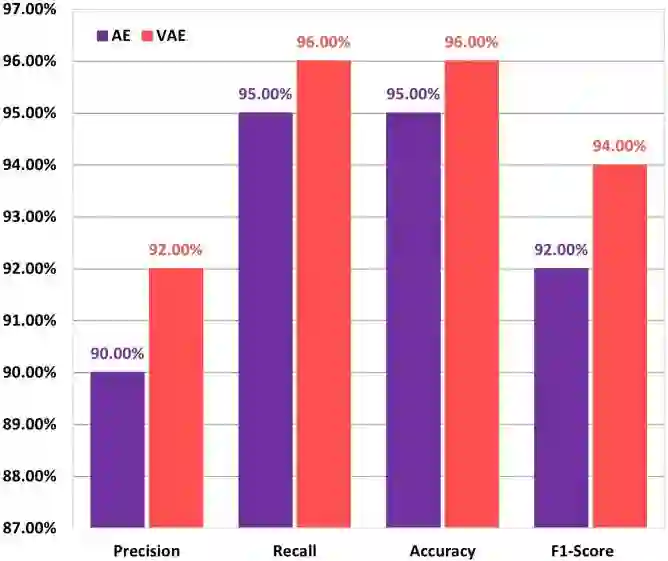

Insider attacks are one of the most challenging cybersecurity issues for companies, businesses and critical infrastructures. Despite the perimeter defences in place, the risk of this kind of attack remains very high. Detecting insider attacks is a complex security task and a serious challenge for the research community. In this paper, we address this issue with two deep learning models, the Autoencoder and the Variational Autoencoder. In particular, we investigate whether these models can be used to defend automatically against potential insider threats, without human intervention. We evaluate both models on the public CERT Insider Threat Test dataset, version r4.2, which contains benign and malicious activities generated by 1,000 simulated users. Comparison with other models shows that the Variational Autoencoder gives the best overall performance, with higher detection accuracy and a reasonable false positive rate.
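The idea behind autoencoder-based insider-threat detection can be illustrated with a minimal sketch, under loudly stated assumptions: a single-layer *linear* autoencoder (not the deep Autoencoder or Variational Autoencoder evaluated in the paper) is trained with SGD on benign user-day feature vectors only, and a day is flagged as suspicious when its reconstruction error exceeds a mean-plus-three-standard-deviations threshold. The three features, the synthetic data, the threshold rule, and every function name (`train_linear_autoencoder`, `reconstruction_error`, `make_benign_days`, `detection_metrics`) are hypothetical and do not come from CERT r4.2 or from the paper.

```python
import random
import statistics


def encode(enc, x):
    # Linear code h = enc @ x (no bias, no nonlinearity).
    return [sum(w * xk for w, xk in zip(row, x)) for row in enc]


def decode(dec, h):
    # Linear reconstruction x_hat = dec @ h.
    return [sum(w * hj for w, hj in zip(row, h)) for row in dec]


def train_linear_autoencoder(data, hidden=1, lr=0.05, epochs=200, seed=0):
    """Train on benign samples only, minimising 0.5 * ||x_hat - x||^2 with per-sample SGD."""
    rng = random.Random(seed)
    d = len(data[0])
    enc = [[rng.uniform(-0.1, 0.1) for _ in range(d)] for _ in range(hidden)]
    dec = [[rng.uniform(-0.1, 0.1) for _ in range(hidden)] for _ in range(d)]
    for _ in range(epochs):
        for x in data:
            h = encode(enc, x)
            err = [xh - xk for xh, xk in zip(decode(dec, h), x)]
            # Gradient w.r.t. the code, computed before the decoder is updated.
            grad_h = [sum(err[i] * dec[i][j] for i in range(d)) for j in range(hidden)]
            for i in range(d):
                for j in range(hidden):
                    dec[i][j] -= lr * err[i] * h[j]
            for j in range(hidden):
                for k in range(d):
                    enc[j][k] -= lr * grad_h[j] * x[k]
    return enc, dec


def reconstruction_error(enc, dec, x):
    # Anomaly score: squared reconstruction error.
    return sum((xh - xk) ** 2 for xh, xk in zip(decode(dec, encode(enc, x)), x))


def make_benign_days(n, rng):
    # Hypothetical normalised features per user-day: [logons, after-hours logons, USB events].
    # Benign days are synthetic and lie near the direction [1, 1, 0.5].
    days = []
    for _ in range(n):
        t = rng.random()
        days.append([t + rng.uniform(-0.05, 0.05),
                     t + rng.uniform(-0.05, 0.05),
                     0.5 * t + rng.uniform(-0.05, 0.05)])
    return days


def detection_metrics(benign_scores, malicious_scores, threshold):
    tp = sum(s > threshold for s in malicious_scores)
    fp = sum(s > threshold for s in benign_scores)
    tn = len(benign_scores) - fp
    detection_rate = tp / len(malicious_scores)
    false_positive_rate = fp / len(benign_scores)
    accuracy = (tp + tn) / (len(benign_scores) + len(malicious_scores))
    return detection_rate, false_positive_rate, accuracy


rng = random.Random(42)
train_days = make_benign_days(60, rng)
enc, dec = train_linear_autoencoder(train_days)

# Assumed threshold rule: mean + 3 standard deviations of the benign training errors.
train_errors = [reconstruction_error(enc, dec, x) for x in train_days]
threshold = statistics.mean(train_errors) + 3 * statistics.pstdev(train_errors)

benign_test = make_benign_days(40, rng)
malicious_test = [[0.2, 0.2, 3.0], [0.5, 0.5, 2.5]]  # synthetic bursts of USB activity
dr, fpr, acc = detection_metrics(
    [reconstruction_error(enc, dec, x) for x in benign_test],
    [reconstruction_error(enc, dec, x) for x in malicious_test],
    threshold,
)
print(f"detection rate={dr:.2f}  false positive rate={fpr:.2f}  accuracy={acc:.2f}")
```

Because the autoencoder sees only benign behaviour during training, it learns to reconstruct the correlated structure of normal days, and a day that breaks that structure (here, an unusual burst of removable-media events) reconstructs poorly. The accuracy and false positive rate computed at the end mirror the metrics named in the abstract; the detection rate (recall on malicious days) is added for illustration. A Variational Autoencoder would replace the deterministic code `h` with a latent variable sampled from a learned Gaussian and add a KL-divergence term to the reconstruction loss.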

翻译:内部攻击是公司、企业和重要基础设施最具有挑战性的网络安全问题之一。 尽管实施了周边防御,但这类攻击的风险仍然很高。事实上,发现内部攻击是一项非常复杂的安全任务,对研究界构成严重挑战。在本文中,我们的目标是通过使用深学算法Autoencoder和Variational Autoencoder深层来解决这一问题。我们将特别调查应用这些算法来自动防御潜在内部威胁的有用性,而不进行人类干预。这两个模型的有效性在公共数据集CERT数据集(CERT r4.2)上进行了评估。CERT 内部威胁测试数据集的这一版本包括1000个模拟用户产生的良性和恶意活动。与其他模型的比较结果显示,Variational Autencoder神经网络提供了更好的总体性表现,其检测准确性更高,而且具有合理的假正率。