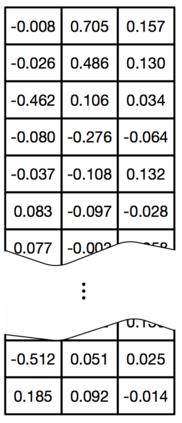

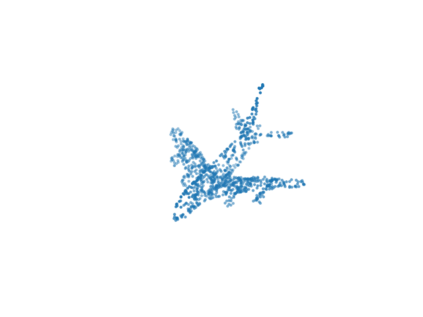

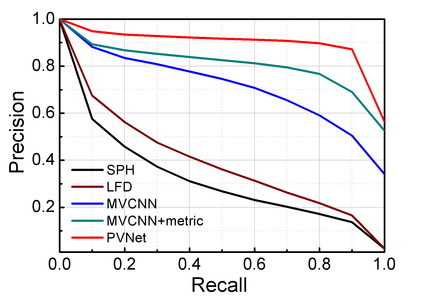

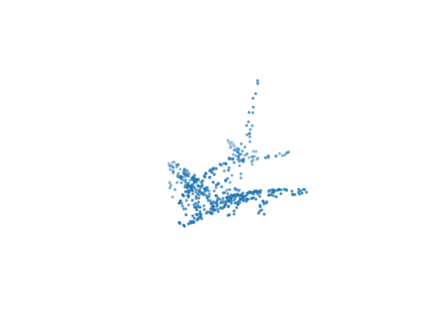

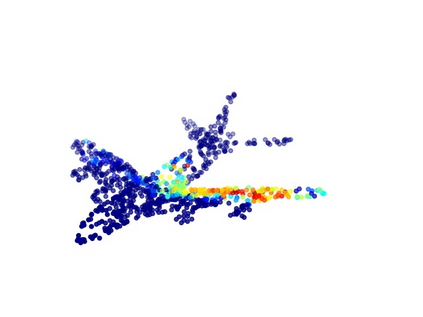

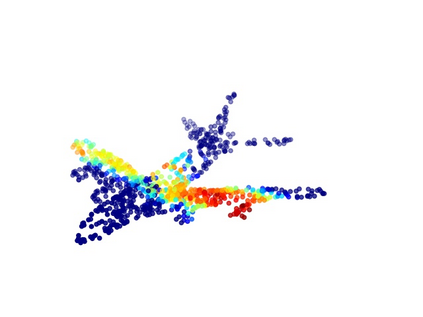

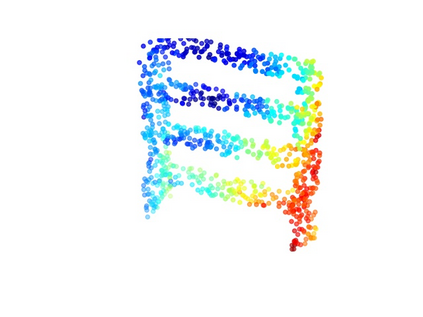

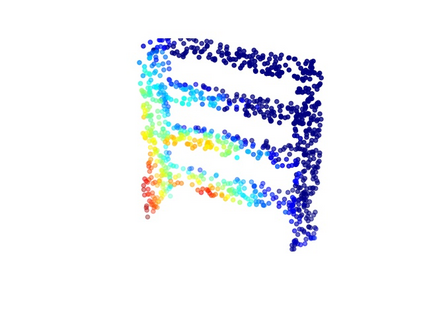

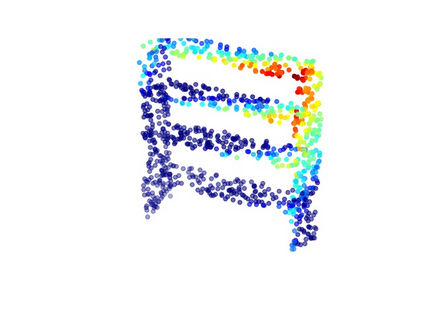

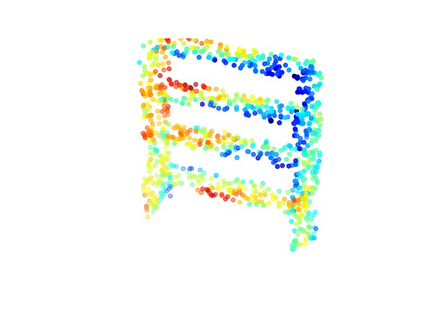

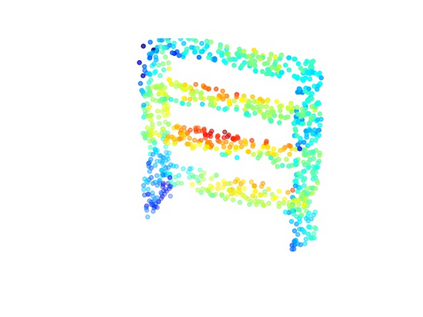

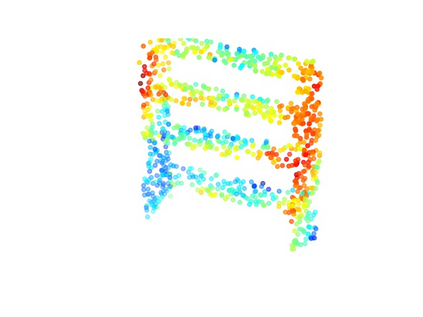

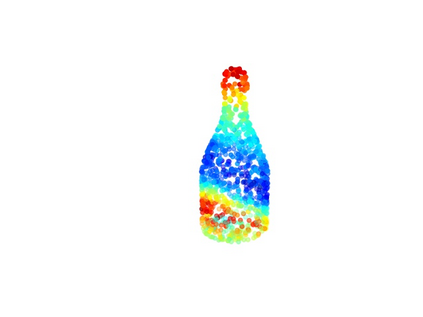

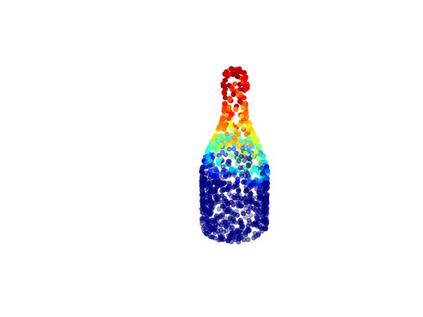

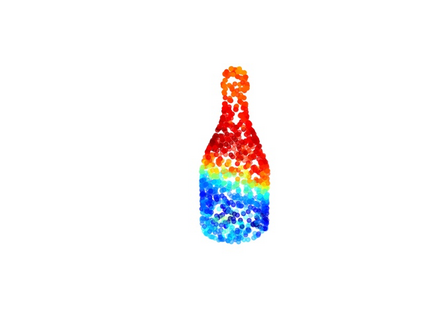

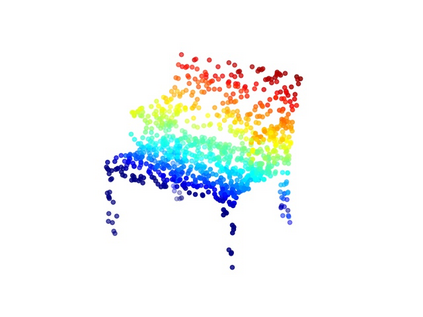

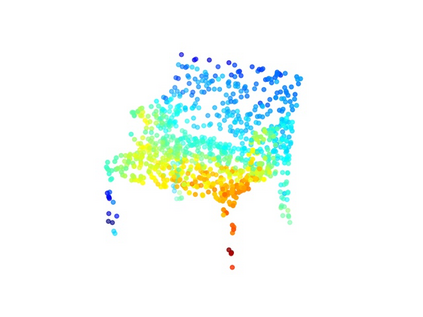

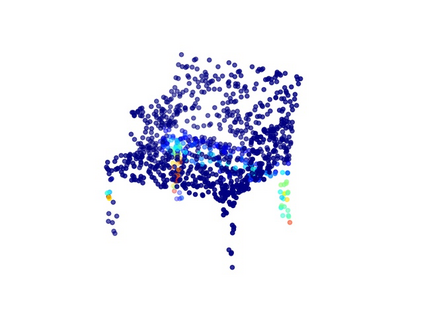

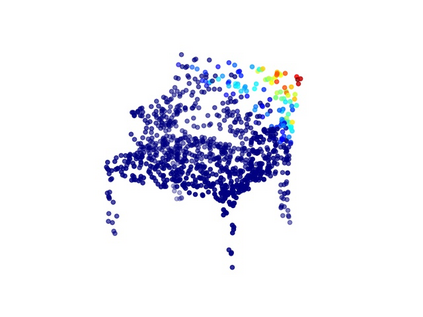

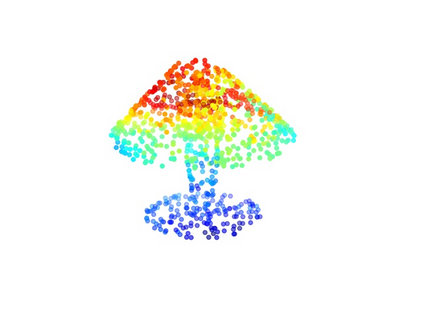

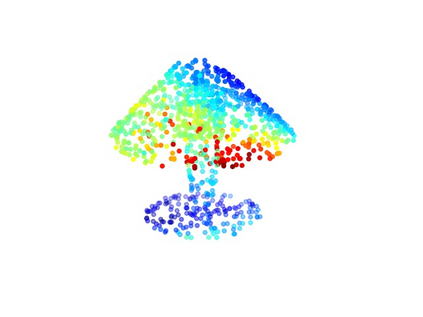

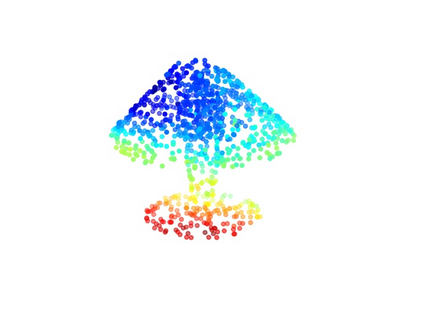

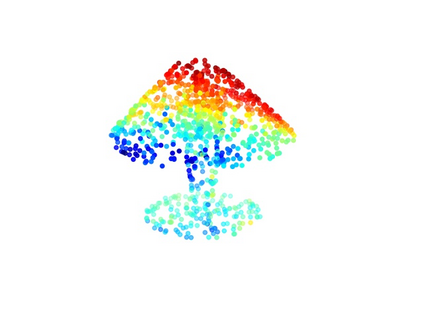

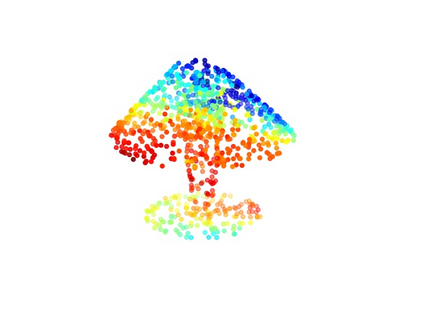

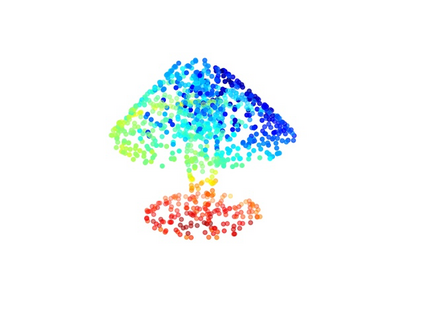

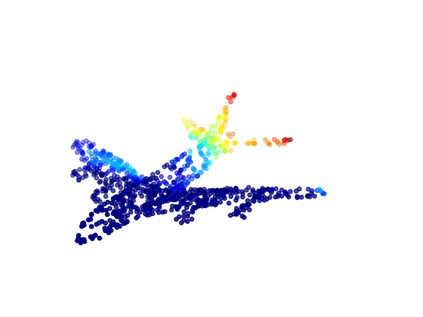

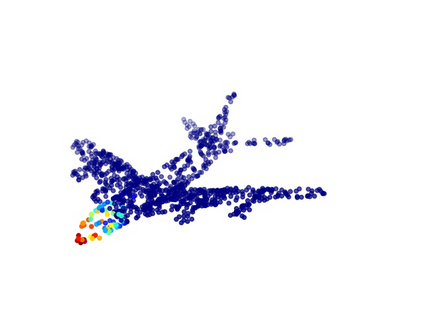

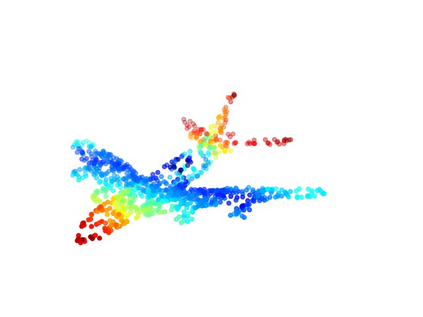

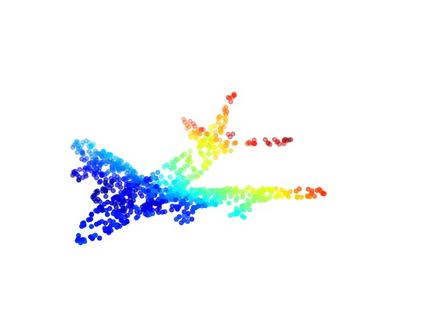

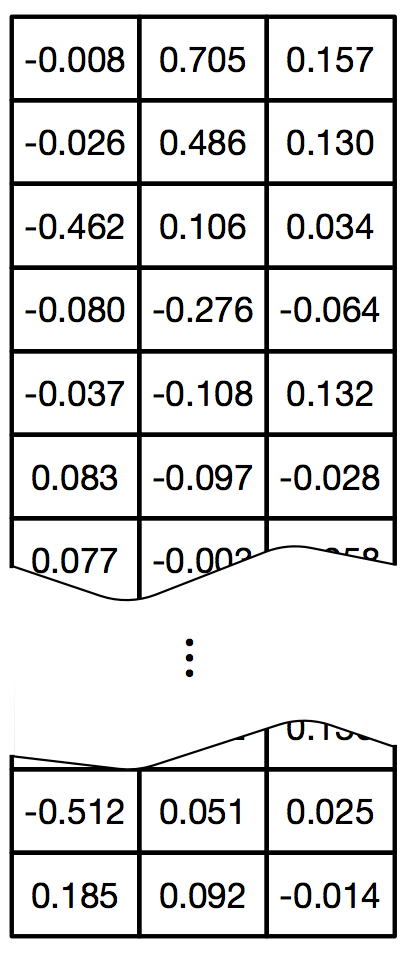

3D object recognition has attracted wide research attention in multimedia and computer vision. With the recent proliferation of deep learning, deep models built on different 3D representations have achieved state-of-the-art performance. Among these representations, point clouds and multi-view representations have recently shown particular promise, and their corresponding deep models perform strongly on 3D shape recognition. However, little effort has gone into combining point cloud data and multi-view data for 3D shape representation, even though we consider the two to be complementary. In this paper, we propose the Point-View Network (PVNet), the first framework that integrates both point cloud and multi-view data for joint 3D shape recognition. More specifically, we propose an embedding attention fusion scheme that uses high-level features from the multi-view data to model the intrinsic correlation and discriminability of different structure features from the point cloud data. In particular, the discriminative descriptions are quantified and used as a soft attention mask to further refine the structure features of the 3D shape. We evaluate the proposed method on the ModelNet40 dataset for 3D shape classification and retrieval. Experimental results and comparisons with state-of-the-art methods demonstrate that our framework achieves superior performance.
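The abstract describes the fusion only at a high level, so the following is an illustrative sketch of how a soft attention mask derived from a global view feature might re-weight per-point features. It is not PVNet's actual implementation. The function names, the concatenate-then-project design, the residual `1 + mask` re-weighting, and the random weight matrix `w_fuse` are all assumptions introduced for illustration; in a real model the weights would be learned.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def linear(vec, weights):
    """Multiply a vector (length d_in) by a d_in x d_out weight matrix."""
    return [sum(v * row[j] for v, row in zip(vec, weights))
            for j in range(len(weights[0]))]


def attention_fusion(point_feats, view_feat, w_fuse):
    """Refine per-point features with a soft mask driven by a view feature.

    point_feats: list of N per-point feature vectors, each of length C
    view_feat:   one global multi-view feature vector of length V
    w_fuse:      (C + V) x C weight matrix (hypothetical; would be learned)
    """
    refined = []
    for p in point_feats:
        # Pair each point feature with the same global view feature.
        joint = p + view_feat
        # Squash the projected scores into (0, 1): the soft attention mask.
        mask = [sigmoid(s) for s in linear(joint, w_fuse)]
        # Residual re-weighting (assumed): scale each channel by 1 + mask,
        # so the mask can amplify a channel but never zero it out.
        refined.append([x * (1.0 + m) for x, m in zip(p, mask)])
    return refined


# Toy data: 4 points with 3 channels each, one 5-dimensional view feature.
rng = random.Random(0)
N, C, V = 4, 3, 5
points = [[rng.uniform(-1, 1) for _ in range(C)] for _ in range(N)]
view = [rng.uniform(-1, 1) for _ in range(V)]
w = [[rng.gauss(0, 0.1) for _ in range(C)] for _ in range(C + V)]

refined = attention_fusion(points, view, w)
print(len(refined), len(refined[0]))
```

Because the mask lies strictly between 0 and 1, each refined channel keeps its sign and its magnitude grows by a factor between 1 and 2. This is one simple way to let view-level evidence emphasize discriminative point features without discarding any of them.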
