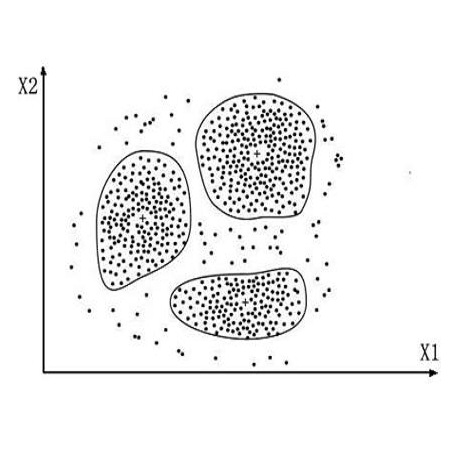

The application of AI in finance is increasingly dependent on the principles of responsible AI. These principles (explainability, fairness, privacy, accountability, transparency and soundness) form the basis for trust in future AI systems. In this study, we address the first principle, explainability, by explaining a deep neural network trained on a mixture of numerical, categorical and textual inputs for financial transaction classification. The explanation is achieved through (1) a feature importance analysis using SHapley Additive exPlanations (SHAP) and (2) a hybrid approach that combines text clustering with decision tree classifiers. We then test the robustness of the model by exposing it to a targeted evasion attack that leverages the knowledge about the model gained from the extracted explanation.
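To make the idea behind the feature importance analysis concrete, the following minimal sketch computes exact Shapley values for a single prediction of a tiny scoring function. It is an illustration only, not the authors' pipeline and not the SHAP library: `toy_model`, its three features (`amount`, `is_foreign`, `has_keyword`), their coefficients and the all-zero baseline are hypothetical, and exact enumeration over feature subsets is only feasible for a handful of features (SHAP approximates these values for larger models).

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one input x, relative to a baseline input."""
    n = len(x)
    features = range(n)

    def value(subset):
        # Features in `subset` take their real values; the rest stay at baseline.
        mixed = [x[i] if i in subset else baseline[i] for i in features]
        return model(mixed)

    phi = [0.0] * n
    for i in features:
        others = [j for j in features if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size: |S|! (n-|S|-1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_model(features):
    # Hypothetical stand-in for a trained classifier's score (assumption).
    amount, is_foreign, has_keyword = features
    return 0.5 * amount + 2.0 * is_foreign + 1.0 * amount * has_keyword


x = [4.0, 1.0, 1.0]          # hypothetical transaction to explain
baseline = [0.0, 0.0, 0.0]   # hypothetical reference input
phi = shapley_values(toy_model, x, baseline)

for name, contribution in zip(["amount", "is_foreign", "has_keyword"], phi):
    print(f"{name}: {contribution:.3f}")
```

The attributions sum to the difference between the model output at `x` and at the baseline, and the interaction term `amount * has_keyword` is split equally between the two features involved. An attacker who knows which features carry the largest attributions knows which inputs to perturb first, which is the link between explanation and evasion attack that the abstract describes.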
