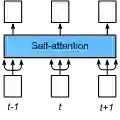

Multiview diffusion models have rapidly emerged as a powerful tool for creating content that is spatially consistent across viewpoints, offering rich visual realism without requiring explicit geometry and appearance representations. However, compared to meshes or radiance fields, existing multiview diffusion models offer limited control over appearance, particularly in terms of material, texture, or style. In this paper, we present a lightweight adaptation technique for appearance transfer in multiview diffusion models. Our method learns to combine object identity from an input image with appearance cues rendered in a separate reference image, producing multiview-consistent output that reflects the desired materials, textures, or styles. This allows explicit specification of appearance parameters at generation time while preserving the underlying object geometry and view coherence. We run three diffusion denoising processes, which generate the original object, the reference, and the target images respectively, and during reverse sampling we aggregate a small subset of layer-wise self-attention features from the object and the reference to influence the target generation. Our method requires only a few training examples to introduce appearance awareness to pretrained multiview models. Experiments show that our method provides a simple yet effective route to multiview generation with diverse appearance, supporting the adoption of implicit generative 3D representations in practice.
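To make the feature-aggregation idea concrete, the following is a minimal NumPy sketch of one plausible way self-attention features from the object and reference branches could influence the target branch. The abstract does not specify the aggregation rule, so the key/value concatenation used here is an assumption, and all names (`aggregated_self_attention`, `kv_obj`, `kv_ref`, the toy shapes) are hypothetical rather than taken from the paper.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def aggregated_self_attention(q_tgt, kv_tgt, kv_obj, kv_ref):
    """Target queries attend over target, object, and reference tokens.

    ASSUMPTION: aggregation is done by concatenating keys and values along
    the token axis. The paper may use a different (e.g. learned) rule.
    Each kv_* argument is a (keys, values) pair of shape (tokens, dim).
    """
    keys = np.concatenate([kv_tgt[0], kv_obj[0], kv_ref[0]], axis=0)
    values = np.concatenate([kv_tgt[1], kv_obj[1], kv_ref[1]], axis=0)
    scores = q_tgt @ keys.T / np.sqrt(q_tgt.shape[-1])
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ values, weights


# Toy features standing in for one self-attention layer at one denoising step.
rng = np.random.default_rng(0)
n_tokens, dim = 4, 8
q_tgt = rng.normal(size=(n_tokens, dim))
kv_tgt = (rng.normal(size=(n_tokens, dim)), rng.normal(size=(n_tokens, dim)))
kv_obj = (rng.normal(size=(n_tokens, dim)), rng.normal(size=(n_tokens, dim)))
kv_ref = (rng.normal(size=(n_tokens, dim)), rng.normal(size=(n_tokens, dim)))

out, weights = aggregated_self_attention(q_tgt, kv_tgt, kv_obj, kv_ref)
print(out.shape, weights.shape)
```

In this sketch the target branch keeps its own tokens in the attention pool, so object identity and reference appearance act as additional context rather than replacing the target features. Applying the operation only in a chosen subset of layers would correspond to the "small subset of layer-wise self-attention features" mentioned in the abstract.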
