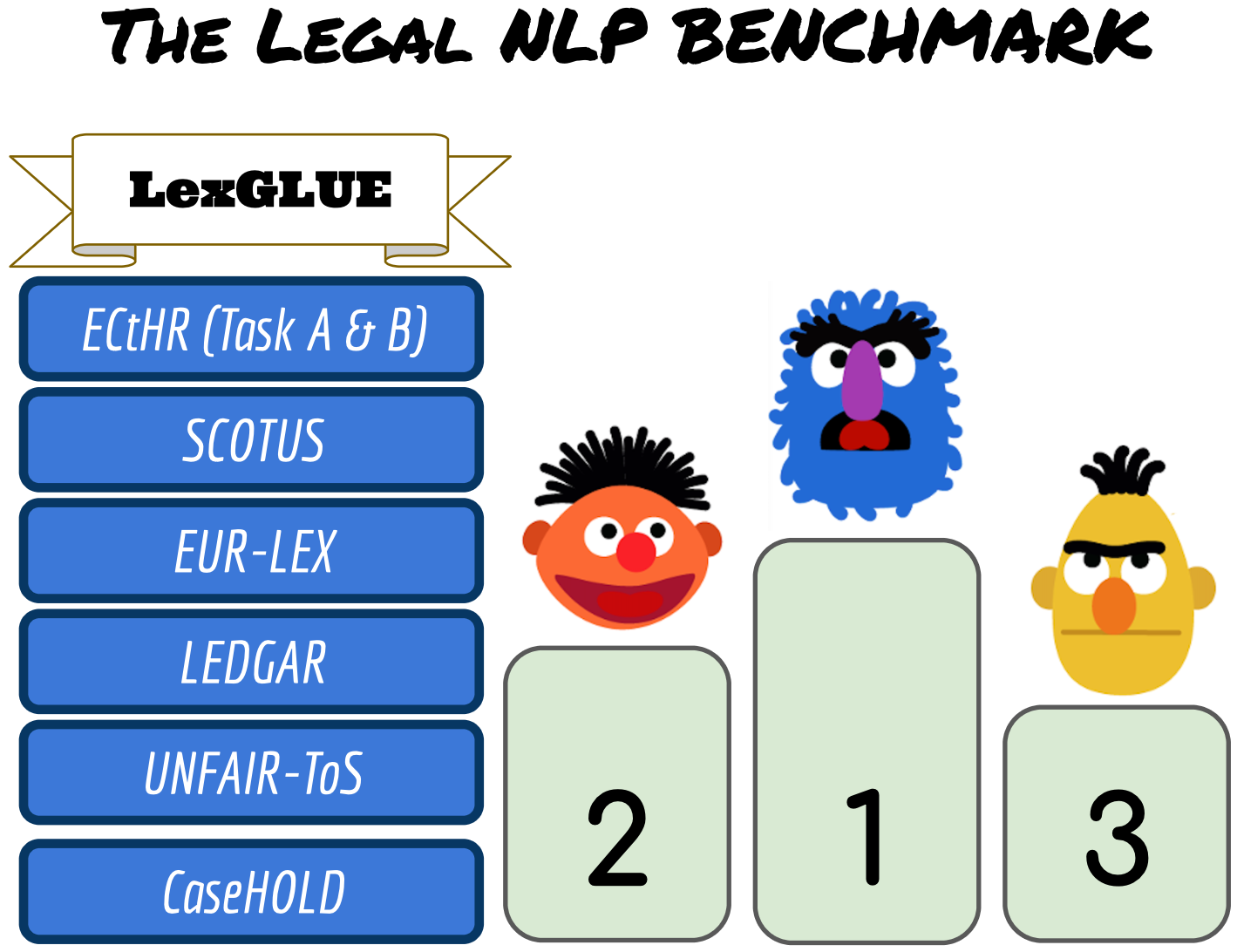

Law, interpretations of law, legal arguments, agreements, etc. are typically expressed in writing, leading to the production of vast corpora of legal text. Their analysis, which is at the center of legal practice, becomes increasingly elaborate as these collections grow in size. Natural language understanding (NLU) technologies can be a valuable tool to support legal practitioners in these endeavors. Their usefulness, however, largely depends on whether current state-of-the-art models can generalize across various tasks in the legal domain. To answer this currently open question, we introduce the Legal General Language Understanding Evaluation (LexGLUE) benchmark, a collection of datasets for evaluating model performance across a diverse set of legal NLU tasks in a standardized way. We also provide an evaluation and analysis of several generic and legal-oriented models demonstrating that the latter consistently offer performance improvements across multiple tasks.