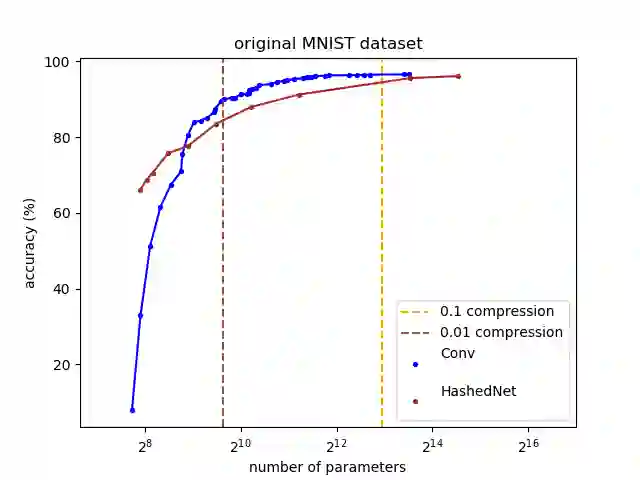

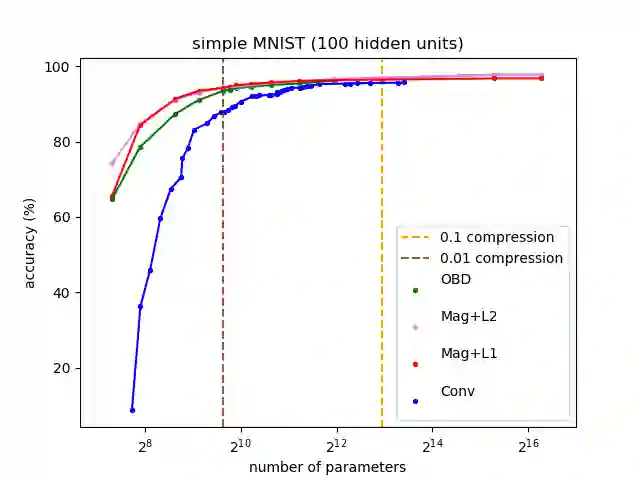

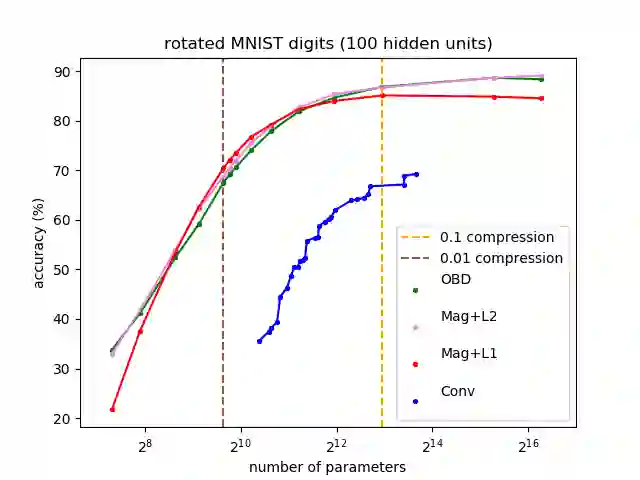

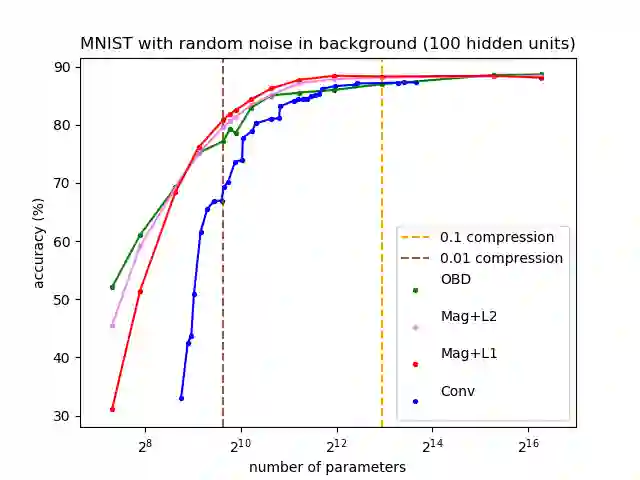

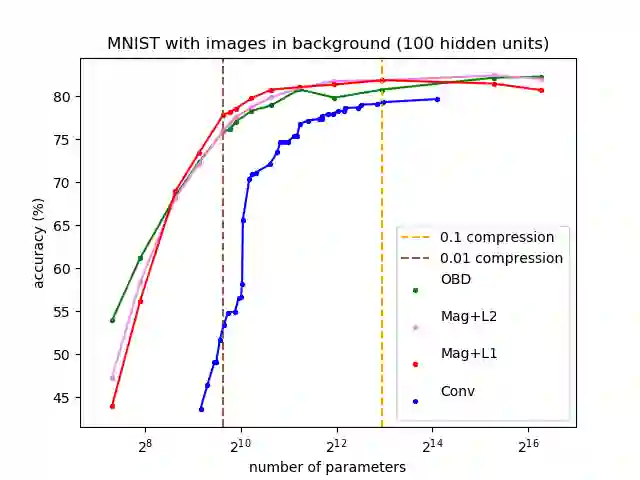

There has recently been a growing desire to evaluate neural networks locally on computationally limited devices in order to exploit their recent effectiveness in several applications. This effectiveness has, however, come with a considerable increase in the size of modern neural networks, which constitutes a major drawback in many of these computationally limited settings. There has thus been a demand for compression techniques for neural networks. Several proposals in this direction have been made, notably hashing-based methods and pruning-based ones. However, the evaluation of the efficacy of these techniques has so far been heterogeneous, with no clear evidence in favor of any of them over the others. The goal of this work is to address this issue by providing a comparative study. While most previous studies test the capability of a technique to reduce the number of parameters of state-of-the-art networks, we follow [CWT+15] in evaluating their performance on basic architectures on the MNIST dataset and variants of it, which allows for a clearer analysis of some aspects of their behavior. To the best of our knowledge, we are the first to directly compare well-known approaches such as HashedNet, Optimal Brain Damage (OBD), and magnitude-based pruning with L1 and L2 regularization, both among themselves and against equivalent-size feed-forward neural networks with simple (fully-connected) and structural (convolutional) architectures. Rather surprisingly, our experiments show that (iterative) pruning-based methods are substantially better than the HashedNet architecture, whose compression does not appear advantageous over a carefully chosen convolutional network. We also show that, when the compression level is high, the well-known OBD pruning heuristic deteriorates to the point of being less efficient than simple magnitude-based techniques.
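To make the contrast between the two pruning criteria named above concrete, here is a minimal NumPy sketch. It is not the paper's experimental code: the function names `prune_by_score` and `obd_saliency`, the toy weight matrix, and the diagonal Hessian values are all assumptions made for illustration. Magnitude-based pruning scores each weight by its absolute value, while OBD, in its standard diagonal-Hessian formulation, scores weight $w_i$ by $\tfrac{1}{2} h_{ii} w_i^2$. The retraining step that an iterative scheme would run between pruning rounds is omitted.

```python
import numpy as np

def prune_by_score(weights, scores, sparsity):
    """Zero out the `sparsity` fraction of weights with the lowest scores.

    Returns the pruned weights and the boolean keep-mask.
    """
    k = int(round(sparsity * weights.size))  # number of weights to remove
    mask = np.ones(weights.size, dtype=bool)
    if k > 0:
        # Flattened indices sorted by ascending score; drop the first k.
        order = np.argsort(scores, axis=None, kind="stable")
        mask[order[:k]] = False
    mask = mask.reshape(weights.shape)
    return weights * mask, mask

def obd_saliency(weights, hessian_diag):
    """OBD saliency under the diagonal-Hessian approximation: 0.5 * h_ii * w_i^2."""
    return 0.5 * hessian_diag * weights ** 2

# Toy layer (hypothetical values, chosen so the two criteria disagree).
w = np.array([[0.1, -2.0],
              [0.5,  1.0]])
# Assumed diagonal of the loss Hessian; in practice it is estimated
# with an extra backward pass over the training data.
h = np.array([[100.0, 0.01],
              [1.0,   1.0]])

pruned_mag, mask_mag = prune_by_score(w, np.abs(w), sparsity=0.5)
pruned_obd, mask_obd = prune_by_score(w, obd_saliency(w, h), sparsity=0.5)

print(mask_mag)  # magnitude keeps the two largest |w|
print(mask_obd)  # OBD keeps the small weight that sits on high curvature
```

In this toy case magnitude pruning discards the weight 0.1, whereas OBD keeps it because the assumed curvature at that position is large. The example only shows how the two rankings can differ. It says nothing about which criterion performs better at a given compression level, which is the question the study addresses empirically.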
