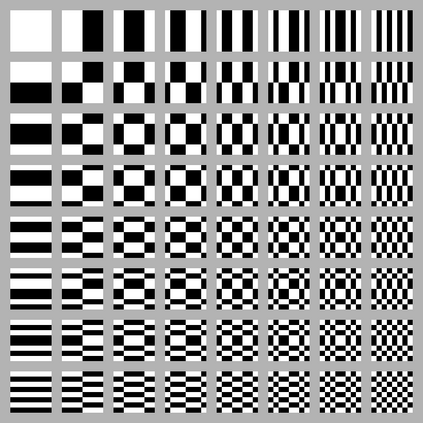

Most neural networks for computer vision are designed to run inference on RGB images. However, these images are commonly JPEG-encoded before being saved to disk, so decoding them imposes an unavoidable overhead on RGB networks. Instead, our work focuses on training Vision Transformers (ViTs) directly on the encoded features of JPEG. This way, we avoid most of the decoding overhead and accelerate data loading. Existing works have studied this approach, but they focus on CNNs. Because of how these encoded features are structured, CNNs require heavy architectural modification to accept such data. Here, we show that this is not the case for ViTs. In addition, we tackle data augmentation directly on these encoded features, which, to our knowledge, has not been explored in depth for training in this setting. With these two improvements (ViT and data augmentation), we show that our ViT-Ti model achieves up to 39.2% faster training and 17.9% faster inference with no accuracy loss compared to its RGB counterpart.
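To give a rough sense of why block-structured JPEG features could map naturally onto ViT patch tokens, the sketch below computes 8x8 blockwise DCT coefficients for a single luma channel and groups neighbouring blocks into flat tokens. This is an illustration under stated assumptions, not the pipeline used in this work: it recomputes the DCT from pixels, whereas a real JPEG file stores quantized, entropy-coded coefficients, usually with subsampled chroma. The function names (`dct_matrix`, `blockwise_dct`, `blocks_to_tokens`) and the 2x2 block grouping are hypothetical choices made for the example.

```python
import numpy as np

BLOCK = 8  # JPEG applies the DCT to 8x8 blocks of each channel


def dct_matrix(n: int = BLOCK) -> np.ndarray:
    """Orthonormal DCT-II basis matrix of size n x n."""
    k = np.arange(n)[:, None]  # frequency index (rows)
    x = np.arange(n)[None, :]  # sample index (columns)
    mat = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * x + 1) * k / (2 * n))
    mat[0, :] = np.sqrt(1.0 / n)  # DC row uses a different scale
    return mat


def blockwise_dct(channel: np.ndarray) -> np.ndarray:
    """2D DCT of every 8x8 block; output shape is (H/8, W/8, 8, 8)."""
    h, w = channel.shape
    d = dct_matrix()
    # Split the image into a grid of 8x8 blocks.
    blocks = channel.reshape(h // BLOCK, BLOCK, w // BLOCK, BLOCK)
    blocks = blocks.transpose(0, 2, 1, 3)
    # D @ X @ D^T, broadcast over the block grid.
    return d @ blocks @ d.T


def blocks_to_tokens(coeffs: np.ndarray, blocks_per_patch: int = 2) -> np.ndarray:
    """Group p x p neighbouring blocks into one flat token per patch.

    The grouping factor is an assumption for illustration only.
    """
    bh, bw = coeffs.shape[:2]
    p = blocks_per_patch
    grouped = coeffs.reshape(bh // p, p, bw // p, p, BLOCK, BLOCK)
    grouped = grouped.transpose(0, 2, 1, 3, 4, 5)
    return grouped.reshape((bh // p) * (bw // p), p * p * BLOCK * BLOCK)


# Synthetic 32x32 luma channel, level-shifted by 128 as JPEG does.
rng = np.random.default_rng(0)
luma = rng.integers(0, 256, size=(32, 32)).astype(np.float64) - 128.0
coeffs = blockwise_dct(luma)
tokens = blocks_to_tokens(coeffs)
print(tokens.shape)  # (4, 256)
```

Each token here covers a 16x16 pixel region, so the token sequence could in principle be passed to a linear patch-embedding layer in place of flattened RGB patches. Handling chroma channels at a different resolution is left out of this sketch.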
