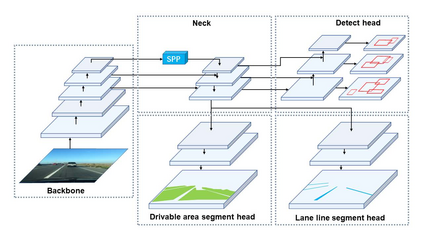

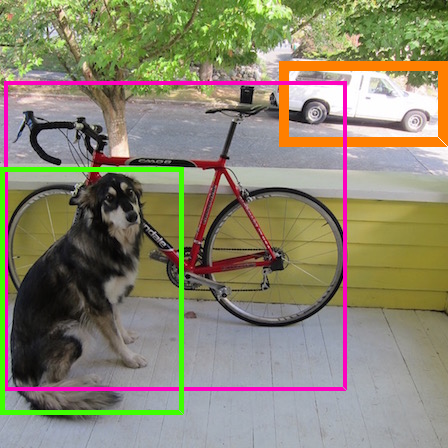

A panoptic driving perception system is an essential part of autonomous driving. A high-precision, real-time perception system can help the vehicle make reasonable decisions while driving. We present a panoptic driving perception network (YOLOP) that performs traffic object detection, drivable area segmentation, and lane detection simultaneously. It is composed of one encoder for feature extraction and three decoders that handle the specific tasks. Our model performs extremely well on the challenging BDD100K dataset, achieving state-of-the-art results on all three tasks in terms of accuracy and speed. In addition, we verify the effectiveness of our multi-task learning model for joint training via ablation studies. To the best of our knowledge, this is the first work that can process these three visual perception tasks simultaneously in real time on an embedded device, the Jetson TX2 (23 FPS), while maintaining excellent accuracy. To facilitate further research, the source code and pre-trained models will be released at https://github.com/hustvl/YOLOP.
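The one-encoder, three-decoder layout described above can be illustrated with a minimal, framework-free sketch. This is not the released YOLOP implementation: `MultiTaskNet`, `toy_encoder`, and the three `toy_*` decoders are hypothetical stand-ins, and plain lists stand in for images and feature maps. The sketch only shows that features are extracted once per frame and then shared by all task heads, and it derives the per-frame time budget implied by the reported 23 FPS.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Features = List[float]  # hypothetical stand-in for a feature map


@dataclass
class MultiTaskNet:
    """One shared encoder feeding several task-specific decoders."""
    encoder: Callable[[List[float]], Features]
    decoders: Dict[str, Callable[[Features], object]]

    def forward(self, image: List[float]) -> Dict[str, object]:
        features = self.encoder(image)  # computed once per frame
        # Every decoder consumes the same shared features.
        return {task: decode(features) for task, decode in self.decoders.items()}


encoder_calls = 0  # counts how often the shared encoder runs


def toy_encoder(image: List[float]) -> Features:
    """Toy feature extractor: scales pixel values to [0, 1]."""
    global encoder_calls
    encoder_calls += 1
    return [pixel / 255.0 for pixel in image]


def toy_detect(features: Features) -> List[int]:
    """Toy detection head: indices of strongly activated positions."""
    return [i for i, v in enumerate(features) if v > 0.8]


def toy_drivable(features: Features) -> List[int]:
    """Toy drivable-area head: binary mask of weakly activated positions."""
    return [int(v < 0.3) for v in features]


def toy_lane(features: Features) -> List[int]:
    """Toy lane head: binary mask of mid-range activations."""
    return [int(0.3 <= v <= 0.8) for v in features]


net = MultiTaskNet(
    encoder=toy_encoder,
    decoders={
        "detection": toy_detect,
        "drivable_area": toy_drivable,
        "lane": toy_lane,
    },
)

outputs = net.forward([0, 64, 128, 255])

# Time available per frame at the reported 23 FPS, in milliseconds.
frame_budget_ms = 1000 / 23
```

Because the encoder runs once regardless of how many decoders are attached, adding a task costs only the extra head, which is the main reason a shared-encoder design suits a tight per-frame budget (about 43.5 ms at 23 FPS).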
