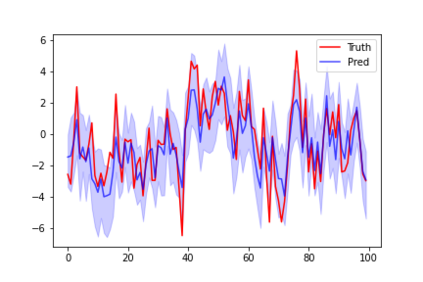

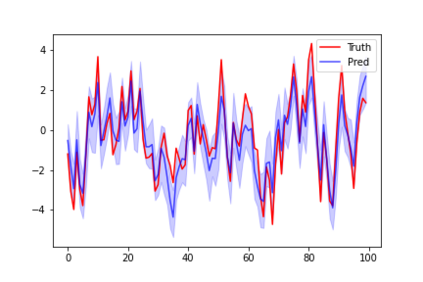

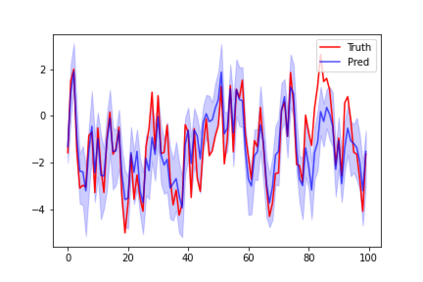

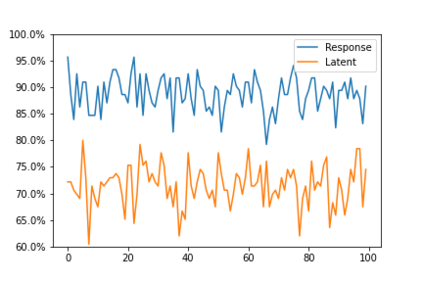

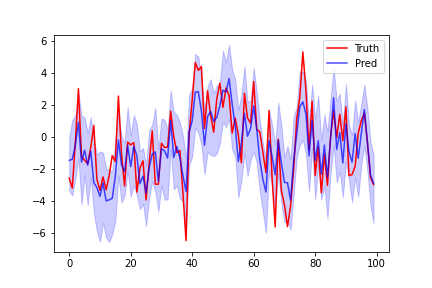

We introduce a new variant of deep state-space models (DSSMs) that combines a recurrent neural network with a state-space framework to forecast time series data. The model represents the observed series as functions of latent variables that evolve non-linearly over time. Because of the complexity and non-linearity inherent in DSSMs, previous work on these models has typically produced latent variables that are very difficult to interpret. Our paper focuses on producing interpretable latent variables through two key modifications. First, we simplify the predictive decoder by restricting the response variables to be a linear transformation of the latent variables plus noise. Second, we place shrinkage priors on the latent variables to reduce redundancy and improve robustness. These changes make the latent variables much easier to understand and allow us to interpret them as random effects in a linear mixed model. Experiments on two public benchmark datasets show that the resulting model improves forecasting performance.
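The two modifications can be illustrated with a toy generative sketch. It is not the authors' implementation, and several pieces are assumptions. A one-layer `tanh` recurrence stands in for the trained recurrent network. Horseshoe-style half-Cauchy scales stand in for the shrinkage prior, since the abstract does not name the prior. The function names `sample_linear_decoder_dssm` and `implied_marginal_cov` are hypothetical. The decoder is linear, `y_t = B z_t + eps_t`. Writing `z_t = m_t + u_t` with `u_t ~ N(0, diag(s^2))` gives one possible reading of the mixed-model view: `B m_t` acts as the fixed part and `u_t` as a random effect. Under that reading, the covariance of `y_t` given `m_t` is `B diag(s^2) B^T + sigma^2 I`.

```python
import numpy as np


def sample_linear_decoder_dssm(T, obs_dim, latent_dim, noise_sd=0.1, seed=0):
    """Simulate a toy DSSM: non-linear latent transition, linear decoder.

    Hypothetical sketch: the tanh recurrence stands in for a trained RNN, and the
    horseshoe-style scales stand in for an unspecified shrinkage prior.
    """
    rng = np.random.default_rng(seed)
    # Transition weights of a one-layer tanh recurrence (assumed stand-in for the RNN).
    A = rng.normal(scale=0.5, size=(latent_dim, latent_dim))
    # Linear decoder loadings: y_t = B z_t + eps_t.
    B = rng.normal(size=(obs_dim, latent_dim))
    # Horseshoe-style scales (assumption): global tau and local lambda_k, each |Cauchy(0, 1)|.
    tau = abs(rng.standard_cauchy())
    lam = np.abs(rng.standard_cauchy(size=latent_dim))
    prior_sd = tau * lam  # small entries shrink that latent dimension toward its mean

    z = np.zeros((T, latent_dim))
    y = np.zeros((T, obs_dim))
    z_prev = np.zeros(latent_dim)
    for t in range(T):
        mean_t = np.tanh(A @ z_prev)  # non-linear evolution of the latent mean
        z[t] = mean_t + prior_sd * rng.normal(size=latent_dim)  # random effect around the mean
        y[t] = B @ z[t] + noise_sd * rng.normal(size=obs_dim)  # linear decoder plus noise
        z_prev = z[t]
    return z, y, B, prior_sd


def implied_marginal_cov(B, prior_sd, noise_sd):
    """Covariance of y_t given the latent mean when u_t ~ N(0, diag(prior_sd**2)).

    Mixed-model reading (assumption): Cov(y_t | m_t) = B D B^T + noise_sd^2 I.
    """
    D = np.diag(prior_sd ** 2)
    return B @ D @ B.T + noise_sd ** 2 * np.eye(B.shape[0])


if __name__ == "__main__":
    z, y, B, prior_sd = sample_linear_decoder_dssm(T=50, obs_dim=4, latent_dim=3)
    print(z.shape, y.shape)  # (50, 3) (50, 4)
    print(implied_marginal_cov(B, prior_sd, noise_sd=0.1).shape)  # (4, 4)
```

Because the decoder is linear, each column of `B` shows directly how one latent dimension loads onto the observed series. The shrinkage scales then indicate which dimensions carry little signal. That is the interpretability argument the abstract makes, shown here only under the stated assumptions.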

翻译:我们引入了新的深层状态-空间模型(DSSMs)新版本, 将经常性神经网络与状态-空间框架结合起来, 以预测时间序列数据。 模型估计所观测到的序列是非线性地随时间演变的潜在变量的函数。 由于DSSMs固有的复杂性和非线性, DSSMs先前的工作通常会产生非常难以解释的潜在变量。 我们的文件重点是用两个关键修改来生成可解释的潜在参数。 首先, 我们通过限制潜在变量和一些噪音的直线变换来简化预测解密变量。 其次, 我们利用潜在变量的缩缩缩前端来减少冗余, 提高稳健性。 这些变化使得潜在变量更容易理解, 并使我们能够将由此产生的潜在变量解释为线性混合模型的随机效应。 我们通过两个公共基准数据集来显示由此产生的模型改进了预测性。