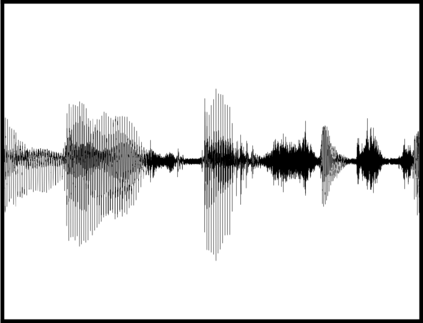

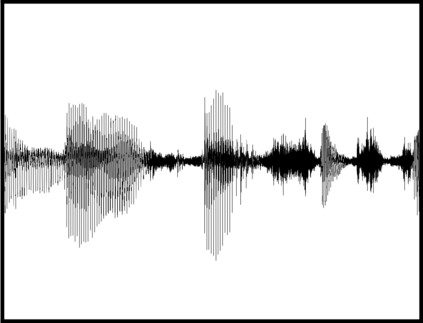

Adversarial attacks pose a threat to deep learning models. However, research on adversarial detection methods, especially in the multi-modal domain, remains very limited. In this work, we propose an efficient and straightforward detection method based on the temporal correlation between the audio and video streams. The main idea is that, because of the added adversarial noise, the correlation between audio and video will be lower in adversarial examples than in benign examples. We use the synchronisation confidence score as a proxy for audiovisual correlation and detect adversarial attacks based on this score. To the best of our knowledge, this is the first work on the detection of adversarial attacks on audiovisual speech recognition models. We apply recent adversarial attacks to two audiovisual speech recognition models trained on the GRID and LRW datasets. The experimental results demonstrate that the proposed approach is an effective way of detecting such attacks.
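As a rough illustration of the detection idea, the sketch below assumes that per-frame audio and video embeddings are already available from a pretrained synchronisation network (for example, a SyncNet-style model; the encoders themselves are not shown). It also assumes a SyncNet-style definition of the confidence score: the mean audio-video embedding distance is computed at each candidate temporal offset, and the confidence is the median of these distances minus their minimum. A clip is flagged as adversarial when this confidence falls below a threshold. The function names, the offset range `max_offset`, and the `threshold` value are hypothetical choices for illustration, not the authors' settings.

```python
import math
from statistics import median
from typing import List, Sequence

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def sync_confidence(video_emb: List[Vector], audio_emb: List[Vector],
                    max_offset: int = 15) -> float:
    """Hypothetical SyncNet-style confidence: median minus minimum of the
    mean audio-video distance over temporal offsets in [-max_offset, max_offset]."""
    n = min(len(video_emb), len(audio_emb))
    mean_dists = []
    for offset in range(-max_offset, max_offset + 1):
        # Pair video frame t with audio frame t + offset wherever both exist.
        pairs = [(video_emb[t], audio_emb[t + offset])
                 for t in range(n) if 0 <= t + offset < n]
        if pairs:
            mean_dists.append(sum(euclidean(v, a) for v, a in pairs) / len(pairs))
    # A well-synchronised clip has one offset with a distinctly small distance,
    # which makes the gap between the median and the minimum large.
    return median(mean_dists) - min(mean_dists)


def is_adversarial(video_emb: List[Vector], audio_emb: List[Vector],
                   threshold: float) -> bool:
    """Flag a clip whose synchronisation confidence is below the (assumed) threshold."""
    return sync_confidence(video_emb, audio_emb) < threshold


if __name__ == "__main__":
    # Toy embeddings: identical sinusoids stand in for a synchronised pair,
    # a constant audio embedding stands in for a pair whose correlation is destroyed.
    video = [[math.sin(t), math.cos(t)] for t in range(40)]
    flat_audio = [[0.0, 0.0] for _ in range(40)]
    print(sync_confidence(video, video), is_adversarial(video, video, threshold=0.5))
    print(sync_confidence(video, flat_audio), is_adversarial(video, flat_audio, threshold=0.5))
```

In the toy example, identical embeddings give zero distance at offset 0 and a large confidence, so the clip is not flagged; with a constant audio embedding every offset yields the same mean distance, the confidence drops to about zero, and the clip is flagged. In this sketch the threshold is simply a parameter; one plausible way to set it is from confidence scores measured on benign data.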

翻译:反向攻击对深层学习模式构成威胁。然而,关于对抗性探测方法的研究,特别是在多模式领域,却非常有限。在这项工作中,我们根据音频和视频流之间的时间相关性提出一种高效和直截了当的探测方法。主要的想法是,由于增加了对抗性噪音,对抗性例子中的音频和视频的相关性将低于良性例子。我们使用同步性信任评分作为视听相关性的代名词,并以此为基础,我们可以探测对抗性攻击。据我们所知,这是对视听语音识别模型进行对抗性攻击的首次调查。我们最近对在全球资源信息数据库和LRW数据集培训的两种视听语音识别模型采用了对抗性攻击。实验结果表明,拟议的方法是发现这类攻击的有效方法。