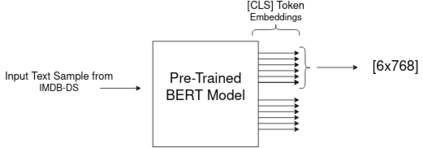

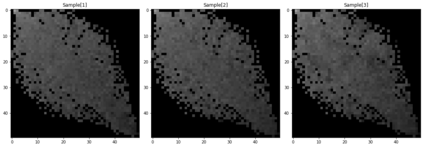

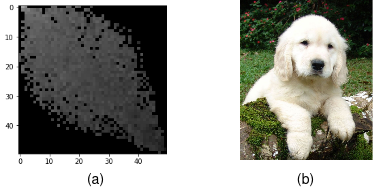

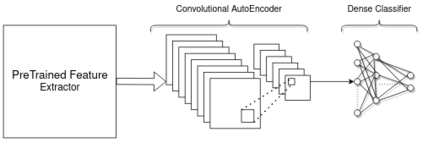

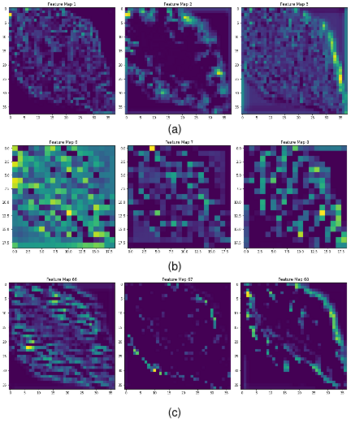

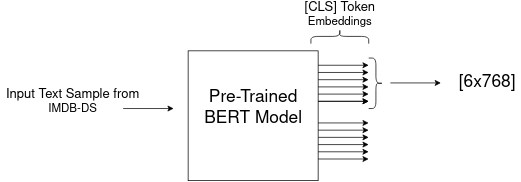

Humans acquire knowledge through experience, and no boundary separates the kinds of knowledge or skill levels we can attain on different tasks at the same time. This is not the case for neural networks: the major breakthroughs in the field are extremely task- and domain-specific. Vision and language are handled separately, using separate methods and different datasets. In this work, we propose to use knowledge acquired by benchmark vision models trained on ImageNet to help a much smaller architecture learn to classify text, after transforming the textual data contained in the IMDB dataset into grayscale images. We analyze the different domains and the transfer learning method. Despite the challenge posed by the very different datasets, promising results are achieved. The main contribution of this work is a novel approach that links large pretrained models for both language and vision to achieve state-of-the-art results in sub-fields different from the original task, without requiring high compute capacity. Specifically, sentiment analysis is achieved after transferring knowledge between vision and language models: BERT embeddings are transformed into grayscale images, which are then used as training examples for pretrained vision models such as VGG16 and ResNet.

Index Terms: Natural language, Vision, BERT, Transfer Learning, CNN, Domain Adaptation.
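The embedding-to-image step mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not specify the embedding size, the image shape, or the scaling scheme, so everything here is an assumption made for illustration: a 768-dimensional vector (the hidden size of BERT-base), a 24 x 32 layout, min-max scaling to the 0..255 range, and the hypothetical helper names `embedding_to_grayscale` and `to_three_channels`. A random vector stands in for a real BERT embedding so the example runs without any model download.

```python
import random


def embedding_to_grayscale(embedding, height, width):
    """Min-max scale a flat embedding to 0..255 and reshape it into rows.

    Assumption: this scaling is one plausible choice, not necessarily
    the transformation used by the authors.
    """
    if len(embedding) != height * width:
        raise ValueError("embedding length must equal height * width")
    lo, hi = min(embedding), max(embedding)
    span = hi - lo
    if span == 0:
        # A constant vector carries no contrast; map it to an all-black image.
        pixels = [0] * len(embedding)
    else:
        pixels = [round(255 * (v - lo) / span) for v in embedding]
    # Slice the flat pixel list into `height` rows of `width` pixels each.
    return [pixels[r * width:(r + 1) * width] for r in range(height)]


def to_three_channels(image):
    """Copy the gray channel three times, since ImageNet-pretrained
    models such as VGG16 and ResNet expect 3-channel input."""
    return [[row[:] for row in image] for _ in range(3)]


# Stand-in for one 768-dimensional embedding (hypothetical values,
# not produced by BERT).
rng = random.Random(0)
fake_embedding = [rng.gauss(0.0, 1.0) for _ in range(768)]

gray = embedding_to_grayscale(fake_embedding, 24, 32)  # 24 * 32 = 768
rgb = to_three_channels(gray)
print(len(gray), len(gray[0]), len(rgb))  # prints: 24 32 3
```

In a real pipeline the resulting array would typically be built with NumPy or PyTorch and resized to the input resolution the pretrained vision model expects; plain lists are used here only to keep the sketch dependency-free.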
