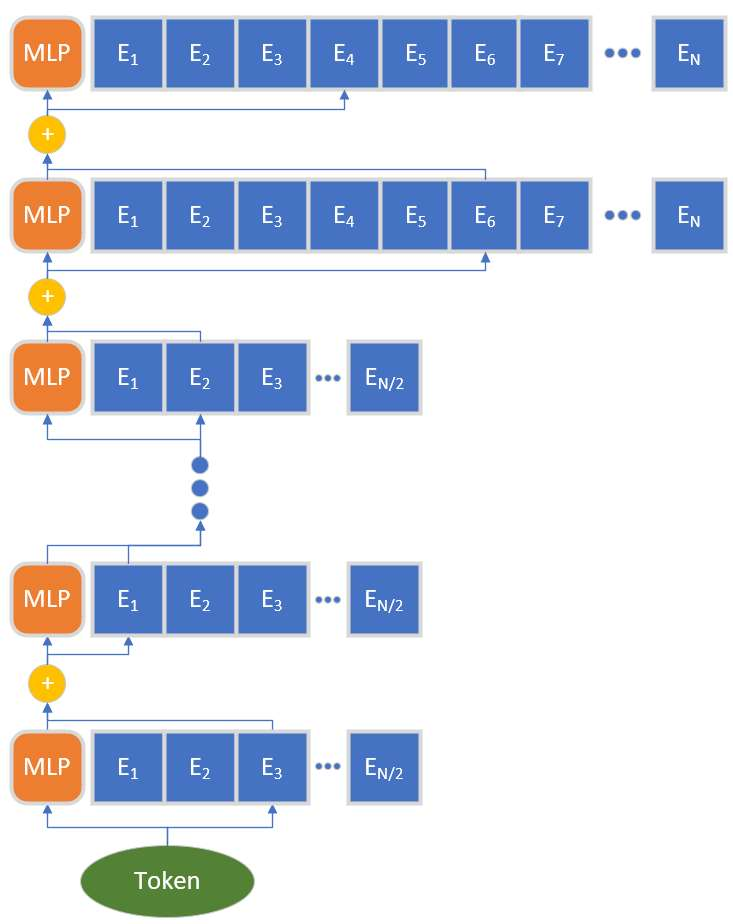

As the training of giant dense models hits the limits of the availability and capability of today's hardware resources, Mixture-of-Experts (MoE) models have become one of the most promising model architectures due to their significant training cost reduction compared to quality-equivalent dense models. Their training cost savings have been demonstrated from encoder-decoder models (prior works) to a 5x saving for autoregressive language models (this work along with parallel explorations). However, due to the much larger model size and unique architecture, how to provide fast MoE model inference remains challenging and unsolved, limiting their practical usage. To tackle this, we present DeepSpeed-MoE, an end-to-end MoE training and inference solution that is part of the DeepSpeed library. It includes novel MoE architecture designs and model compression techniques that reduce MoE model size by up to 3.7x, and a highly optimized inference system that provides 7.3x better latency and cost compared to existing MoE inference solutions. DeepSpeed-MoE offers unprecedented scale and efficiency to serve massive MoE models, with up to 4.5x faster and 9x cheaper inference compared to quality-equivalent dense models. We hope our innovations and systems help open a promising path to new directions in the large model landscape, a shift from dense to sparse MoE models, where training and deploying higher-quality models with fewer resources becomes more widely possible.
